Introduction to the BitNet Model

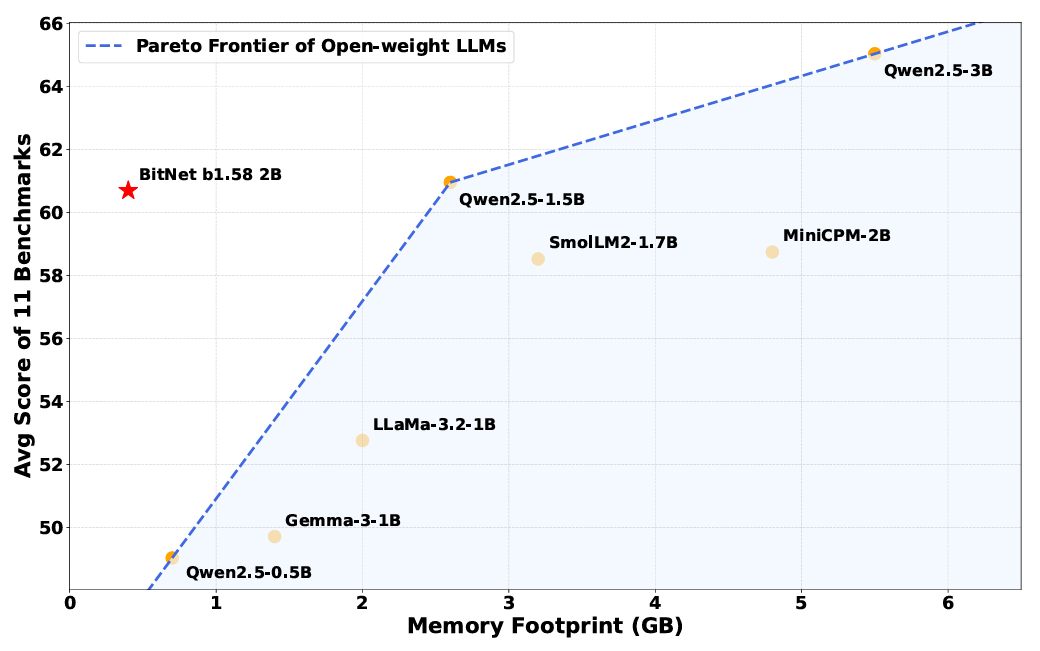

Reduced memory requirements are the most obvious advantage of simplifying a model’s internal weights. The BitNet b1.58 model can run using just 0.4GB of memory, compared to anywhere from 2 to 5GB for other open-weight models of roughly the same parameter count.
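The 0.4GB figure can be sanity-checked with some back-of-the-envelope arithmetic. The sketch below assumes a model of roughly 2 billion parameters (a figure not stated in this section) and counts only raw weight storage, ignoring activations, caches, and any packing overhead. The 1.58 bits per weight comes from log2(3), the information needed to store one of three possible values. The function name `weight_memory_gb` is purely illustrative.

```python
def weight_memory_gb(num_params: int, bits_per_weight: float) -> float:
    """Approximate raw weight storage in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Assumption: roughly 2 billion parameters; not a figure from this article.
NUM_PARAMS = 2_000_000_000

ternary_gb = weight_memory_gb(NUM_PARAMS, 1.58)  # about log2(3) bits per weight
fp16_gb = weight_memory_gb(NUM_PARAMS, 16)       # 16-bit floating-point weights

print(f"ternary weights: {ternary_gb:.3f} GB")
print(f"16-bit weights:  {fp16_gb:.3f} GB")
```

Under those assumptions the ternary estimate lands close to the reported 0.4GB, and the 16-bit estimate falls inside the 2 to 5GB range quoted for comparable models.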

Does Size Matter?

But the simplified weighting system also leads to more efficient operation at inference time, with internal operations that rely much more on simple addition instructions and less on computationally costly multiplication instructions. Those efficiency improvements mean BitNet b1.58 uses anywhere from 85 to 96 percent less energy compared to similar full-precision models, the researchers estimate.
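To see why simplified weights favor addition over multiplication, consider a toy matrix-vector product in which every weight is -1, 0, or +1 (the three values behind the "1.58-bit" name). This is a minimal sketch, not the researchers' kernel: real implementations work on packed weights with scaling factors, and the names `ternary_matvec` and `dense_matvec`, along with the sample data, are hypothetical.

```python
def ternary_matvec(weights, x):
    """Multiply a ternary weight matrix by a vector using only add/subtract.

    weights: list of rows, each entry is -1, 0, or +1
    x: list of input activations
    """
    out = []
    for row in weights:
        acc = 0.0
        for w, xi in zip(row, x):
            if w == 1:
                acc += xi   # +1 weight: add the activation
            elif w == -1:
                acc -= xi   # -1 weight: subtract the activation
            # 0 weight: the activation is skipped entirely
        out.append(acc)
    return out


def dense_matvec(weights, x):
    """Reference version using ordinary multiply-accumulate."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


W = [[1, 0, -1], [-1, 1, 1]]  # hypothetical ternary weights
x = [0.5, 2.0, -1.5]          # hypothetical activations

result = ternary_matvec(W, x)
print(result, result == dense_matvec(W, x))
```

Both functions produce the same output for ternary weights, but the first never executes a multiplication, which is the property the efficiency claims depend on.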

Efficiency and Performance

A demo of BitNet b1.58 running at speed on an Apple M2 CPU.

By using a highly optimized kernel designed specifically for the BitNet architecture, the BitNet b1.58 model can also run multiple times faster than similar models running on a standard full-precision transformer. The system is efficient enough to reach “speeds comparable to human reading (5-7 tokens per second)” using a single CPU, the researchers write. You can download and run those optimized kernels yourself on a number of ARM and x86 CPUs, or try the model using a web demo.

Benchmark Performance

Crucially, the researchers say these improvements don’t come at the cost of performance on various benchmarks testing reasoning, math, and “knowledge” capabilities (although that claim has yet to be verified independently). Averaging the results on several common benchmarks, the researchers found that BitNet “achieves capabilities nearly on par with leading models in its size class while offering dramatically improved efficiency.”

Despite its smaller memory footprint, BitNet still performs similarly to “full precision” weighted models on many benchmarks.

Future Research and Development

Despite the apparent success of this “proof of concept” BitNet model, the researchers write that they don’t quite understand why the model works as well as it does with such simplified weighting. “Delving deeper into the theoretical underpinnings of why 1-bit training at scale is effective remains an open area,” they write. And more research is still needed to get these BitNet models to compete with the overall size and context window “memory” of today’s largest models.

Future Implications

Still, this new research shows a potential alternative approach for AI models that are facing spiraling hardware and energy costs from running on expensive and powerful GPUs. It’s possible that today’s “full precision” models are like muscle cars that are wasting a lot of energy and effort when the equivalent of a nice sub-compact could deliver similar results.

Conclusion

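In the researchers’ own tests, BitNet b1.58 performs nearly on par with similarly sized full-precision models on common benchmarks while using about 0.4GB of memory and an estimated 85 to 96 percent less energy. If those results hold up under independent verification, the approach could offer a more efficient and cost-effective alternative to traditional models.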

Frequently Asked Questions

Here are some frequently asked questions about the BitNet model:

- Q: What is the BitNet model?

- A: BitNet b1.58 is an AI language model whose internal weights are restricted to just three values (-1, 0, or 1), a simplified weighting system that reduces memory use and energy consumption.

- Q: How does the BitNet model work?

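- A: Because its weights are so simple, the model’s internal operations rely much more on addition than on computationally costly multiplication. A highly optimized kernel designed specifically for the architecture then lets it run quickly on a single CPU.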

- Q: What are the benefits of the BitNet model?

- A: The researchers report a memory footprint of about 0.4GB, an estimated 85 to 96 percent less energy use than similar full-precision models, and speeds of 5-7 tokens per second on a single CPU, with benchmark results nearly on par with leading models in its size class.