Introduction to Vision-Language Models

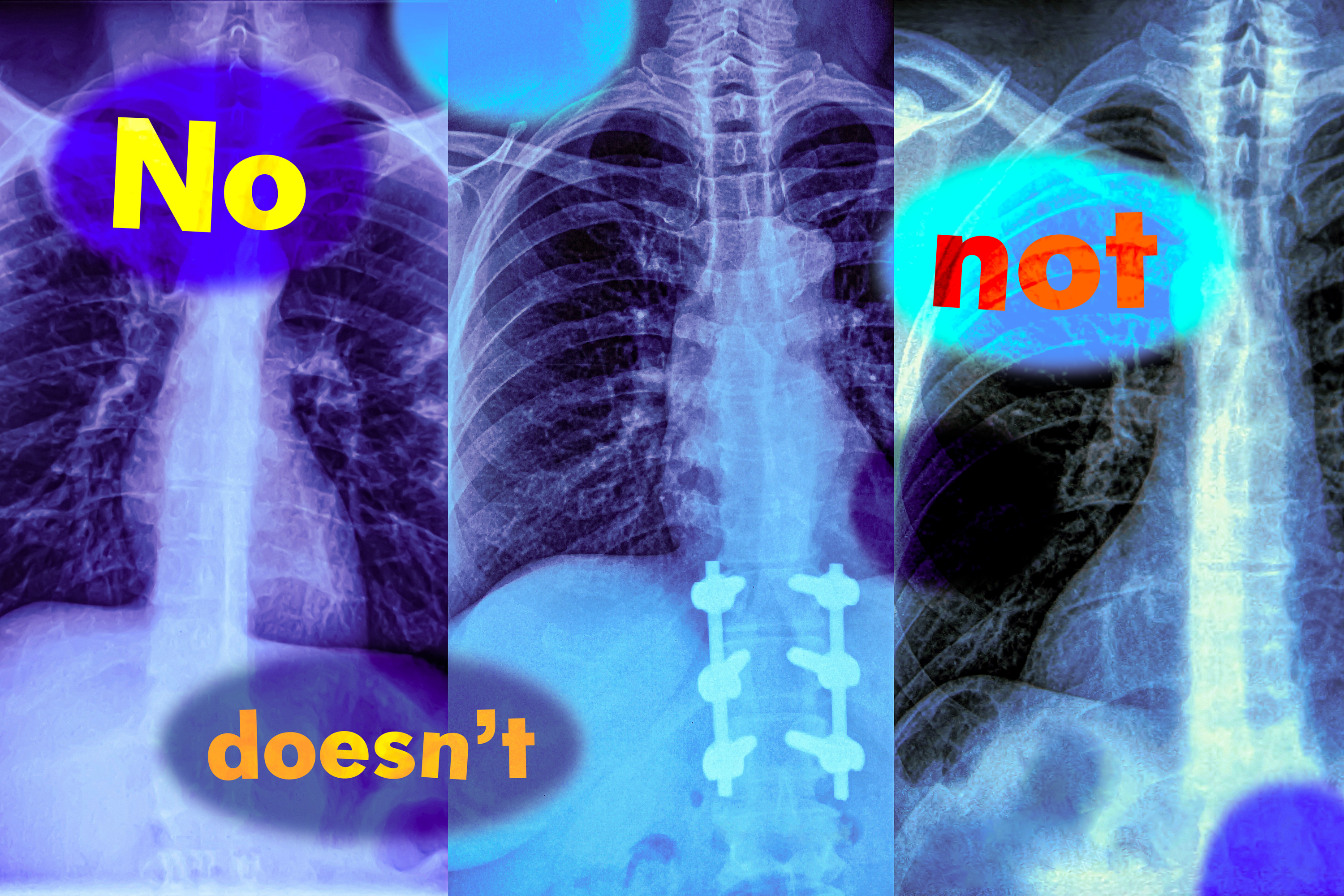

Imagine a radiologist examining a chest X-ray from a new patient. She notices that the patient has swelling in the tissue but does not have an enlarged heart. To speed up diagnosis, she might use a vision-language machine-learning model to search for reports from similar patients. However, these models have a significant flaw: they don’t understand negation, that is, words such as "no" and "doesn’t" that specify what is false or absent.

The Problem with Negation

In a new study, MIT researchers found that vision-language models are extremely likely to make mistakes of this kind in real-world situations because they don’t understand negation. In the radiologist’s case, if the model mistakenly retrieves reports of patients who have both conditions, the most likely diagnosis could be quite different: if a patient has tissue swelling and an enlarged heart, the condition is very likely to be cardiac related, but with no enlarged heart, there could be several underlying causes. "Those negation words can have a very significant impact, and if we are just using these models blindly, we may run into catastrophic consequences," says Kumail Alhamoud, an MIT graduate student and lead author of the study.

Testing Vision-Language Models

The researchers tested how well vision-language models identify negation in image captions. The models often performed no better than a random guess. Building on those findings, the team created a dataset of images paired with captions that use negation words to describe missing objects. They showed that retraining a vision-language model on this dataset improves performance when the model is asked to retrieve images that do not contain certain objects. It also boosts accuracy on multiple-choice question answering with negated captions.

Understanding Vision-Language Models

Vision-language models (VLMs) are trained on huge collections of images and corresponding captions, which they learn to encode as sets of numbers called vector representations. The models use these vectors to distinguish between different images. A VLM uses two separate encoders, one for text and one for images, and the encoders learn to output similar vectors for an image and its corresponding caption. However, because image-caption datasets don’t contain examples of negation, VLMs never learn to identify it.
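
The dual-encoder training described above is commonly driven by a contrastive objective. The minimal sketch below assumes a CLIP-style symmetric InfoNCE loss (the article does not name the loss these models use), with hand-written 2-D vectors standing in for encoder outputs and an assumed temperature of 0.07. The names `normalize`, `cosine`, `contrastive_loss` and `retrieve` are illustrative, not a library API.

```python
import math

# Assumption: a CLIP-style contrastive setup; real VLMs produce these vectors
# with learned image and text encoders rather than hand-written lists.


def normalize(vec):
    """Scale a vector to unit length (assumes it is not all zeros)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def cosine(a, b):
    """Cosine similarity: the dot product of the two unit-length vectors."""
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))


def _cross_entropy(logits, target):
    """-log softmax(logits)[target], computed stably via the max trick."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum_exp - logits[target]


def contrastive_loss(image_vecs, text_vecs, temperature=0.07):
    """Symmetric InfoNCE loss for a batch of matched (image, caption) pairs.

    image_vecs[i] and text_vecs[i] belong to the same pair; every other caption
    in the batch acts as a negative for image i, and every other image acts as
    a negative for caption i.
    """
    n = len(image_vecs)
    # sims[i][j]: temperature-scaled similarity of image i and caption j
    sims = [[cosine(img, txt) / temperature for txt in text_vecs] for img in image_vecs]
    image_to_text = sum(_cross_entropy(sims[i], i) for i in range(n)) / n
    text_to_image = sum(
        _cross_entropy([sims[i][j] for i in range(n)], j) for j in range(n)
    ) / n
    return (image_to_text + text_to_image) / 2


def retrieve(query_vec, image_vecs):
    """Index of the image whose embedding is most similar to the text query."""
    return max(range(len(image_vecs)), key=lambda i: cosine(query_vec, image_vecs[i]))
```

With toy vectors, `contrastive_loss([[1, 0], [0, 1]], [[1, 0], [0, 1]])` is close to zero, while swapping the two captions makes it large; training pushes the encoders toward the first situation. The loss only separates captions that actually appear in training batches, so if negated captions never appear, nothing in this objective pushes "a dog" and "no dog" apart.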

Neglecting Negation

The researchers designed two benchmark tasks to test the ability of VLMs to understand negation. For the first, they used a large language model (LLM) to re-caption images in an existing dataset, asking the LLM to think about related objects that are not in an image and write them into the caption. They then tested the models by prompting them with negation words to retrieve images that contain certain objects but not others. For the second, they designed multiple-choice questions that ask a VLM to select the most appropriate caption from a list of closely related options. The models often failed at both tasks, with image retrieval performance dropping by nearly 25 percent with negated captions.
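
The retrieval task can be illustrated with a toy version. This is a sketch under stated assumptions, not the study's benchmark: each image is represented only by the set of objects it contains, a query such as "a dog, but no cat" is assumed to be already parsed into `include` and `exclude` sets, and the two scorers are hypothetical stand-ins for a real model. Recall@k is a common retrieval metric chosen here for illustration; the article does not say which metric the researchers reported.

```python
def relevant_images(images, include, exclude):
    """IDs of images that contain every `include` object and no `exclude` object."""
    return {
        image_id
        for image_id, objects in images.items()
        if include <= objects and not (exclude & objects)
    }


def negation_blind_score(objects, include, exclude):
    """Hypothetical scorer mimicking a model that ignores "no": every object
    the query mentions counts as a match, whether negated or not."""
    return len((include | exclude) & objects)


def negation_aware_score(objects, include, exclude):
    """Hypothetical scorer that rewards requested objects and penalizes excluded ones."""
    return len(include & objects) - len(exclude & objects)


def recall_at_k(images, include, exclude, scorer, k=1):
    """Fraction of relevant images that appear among the top-k ranked results."""
    relevant = relevant_images(images, include, exclude)
    if not relevant:
        raise ValueError("query has no relevant images")
    ranked = sorted(images, key=lambda i: scorer(images[i], include, exclude), reverse=True)
    return len(relevant & set(ranked[:k])) / len(relevant)


# Toy collection: each image is described only by the objects it contains.
images = {
    "img_a": {"dog", "cat"},
    "img_b": {"dog", "couch"},
    "img_c": {"cat"},
}
# Query: "a dog, but no cat"
include, exclude = {"dog"}, {"cat"}
```

Here the negation-blind scorer ranks `img_a` (dog and cat) first because the query mentions both animals, so its recall@1 is 0.0, while the negation-aware scorer ranks `img_b` first and reaches 1.0.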

A Solvable Problem

Since VLMs aren’t typically trained on image captions with negation, the researchers developed datasets with negation words as a first step toward solving the problem. Starting from a dataset of 10 million image-caption pairs, they prompted an LLM to propose related captions that specify what is excluded from the images, yielding new captions with negation words. Fine-tuning VLMs on this dataset led to performance gains across the board: it improved the models’ image retrieval abilities by about 10 percent and boosted performance on the multiple-choice question answering task by about 30 percent.
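
The augmentation step can be sketched as follows. The researchers prompted an LLM to propose the excluded objects; this sketch swaps that step for a simple template and assumes a hand-supplied `related` list of plausible co-occurring objects, so `negated_captions`, `augment` and the caption template are hypothetical illustrations rather than the study's pipeline.

```python
import random


def negated_captions(caption, present, related, rng, max_new=2):
    """Template-based stand-in for the LLM step (hypothetical helper).

    `related` is a hand-supplied list of objects that plausibly co-occur with
    the scene. Any that are actually present are dropped, and up to `max_new`
    of the rest are written into the caption as explicitly absent.
    """
    absent = sorted(set(related) - set(present))  # sorted for reproducibility
    chosen = rng.sample(absent, k=min(max_new, len(absent)))
    base = caption.rstrip(".")
    return [f"{base}, with no {obj}." for obj in chosen]


def augment(dataset, rng):
    """Keep each original (image, caption) pair and add negated variants."""
    pairs = []
    for image_id, caption, present, related in dataset:
        pairs.append((image_id, caption))
        for new_caption in negated_captions(caption, present, related, rng):
            pairs.append((image_id, new_caption))
    return pairs


# Each entry: (image id, original caption, objects present, related objects).
dataset = [
    ("img_1", "A dog on a couch.", {"dog", "couch"}, ["cat", "dog", "ball"]),
]
pairs = augment(dataset, random.Random(0))
```

Because "dog" is present in the image, only "ball" and "cat" can appear in the negated captions; the resulting pairs would then be used to fine-tune the VLM alongside the original captions.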

Conclusion

The study highlights a significant flaw in vision-language models: they don’t understand negation. This flaw can have serious implications in high-stakes settings, such as healthcare and manufacturing. However, the researchers believe that this is a solvable problem and that their work can serve as a starting point for improving VLMs. They hope their research will encourage more users to think carefully about the problem they want a VLM to solve and to design a few test examples before deployment.
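
One hypothetical way to build such pre-deployment tests is to pair captions that differ only by a negation and check that the model prefers the true one. In this sketch, `score(objects, caption)` stands in for a wrapper around the VLM's image-text similarity; the two toy scorers below take the image's known objects instead of pixels and are assumptions for illustration only.

```python
def negation_probe(test_cases, score):
    """Fraction of probes where the true caption outscores its false twin.

    Each test case is (objects_in_image, present_obj, absent_obj). Ties count
    as failures: a model that cannot separate the two captions has not
    understood the negation.
    """
    passed = total = 0
    for objects, present, absent in test_cases:
        probes = [
            (f"a photo with a {present}", f"a photo with no {present}"),
            (f"a photo with no {absent}", f"a photo with a {absent}"),
        ]
        for true_caption, false_caption in probes:
            total += 1
            if score(objects, true_caption) > score(objects, false_caption):
                passed += 1
    return passed / total


def bag_of_words_score(objects, caption):
    """Toy negation-blind scorer: counts caption words that name objects in the image."""
    return float(len(set(caption.rstrip(".").split()) & objects))


def oracle_score(objects, caption):
    """Toy scorer that reads these probe templates correctly: 1.0 if true, else 0.0."""
    words = caption.rstrip(".").split()
    negated = words[-2] == "no"
    return 1.0 if (words[-1] in objects) != negated else 0.0


cases = [({"dog", "couch"}, "dog", "cat"), ({"cat"}, "cat", "dog")]
```

The bag-of-words scorer gives each caption pair the same score, so it passes none of the probes, while the oracle passes all of them; a real model would be plugged in through `score` and its pass rate compared against these extremes.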

FAQs

- Q: What is the problem with vision-language models?

  A: Vision-language models don’t understand negation, that is, words such as "no" and "doesn’t" that specify what is false or absent.

- Q: How did the researchers test vision-language models?

  A: The researchers tested how well vision-language models identify negation in image captions and found that the models often performed no better than a random guess.

- Q: Can the problem be solved?

  A: Yes. The researchers believe the problem is solvable and that their work can serve as a starting point for improving VLMs.

- Q: What are the implications of the study?

  A: The study highlights a significant flaw in vision-language models that can have serious implications in high-stakes settings, such as healthcare and manufacturing.

- Q: What can be done to improve vision-language models?

  A: The researchers suggest improving VLMs by training them on datasets that include negation words and by designing examples to test them before deployment.