Introduction to Graph Neural Networks

Graph Neural Networks (GNNs) apply neural networks directly to graph-structured data. Standard neural networks excel at data with a regular structure, such as images (grids of pixels) and text (sequences of tokens). Graphs, by contrast, represent data as nodes connected by edges and can capture irregular, intricate dependencies. GNNs exploit this structure by aggregating information from each node’s neighbors, learning node embeddings that encode both a node’s own features and its position within the graph topology.

How GNNs Work

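GNNs work by iteratively aggregating information from neighboring nodes through a message-passing mechanism. In each layer, every node collects messages (transformed feature vectors) from its neighbors, combines them with its own representation, and updates its embedding. Stacking k layers therefore lets information travel k hops across the graph. The resulting node embeddings can be used for node classification, graph classification, and other tasks. In the simplest variants, the update is a weighted sum of a node’s own features and those of its neighbors, followed by a learnable linear transformation and a nonlinear activation. Architectures differ mainly in how they choose the weights: GCNs use fixed, degree-based normalization, while GATs learn the weights with attention.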
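A minimal sketch of one message-passing layer, assuming plain PyTorch and the simplest aggregation (an unweighted sum in which every neighbor gets weight 1). The function name message_passing_layer and the toy three-node graph are illustrative only, not part of any library.

```python
import torch


def message_passing_layer(x, edge_index, weight):
    """One round of sum-aggregation message passing (illustrative sketch).

    x:          [num_nodes, in_features] node feature matrix
    edge_index: [2, num_edges] directed edges, row 0 = source, row 1 = target
    weight:     [in_features, out_features] learnable weight matrix
    """
    src, dst = edge_index
    # Start each node's aggregate with its own features (a self-loop).
    agg = x.clone()
    # Add each source node's features to its target node (sum aggregation).
    agg.index_add_(0, dst, x[src])
    # Shared linear transformation followed by a ReLU nonlinearity.
    return torch.relu(agg @ weight)


# Toy path graph 0 - 1 - 2; each undirected edge is stored in both directions.
edge_index = torch.tensor([[0, 1, 1, 2],
                           [1, 0, 2, 1]])
x = torch.tensor([[1.0], [2.0], [3.0]])
weight = torch.tensor([[1.0]])

out = message_passing_layer(x, edge_index, weight)
print(out.squeeze(1).tolist())  # [3.0, 6.0, 5.0]
```

Node 1 sums its own value (2) with both neighbors (1 and 3) to get 6, while the end nodes each add only one neighbor. GCN would additionally normalize by node degree, and GAT would replace the equal weights with learned attention scores.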

Data Analysis

To demonstrate the power of GNNs, we’ll use the Zachary’s Karate Club dataset, a classic graph dataset that represents a social network of 34 members. The dataset is widely used as a benchmark for graph analysis and serves as an excellent starting point for learning and experimenting with GNNs.

Graph Characteristics

The Zachary’s Karate Club dataset has the following characteristics:

- Number of nodes (members): 34

- Number of edges (social interactions): 78 undirected, unweighted edges, stored in PyTorch Geometric as 156 directed edges (one in each direction)

- Node features: Each node has 34 features, a one-hot encoding of the node’s identity (the 34 × 34 identity matrix).

- Node labels: Each node belongs to one of four communities, giving four classes for classification.

GNN Implementation

We’ll implement a Graph Attention Network (GAT) using the PyTorch Geometric library. A GAT layer uses an attention mechanism to learn how much weight each neighboring node’s features should receive when a node’s representation is updated, so the most relevant neighbors contribute most to the prediction.
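For reference, the single-head attention mechanism of the original GAT formulation can be written as follows. Here h_i is node i’s feature vector, W a shared weight matrix, a a learnable attention vector, ∥ denotes concatenation, N(i) is the set of neighbors of i, and σ is a nonlinearity. PyTorch Geometric’s GATConv adds self-loops by default, so each node also attends to itself.

```latex
e_{ij} = \mathrm{LeakyReLU}\!\left(\mathbf{a}^{\top}\left[\mathbf{W}\mathbf{h}_i \,\Vert\, \mathbf{W}\mathbf{h}_j\right]\right),
\qquad
\alpha_{ij} = \frac{\exp(e_{ij})}{\sum_{k \in \mathcal{N}(i) \cup \{i\}} \exp(e_{ik})},

\mathbf{h}_i' = \sigma\!\left(\sum_{j \in \mathcal{N}(i) \cup \{i\}} \alpha_{ij}\, \mathbf{W}\mathbf{h}_j\right)
```

The coefficients α_ij play the role of the weights in the weighted-sum update: instead of being fixed by node degree, they are learned from the features of both endpoints.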

Model Setup

We’ll set up the GAT model with the following parameters:

- Input channels: 34 (number of node features)

- Hidden channels: 5

- Output channels: 4 (number of node labels)

- Device: CUDA (if available) or CPU

Model Training and Results

We’ll train the GAT model using the Adam optimizer and the cross-entropy loss function. During training, the loss declines gradually while the accuracy increases, reaching a training accuracy of over 90%.

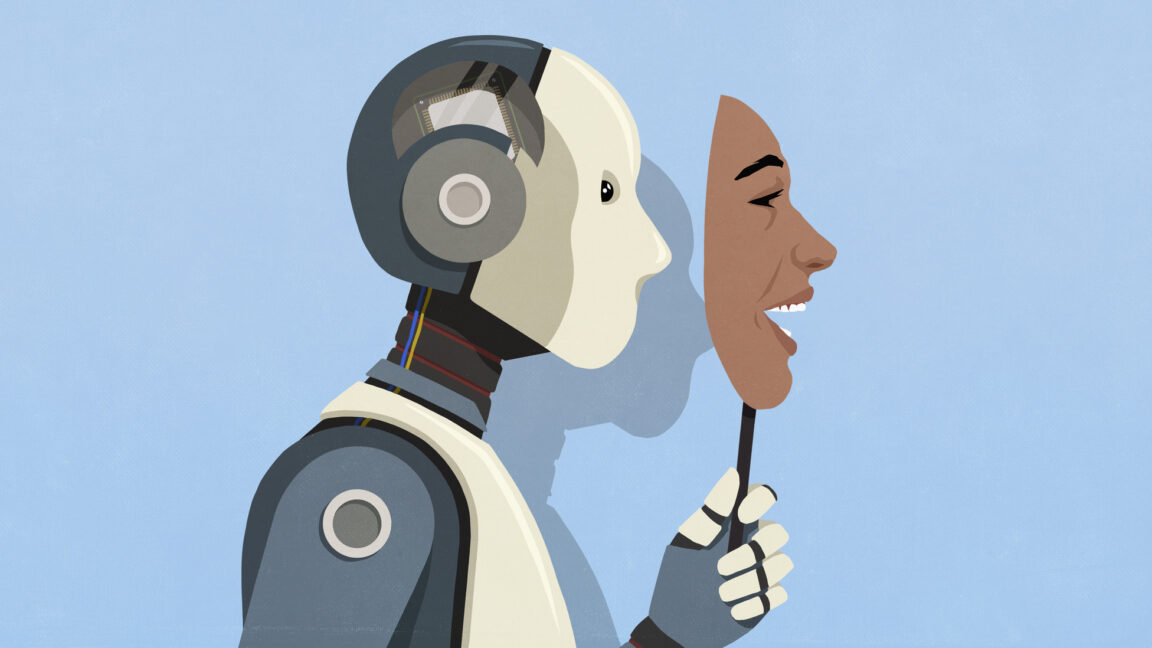

Explainable AI (XAI)

Explainable AI (XAI) is a set of techniques for explaining the predictions of machine learning models. For GNNs, XAI methods can reveal which neighboring nodes, edges, and node features influenced a particular prediction. We’ll use GNNExplainer, an explanation algorithm available in PyTorch Geometric, to explain the predictions of our GAT model.

Visualizing Explanations

GNNExplainer learns soft masks over the edges and node features that retain the information most relevant to the model’s prediction. These masks can be visualized to show the importance of each feature and neighboring node, making it easy to identify the features and nodes that most influenced the decision.

Why Do We Need XAI?

XAI is essential for building trust in machine learning models. It helps practitioners troubleshoot and debug models, makes model behavior interpretable to stakeholders, and supports audit and compliance requirements. XAI serves as a bridge between a GNN’s black-box output and a human’s need for understanding, ensuring that these powerful models can be used responsibly and effectively.

Conclusion

In this article, we’ve introduced Graph Neural Networks (GNNs) and explained how they work. We’ve also demonstrated the power of GNNs using the Zachary’s Karate Club dataset and implemented a Graph Attention Network (GAT) using the PyTorch Geometric library. Finally, we’ve discussed the importance of Explainable AI (XAI) in building trust and interpretability in machine learning models.

FAQs

- What is a Graph Neural Network (GNN)?

A GNN is a type of neural network that operates directly on graph-structured data, learning node (or whole-graph) representations by aggregating information from each node’s neighbors.

- What is the message-passing mechanism in GNNs?

Message passing is the process by which each node iteratively gathers and aggregates information from its neighboring nodes to update its own representation.

- What is Explainable AI (XAI)?

XAI is a set of techniques used to explain the predictions of machine learning models.

- Why is XAI important in GNNs?

XAI helps build trust in GNNs, makes their decisions interpretable, and helps troubleshoot or debug the model.

- Can GNNs be used for other tasks besides node classification?

Yes, GNNs can be used for other tasks such as graph classification, link prediction, and graph generation.