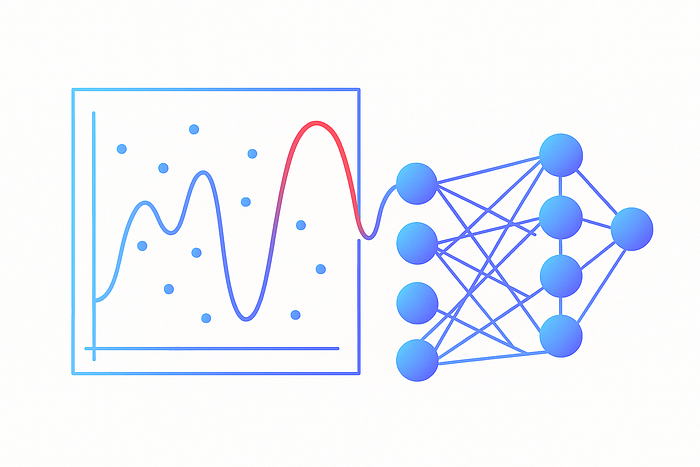

Introduction to Overfitting

Overfitting is when a neural network (or any ML model) learns the noise and idiosyncrasies of its training dataset rather than the underlying patterns. It performs very well on the training data but fails to generalize to unseen data. Think of it as overspecialization: the model becomes a parrot that repeats what it memorized rather than a thinker that understands.

Understanding Overfitting with an Analogy

Imagine a student studying for a math exam:

- A good student learns the underlying formulas and concepts (generalization). They can solve problems they’ve never seen before.

- An overfitting student memorizes the exact answers to every question in the textbook (memorization). When given a new, slightly different problem on the exam, they fail completely because it doesn’t match what they memorized.
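To make the analogy concrete, here is a minimal sketch in plain Python (standard library only). It assumes a toy dataset drawn from y = 2x + 1 plus Gaussian noise, and all function names are hypothetical. The "memorizing student" is a 1-nearest-neighbor lookup that answers with the label of the closest training point. The "good student" fits a straight line by least squares.

```python
import random

def make_data(n, rng, noise=1.0):
    """Toy data: y = 2x + 1 plus Gaussian noise (an illustrative assumption)."""
    xs = [rng.uniform(0.0, 10.0) for _ in range(n)]
    ys = [2.0 * x + 1.0 + rng.gauss(0.0, noise) for x in xs]
    return xs, ys

def memorizer_predict(train_x, train_y, x):
    """'Memorizing student': answer with the label of the closest training input."""
    i = min(range(len(train_x)), key=lambda j: abs(train_x[j] - x))
    return train_y[i]

def fit_line(xs, ys):
    """'Good student': ordinary least-squares line y = a*x + b."""
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    a = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return a, my - a * mx

def mse(preds, ys):
    """Mean squared error between predictions and targets."""
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(ys)

rng = random.Random(0)
train_x, train_y = make_data(50, rng)
test_x, test_y = make_data(200, rng)

a, b = fit_line(train_x, train_y)
results = {
    "memorizer": (
        mse([memorizer_predict(train_x, train_y, x) for x in train_x], train_y),
        mse([memorizer_predict(train_x, train_y, x) for x in test_x], test_y),
    ),
    "line": (
        mse([a * x + b for x in train_x], train_y),
        mse([a * x + b for x in test_x], test_y),
    ),
}
for name, (train_err, test_err) in results.items():
    print(f"{name:9s} train MSE={train_err:.3f} test MSE={test_err:.3f}")
```

The lookup scores a perfect 0.0 training MSE, because every training point is its own nearest neighbor. Its test error should nonetheless come out roughly twice the line's, since it copies the noise of whichever training point it returns. The exact numbers depend on the random seed.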

Why Does Overfitting Happen?

Overfitting has several common causes:

- Excessive Model Complexity: Deep/wide networks with millions of parameters have enormous capacity. They can memorize the training data completely, including outliers.

- Insufficient or Imbalanced Data: Small datasets make it trivial for a large model to memorize. Class imbalance can worsen this: the model may “memorize” the dominant class.

- Excessive Training (Too Many Epochs): After the generalizable structure is learned, the model keeps chasing smaller loss values by fitting noise.

- Noisy or Irrelevant Features: Spurious correlations, mislabeled data, or irrelevant columns mislead the network into learning non-generalizable rules.
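Parameter count is only a rough proxy for the capacity described under Excessive Model Complexity, but it shows how quickly capacity grows. The sketch below counts weights plus biases in a fully connected network. The layer sizes (784 flattened pixel inputs from a 28 by 28 image, 10 output classes) and the 1,000-example dataset are illustrative assumptions, not figures from any specific model.

```python
def dense_param_count(layer_sizes):
    """Weights (n_in * n_out) plus biases (n_out) for each fully connected layer."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

n_train_examples = 1_000  # hypothetical small dataset
for sizes in ([784, 64, 10], [784, 512, 512, 10]):
    params = dense_param_count(sizes)
    print(f"{sizes}: {params:,} parameters, "
          f"{params / n_train_examples:.0f} per training example")
```

With these sizes the small network has 50,890 parameters and the larger one 669,706. Against a hypothetical 1,000 examples, the larger model has hundreds of parameters per example, which leaves ample room to memorize.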

Symptoms of Overfitting

The symptoms of overfitting include:

- Training accuracy climbs → nearly perfect.

- Validation/test accuracy stalls or declines.

- Training loss keeps decreasing while validation loss turns upward, so the two curves diverge.

- Model confidence is high on training examples, but erratic on unseen samples.
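The loss-curve symptoms above can be checked mechanically. This is a heuristic sketch, assuming you record one training loss and one validation loss per epoch. The function name, the `window` parameter, and the loss values are made up for illustration and are not results from a real model.

```python
def overfitting_report(train_loss, val_loss, window=3):
    """Summarize train/validation divergence.

    Flags overfitting when, over the last `window` epochs, training loss kept
    falling while validation loss kept rising (a heuristic, not a formal test).
    """
    best_epoch = min(range(len(val_loss)), key=val_loss.__getitem__)
    recent_train = train_loss[-window - 1:]
    recent_val = val_loss[-window - 1:]
    train_falling = all(b < a for a, b in zip(recent_train, recent_train[1:]))
    val_rising = all(b > a for a, b in zip(recent_val, recent_val[1:]))
    return {
        "best_epoch": best_epoch,                   # 0-based epoch with lowest val loss
        "final_gap": val_loss[-1] - train_loss[-1],  # generalization gap at the end
        "overfitting": train_falling and val_rising,
    }

# Illustrative (made-up) loss curves.
train = [1.00, 0.70, 0.50, 0.38, 0.30, 0.24, 0.19, 0.15]
val = [1.05, 0.78, 0.62, 0.58, 0.60, 0.63, 0.67, 0.72]
print(overfitting_report(train, val))
```

For these curves the validation minimum sits at epoch index 3 (0-based), and over the last three epochs training loss falls while validation loss rises, so the report flags overfitting.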

Methods to Fix Overfitting

There are several methods to fix overfitting:

Data-Centric Approaches

- Collect More Data: Bigger, more diverse datasets dilute noise.

- Data Augmentation: Create new examples by applying label-preserving transformations (rotation, noise injection, synonym replacement). This forces robustness to variations.
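Two of the transformations named above, noise injection and synonym replacement, can be sketched in a few lines of standard-library Python. The synonym table, noise scale, and function names are hypothetical; real pipelines would draw on a thesaurus, embeddings, or a framework's transform utilities. The key property is that each variant keeps its original label.

```python
import random

SYNONYMS = {  # toy synonym table (hypothetical)
    "quick": ["fast", "speedy"],
    "happy": ["glad", "cheerful"],
    "big": ["large", "huge"],
}

def add_noise(features, rng, sigma=0.05):
    """Noise injection: jitter each numeric feature slightly."""
    return [x + rng.gauss(0.0, sigma) for x in features]

def synonym_replace(sentence, rng, p=0.5):
    """Synonym replacement: swap each known word with probability p."""
    words = sentence.split()
    return " ".join(
        rng.choice(SYNONYMS[w]) if w in SYNONYMS and rng.random() < p else w
        for w in words
    )

def augment(dataset, copies, rng):
    """Return the original (features, label) pairs plus `copies` noisy variants of each."""
    out = list(dataset)
    for features, label in dataset:
        for _ in range(copies):
            out.append((add_noise(features, rng), label))  # label is preserved
    return out

rng = random.Random(42)
data = [([0.2, 0.8], 1), ([0.9, 0.1], 0)]
augmented = augment(data, copies=3, rng=rng)
print(len(augmented))  # 2 originals + 2 * 3 variants = 8
print(synonym_replace("the quick dog looks happy", rng))
```

Note that the noise scale matters. If it is large enough to change what an example "means", the transformation is no longer label-preserving and adds label noise instead of robustness.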

Model-Centric Approaches

- Simplify the Architecture: Reduce layers/neurons → constrain capacity.

- Regularization:

  - L1 (Lasso): Penalizes the absolute values of the weights, shrinking them and pushing many toward zero, which encourages sparsity.

  - L2 (Ridge / Weight Decay): Penalizes the squared weights, which prevents excessively large weights.

- Dropout: Randomly deactivates neurons during training → prevents co-adaptation.

- Batch Normalization: Adds stability, with slight regularization through mini-batch noise.
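The regularizers above reduce to a few lines of arithmetic. Below is a framework-free sketch, assuming weights and activations are plain Python lists. The function names are hypothetical, and the 0.5 factor in the L2 term is one common convention (others omit it and rescale lambda). Dropout is shown in its "inverted" form, which rescales during training so inference needs no change.

```python
import random

def l1_penalty(weights, lam):
    """L1 (Lasso-style) term: lam * sum(|w|). Its constant pull toward zero
    tends to push many weights to (or near) zero, i.e. sparsity."""
    return lam * sum(abs(w) for w in weights)

def l2_penalty(weights, lam):
    """L2 (ridge-style) term: 0.5 * lam * sum(w^2). The 0.5 makes its gradient exactly lam * w."""
    return 0.5 * lam * sum(w * w for w in weights)

def sgd_step_weight_decay(weights, grads, lr, lam):
    """One plain SGD step on (data loss + l2_penalty): each weight is also shrunk by lr * lam * w."""
    return [w - lr * (g + lam * w) for w, g in zip(weights, grads)]

def inverted_dropout(activations, p, rng, training=True):
    """Zero each activation with probability p while training and scale the survivors
    by 1 / (1 - p) so the expected activation is unchanged; identity at inference."""
    if not training or p == 0.0:
        return list(activations)
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

# Usage with made-up numbers:
rng = random.Random(0)
weights = [0.5, -1.5, 2.0]
data_loss = 0.30  # hypothetical value from a forward pass
total_loss = data_loss + l2_penalty(weights, lam=0.01)
hidden = inverted_dropout([0.2, 0.4, 0.6, 0.8], p=0.5, rng=rng)
print(total_loss, hidden)
```

Because each training step sees a different random mask, no single neuron can rely on a specific partner always being present. That is the co-adaptation the dropout bullet refers to.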

Training-Centric Approaches

- Early Stopping: Stop training when validation loss no longer improves → “freeze” the model at its sweet spot.

- Cross-Validation: Checks that model performance is consistent across several different train/validation splits, so a model that only does well on one lucky split is exposed.

- Learning Rate Scheduling: Reduces the step size progressively, so late-stage updates make small, stable adjustments instead of large jumps that chase noise in individual mini-batches.
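The three training-centric ideas can each be sketched in plain Python. This is a generic illustration under stated assumptions, not any framework's callback or scheduler API. The `EarlyStopping` class, `k_fold_indices` (which skips shuffling for brevity), and `step_decay_lr` are hypothetical names, and step decay is just one common schedule chosen here as an example. The validation losses are made up.

```python
import math

class EarlyStopping:
    """Stop when validation loss has not improved by more than min_delta for `patience` epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = math.inf
        self.best_epoch = -1
        self.bad_epochs = 0

    def step(self, epoch, val_loss):
        """Record one epoch's validation loss; return True when training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss, self.best_epoch, self.bad_epochs = val_loss, epoch, 0
            # A real training loop would also save a copy of the model weights here.
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


def k_fold_indices(n, k):
    """Yield (train_idx, val_idx) pairs so each example is used for validation exactly once.
    Assumes the data is already shuffled; in practice, shuffle first."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, val
        start += size


def step_decay_lr(base_lr, epoch, drop=0.5, every=10):
    """Step schedule: multiply the learning rate by `drop` every `every` epochs."""
    return base_lr * drop ** (epoch // every)


# Hypothetical validation losses, for illustration only.
val_losses = [0.90, 0.70, 0.62, 0.60, 0.61, 0.63, 0.64, 0.66]
stopper = EarlyStopping(patience=3)
for epoch, loss in enumerate(val_losses):
    if stopper.step(epoch, loss):
        print(f"stop at epoch {epoch}; best epoch {stopper.best_epoch} (val loss {stopper.best_loss})")
        break
```

With patience 3, training stops at epoch 6 and the best model is the one from epoch 3, the "sweet spot" the early-stopping bullet describes.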

A Practical Anti-Overfitting Recipe

To prevent overfitting, follow these steps:

- Always hold out validation/test sets.

- Use augmentation (images/text/audio) aggressively.

- Start small → increase model size only if underfitting.

- Use dropout and L2 regularization as defaults.

- Enable an early-stopping callback.

- Iterate systematically: change one thing at a time and compare validation metrics instead of tweaking blindly.
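The first step of the recipe, holding out validation and test sets, is easy to get subtly wrong, for example by shuffling twice or letting the sets overlap. Below is a minimal standard-library sketch. The 70/15/15 proportions and the function name are assumptions for illustration.

```python
import random

def train_val_test_split(examples, val_frac=0.15, test_frac=0.15, seed=0):
    """Shuffle once, then carve off disjoint validation and test sets.

    The validation set drives choices such as dropout rate, L2 strength, and
    early stopping; the test set should be evaluated only once, at the end.
    """
    items = list(examples)
    random.Random(seed).shuffle(items)
    n_test = round(len(items) * test_frac)
    n_val = round(len(items) * val_frac)
    test = items[:n_test]
    val = items[n_test:n_test + n_val]
    train = items[n_test + n_val:]
    return train, val, test

train, val, test = train_val_test_split(range(100))
print(len(train), len(val), len(test))  # 70 15 15
```

Fixing the seed keeps the split identical across experiments, so a change in validation score reflects the change you made to the model rather than a different split.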

Conclusion

Overfitting is one of the most common challenges in training neural networks, but it is also one of the most preventable. By recognizing the early warning signs, such as the widening gap between training and validation performance, you can intervene before your model becomes a memorization machine. The key lies in balance: building models that are powerful enough to capture the true patterns in data but disciplined enough to ignore the noise.

FAQs

Q: What is overfitting in machine learning?

A: Overfitting occurs when a model is too complex relative to its training data and learns the noise in that data rather than the underlying patterns.

Q: How can I prevent overfitting?

A: You can prevent overfitting by using techniques such as data augmentation, regularization, dropout, and early stopping.

Q: What are the symptoms of overfitting?

A: The symptoms of overfitting include high training accuracy but low validation/test accuracy, and a widening gap between training and validation loss.

Q: How can I fix overfitting?

A: You can fix overfitting by simplifying the model architecture, using regularization techniques, and stopping training when validation loss no longer improves.

Q: What is the importance of data augmentation in preventing overfitting?

A: Data augmentation helps to prevent overfitting by creating new examples that force the model to be robust to variations, rather than memorizing the training data.