Introduction to Large Language Models

Large Language Models (LLMs) like GPT, LLaMA, and Falcon have revolutionized the way machines understand and generate human-like text. These models learn from vast amounts of data and can produce coherent, natural-sounding text. However, to perform well on specialized tasks, they usually need to be fine-tuned for those tasks.

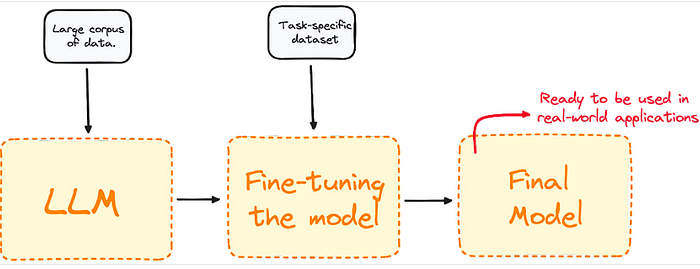

What is Fine-Tuning?

Fine-tuning is the process of adapting a pre-trained model to a specialized task by training it further on a smaller, task- or domain-specific dataset. It bridges the gap between a general-purpose model and a domain-specific one, enabling customized responses, better contextual understanding, and more relevant output in specialized use cases such as medical diagnosis, legal writing, or research analysis.
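
A toy example makes the idea concrete. The sketch below is not an LLM: it uses a one-feature linear model whose "pre-trained" weights, domain dataset, learning rate, and epoch count are all hypothetical. It shows the essential mechanic of fine-tuning, namely that training resumes from existing weights rather than from a random start, and that a few passes over task-specific data move those weights toward the new pattern.

```python
# Toy illustration of fine-tuning with a linear model y = w * x + b.
# The "pre-trained" weights and the domain dataset are hypothetical.


def mse(w, b, data):
    """Mean squared error of the model on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def fine_tune(w, b, data, lr=0.05, epochs=200):
    """Continue gradient descent from existing weights (w, b) on new data."""
    n = len(data)
    for _ in range(epochs):
        # Gradients of the MSE loss with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Weights assumed to come from earlier, general-purpose training.
pretrained_w, pretrained_b = 2.0, 0.0

# A small task-specific dataset following a slightly different pattern (y = 2.5x + 1).
domain_data = [(0.0, 1.0), (1.0, 3.5), (2.0, 6.0), (3.0, 8.5)]

before = mse(pretrained_w, pretrained_b, domain_data)
tuned_w, tuned_b = fine_tune(pretrained_w, pretrained_b, domain_data)
after = mse(tuned_w, tuned_b, domain_data)
print(f"loss before: {before:.3f}, after: {after:.5f}")
```

Real LLM fine-tuning applies the same principle to billions of parameters, typically with a smaller learning rate than pre-training so the model retains what it already learned.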

The Importance of Fine-Tuning

Fine-tuning is crucial for LLMs because it lets them learn from task-specific datasets, picking up the vocabulary, format, and conventions of a particular domain. This typically yields more accurate and relevant outputs, making the models more useful in real-world applications. For instance, a model fine-tuned on medical texts can help analyze clinical notes in support of diagnosis, and one fine-tuned on legal documents can draft text tailored to specific cases.

Preparing for Fine-Tuning

Before fine-tuning an LLM, it is essential to prepare a suitable dataset and environment. This includes collecting and preprocessing the data (cleaning it, removing duplicates, formatting each example into the input-output structure the task requires, and holding out a validation set), provisioning sufficient computational resources such as GPUs with enough memory, and selecting an appropriate fine-tuning technique. The quality of this preparation has a large effect on the performance of the fine-tuned model.
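
The preprocessing steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions: the raw data is a list of (question, answer) pairs, the `Question:`/`Answer:` prompt template is a hypothetical format chosen for the example, and the 20% validation split is an arbitrary choice.

```python
import random


def prepare_dataset(raw_records, val_fraction=0.2, seed=0):
    """Clean, deduplicate, format and split raw (question, answer) records.

    Returns (train, validation) lists of {"prompt": ..., "completion": ...} dicts.
    """
    seen = set()
    examples = []
    for question, answer in raw_records:
        # Normalize whitespace so near-identical strings compare equal.
        q = " ".join(question.split())
        a = " ".join(answer.split())
        # Drop records with an empty field.
        if not q or not a:
            continue
        # Drop exact duplicates after normalization.
        if (q, a) in seen:
            continue
        seen.add((q, a))
        # Hypothetical prompt template; use whatever format your task requires.
        examples.append({"prompt": f"Question: {q}\nAnswer:", "completion": f" {a}"})

    # Shuffle deterministically, then hold out a validation split.
    rng = random.Random(seed)
    rng.shuffle(examples)
    n_val = max(1, int(len(examples) * val_fraction)) if examples else 0
    return examples[n_val:], examples[:n_val]


raw = [
    ("What is a contract?", "A legally binding agreement."),
    ("What is  a contract? ", "A legally binding agreement."),  # duplicate after cleanup
    ("Define tort.", ""),  # missing answer, dropped
    ("What is a statute?", "A written law passed by a legislature."),
    ("What is case law?", "Law established by judicial decisions."),
    ("What is a plaintiff?", "The party who brings a lawsuit."),
    ("What is a defendant?", "The party being sued or accused."),
]
train, val = prepare_dataset(raw)
print(len(train), "training examples,", len(val), "validation examples")
```

Fixing the shuffle seed keeps the split identical across runs, so validation scores from different fine-tuning attempts stay comparable.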

Practical Code Framework for Fine-Tuning

Implementing fine-tuning in practice means writing code that loads a pre-trained model and its tokenizer, converts the dataset into batches of token IDs, runs the training loop, and saves checkpoints. Libraries such as Hugging Face’s Transformers handle much of this work: they provide pre-trained models, tokenizers, data collators, and a `Trainer` class that manages the training loop. A well-structured code framework built on such tools helps to streamline the process.
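
One job such a framework performs is turning tokenized examples of different lengths into rectangular batches. Transformers ships data collators for this step (for example `DataCollatorForLanguageModeling`); the pure-Python sketch below only approximates what such a step does, and its function names `collate` and `batches`, the token IDs, the pad ID of 0, and right-side padding are hypothetical choices. Padded label positions are set to -100 because PyTorch’s `CrossEntropyLoss` ignores that index by default.

```python
# Hypothetical pad token id; real tokenizers define their own.
PAD_ID = 0
# Label value skipped by the loss: PyTorch's CrossEntropyLoss uses
# ignore_index=-100 by default, and Hugging Face models follow this convention.
IGNORE_INDEX = -100


def collate(batch, pad_id=PAD_ID):
    """Right-pad variable-length token-id lists into a rectangular batch.

    Returns input_ids, attention_mask (1 = real token, 0 = padding) and labels.
    For causal language modeling, Hugging Face models shift labels internally,
    so labels can mirror input_ids, with padding replaced by IGNORE_INDEX.
    """
    max_len = max(len(seq) for seq in batch)
    input_ids, attention_mask, labels = [], [], []
    for seq in batch:
        pad = max_len - len(seq)
        input_ids.append(seq + [pad_id] * pad)
        attention_mask.append([1] * len(seq) + [0] * pad)
        labels.append(seq + [IGNORE_INDEX] * pad)
    return {"input_ids": input_ids, "attention_mask": attention_mask, "labels": labels}


def batches(examples, batch_size):
    """Yield consecutive collated batches from a list of token-id lists."""
    for start in range(0, len(examples), batch_size):
        yield collate(examples[start:start + batch_size])


# Hypothetical token ids for three short examples.
tokenized = [[1, 7, 8, 2], [1, 9, 2], [1, 4, 5, 6, 2]]
for batch in batches(tokenized, batch_size=2):
    print(batch["input_ids"], batch["attention_mask"])
```

Padding each batch only to its own longest sequence, rather than to a global maximum, avoids wasting computation on padding tokens.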

Parameter-Efficient Fine-Tuning Techniques

Parameter-efficient fine-tuning (PEFT) techniques adapt a pre-trained model to a new task by training only a small number of parameters while keeping most or all of the original weights frozen. This greatly reduces training memory and per-task storage compared with updating every weight. Examples include adapter-based fine-tuning, which inserts small trainable adapter modules into the pre-trained model, and prompt tuning, which learns a small set of trainable prompt embeddings that are prepended to the input (unlike hand-written prompts, which change no parameters at all).
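
An adapter is typically a small bottleneck network with a residual connection: it projects the hidden state down to a few dimensions, applies a nonlinearity, projects back up, and adds the result to the input. The sketch below assumes that design with a ReLU and no biases, zero-initializes the up-projection (one common choice) so the adapter starts as an exact identity, and uses a hypothetical hidden size of 4096 and bottleneck of 64 to show why the approach is parameter-efficient. The class name `BottleneckAdapter` is illustrative, not a library API.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


class BottleneckAdapter:
    """Sketch of an adapter: h + W_up · relu(W_down · h).

    Only W_down and W_up would be trained; the surrounding pre-trained layer stays frozen.
    """

    def __init__(self, hidden_size, bottleneck, seed=0):
        rng = random.Random(seed)
        # Down-projection: hidden_size -> bottleneck (small random init).
        self.w_down = [[rng.gauss(0.0, 0.02) for _ in range(hidden_size)]
                       for _ in range(bottleneck)]
        # Up-projection: bottleneck -> hidden_size, zero-initialized so the
        # adapter initially leaves the pre-trained model's output unchanged.
        self.w_up = [[0.0] * bottleneck for _ in range(hidden_size)]

    def __call__(self, h):
        z = [max(0.0, v) for v in matvec(self.w_down, h)]  # ReLU
        delta = matvec(self.w_up, z)
        return [hv + dv for hv, dv in zip(h, delta)]  # residual connection

    def num_trainable(self):
        return sum(len(r) for r in self.w_down) + sum(len(r) for r in self.w_up)


adapter = BottleneckAdapter(hidden_size=8, bottleneck=2)
h = [0.5, -1.0, 2.0, 0.0, 1.5, -0.5, 0.25, 3.0]
print(adapter(h) == h)  # True: zero-initialized up-projection gives the identity

# Parameter count at assumed sizes: hidden 4096, bottleneck 64 (biases omitted).
d, r = 4096, 64
adapter_params = 2 * d * r   # W_down (r x d) + W_up (d x r)
full_layer_params = d * d    # one dense d x d weight matrix
print(f"adapter: {adapter_params:,} vs dense layer: {full_layer_params:,} "
      f"({adapter_params / full_layer_params:.1%})")
```

At those assumed sizes the adapter holds about 3% as many weights as a single dense layer of the same width, and only those weights receive gradient updates during fine-tuning.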

Evaluating Model Performance

Evaluating a fine-tuned LLM is critical to confirming that it behaves as intended. This includes measuring metrics such as accuracy, precision, and recall on held-out data that was not used during fine-tuning. Beyond these scores, it is also important to check whether the model’s confidence matches how often it is actually right, so that it can signal uncertainty, and to probe for potential biases, so that it is fair and reliable.
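
The three metrics named above can be computed directly from counts of correct and incorrect predictions. The sketch assumes a binary classification task with hypothetical held-out labels and predictions, where 1 marks a hypothetical "liability clause" class; the function name `classification_metrics` is illustrative.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision and recall for a binary labelling task."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if p == positive and t == positive)
    fp = sum(1 for t, p in pairs if p == positive and t != positive)
    fn = sum(1 for t, p in pairs if p != positive and t == positive)
    correct = sum(1 for t, p in pairs if t == p)
    accuracy = correct / len(pairs)
    # Precision: of the items predicted positive, how many really are positive.
    precision = tp / (tp + fp) if tp + fp else 0.0
    # Recall: of the truly positive items, how many the model found.
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall}


# Hypothetical held-out labels vs. fine-tuned model predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 1, 1, 0]
print(classification_metrics(y_true, y_pred))
```

Precision and recall often pull in different directions: predicting the positive class more often tends to raise recall at the cost of precision, which is why both are usually reported alongside accuracy.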

Ethical Considerations of AI

The development and deployment of LLMs raise important ethical considerations. As these models become more pervasive and influential, it is essential to ensure that they are designed and used responsibly. This includes prioritizing transparency, accountability, and fairness, and ensuring that the models signal uncertainty instead of presenting guesses as fact.

Conclusion

Fine-tuning large language models is a critical step in unlocking their full potential. By adapting these models to specific tasks and datasets, we can create more accurate, relevant, and useful outputs. However, it is also essential to consider the ethical implications of AI and ensure that these models are designed and used responsibly.

FAQs

What is fine-tuning in the context of LLMs?

Fine-tuning is the process of adapting a pre-trained LLM to perform specialized tasks.

Why is fine-tuning important?

Fine-tuning is important because it allows LLMs to learn from specific datasets and adapt to particular tasks, resulting in more accurate and relevant outputs.

What is required for successful fine-tuning?

Successful fine-tuning requires a well-prepared dataset, a suitable environment, and a practical code framework.

What are parameter-efficient fine-tuning techniques?

Parameter-efficient fine-tuning techniques adapt a pre-trained model to a new task by training only a small number of added or selected parameters while keeping most of the original weights frozen.

Why is evaluating model performance important?

Evaluating model performance is important to ensure that the fine-tuned LLM is functioning as intended and to identify potential biases or areas for improvement.