Understanding Transformers and Multi-Head Attention

Transformers have become a crucial part of many AI breakthroughs in areas such as natural language processing (NLP), vision, and speech. A key component of transformers is multi-head self-attention, which allows a model to use several “attention lenses” to look at different aspects of the input.

What is Multi-Head Attention?

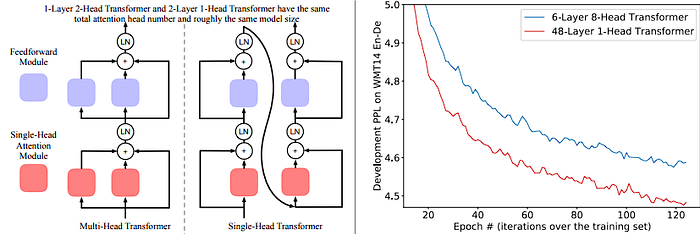

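Multi-head attention runs several attention mechanisms, called heads, in parallel over the same input. Each head applies its own learned linear projections to produce queries, keys, and values in a smaller subspace (typically of dimension d_model/h for h heads). It computes scaled dot-product attention in that subspace, and the heads' outputs are then concatenated and passed through a final linear projection. A single head, by contrast, produces just one set of attention weights per token, so it has to blend every relationship it tracks into one weighted average. With several heads, each can specialize in a different pattern, which lets the model capture a wider range of relationships in the data.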
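The mechanism described above can be sketched in plain Python (lists of lists, no external libraries). This is a minimal illustrative sketch, not a production implementation. It assumes d_k = d_model / h for every head and omits bias terms, masking, and batching. It also slices one full-width projection into per-head column blocks, which is equivalent to giving each head its own smaller projection matrix. The helper names (`matmul`, `multi_head_attention`, and so on) are hypothetical, and the random weights stand in for learned ones.

```python
import math
import random


def matmul(a, b):
    """Multiply an (n x m) matrix by an (m x p) matrix, both given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a):
    return [list(row) for row in zip(*a)]


def softmax_rows(a):
    """Row-wise softmax, subtracting each row's max for numerical stability."""
    out = []
    for row in a:
        m = max(row)
        exps = [math.exp(x - m) for x in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def scaled_dot_product_attention(q, k, v):
    """softmax(q k^T / sqrt(d_k)) v; returns the output and the attention weights."""
    d_k = len(q[0])
    scores = matmul(q, transpose(k))
    weights = softmax_rows([[s / math.sqrt(d_k) for s in row] for row in scores])
    return matmul(weights, v), weights


def column_block(a, start, stop):
    return [row[start:stop] for row in a]


def multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads):
    d_model = len(x[0])
    if d_model % num_heads != 0:
        raise ValueError("d_model must be divisible by num_heads")
    d_k = d_model // num_heads
    # Project once with full-width matrices; column block i acts as head i's own projection.
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    head_outputs, head_weights = [], []
    for h in range(num_heads):
        lo, hi = h * d_k, (h + 1) * d_k
        out, weights = scaled_dot_product_attention(
            column_block(q, lo, hi), column_block(k, lo, hi), column_block(v, lo, hi))
        head_outputs.append(out)
        head_weights.append(weights)
    # Concatenate the heads' outputs token by token, then apply the output projection.
    concat = [[value for head in head_outputs for value in head[t]] for t in range(len(x))]
    return matmul(concat, w_o), head_weights


def random_matrix(rows, cols):
    return [[random.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]


random.seed(0)
d_model, seq_len, num_heads = 8, 4, 2
x = random_matrix(seq_len, d_model)
w_q, w_k, w_v, w_o = (random_matrix(d_model, d_model) for _ in range(4))
out, weights = multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads)
print(len(out), len(out[0]), len(weights))  # 4 8 2
```

Each entry of `weights` is one head's own attention map, so the heads can, in principle, attend to different tokens for the same query.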

Benefits of Multi-Head Attention

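Multi-head attention lets the model attend to several kinds of relationships at once, which helps it capture complex patterns that a single head would have to average together. It does this without a large increase in cost. Because each of the h heads works in a subspace of dimension d_model/h, the total projection and attention computation is roughly the same as for one head operating on the full model dimension, and the heads can be computed in parallel.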
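The standard formulation from the original Transformer architecture shows why splitting attention into heads need not raise the cost. It is written here for n tokens, model dimension d_model, and h heads each of width d_k = d_v = d_model/h:

```latex
\begin{aligned}
\mathrm{head}_i &= \mathrm{softmax}\!\left(\frac{(Q W_i^Q)(K W_i^K)^\top}{\sqrt{d_k}}\right) V W_i^V,
  \qquad W_i^Q, W_i^K, W_i^V \in \mathbb{R}^{d_{\text{model}} \times d_k} \\
\mathrm{MultiHead}(Q, K, V) &= \mathrm{Concat}(\mathrm{head}_1, \dots, \mathrm{head}_h)\, W^O,
  \qquad W^O \in \mathbb{R}^{h d_k \times d_{\text{model}}} \\
\text{weights per projection } (Q, K, \text{ or } V) &: \; h \cdot d_{\text{model}} \cdot d_k = d_{\text{model}}^2 \\
\text{multiply-adds for all scores } QK^\top &: \; h \cdot n^2 \cdot d_k = n^2 \, d_{\text{model}}
\end{aligned}
```

Both totals are independent of h, so the choice of head count changes how the work is divided rather than how much there is.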

Limitations and Trade-Offs

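However, more attention heads are not always better. In a standard transformer the model dimension d_model is split evenly across heads, so adding heads shrinks each head's dimension d_model/h and limits what any single head can represent. Heads can also learn overlapping patterns; in practice, trained models often contain heads that can be removed with little loss in accuracy. Finally, although the total projection cost stays roughly constant, each head keeps its own n × n attention map for a sequence of n tokens, so the memory needed for attention weights grows with the number of heads.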
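One simple way to look for the redundancy described above is to compare heads' attention maps directly. The sketch below flags pairs of heads whose flattened attention maps have high cosine similarity. This is an illustrative heuristic, not a standard diagnostic, and the 0.9 threshold and the toy attention maps are hypothetical values chosen for the example.

```python
import math
from itertools import combinations


def flatten(matrix):
    return [value for row in matrix for value in row]


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def redundant_head_pairs(head_weights, threshold=0.9):
    """Return (i, j, similarity) for every pair of heads whose maps are at least `threshold` similar."""
    flat = [flatten(w) for w in head_weights]
    pairs = []
    for i, j in combinations(range(len(flat)), 2):
        sim = cosine_similarity(flat[i], flat[j])
        if sim >= threshold:
            pairs.append((i, j, sim))
    return pairs


# Hypothetical attention maps for 3 heads over 3 tokens (each row sums to 1).
diag = [[0.8, 0.1, 0.1], [0.1, 0.8, 0.1], [0.1, 0.1, 0.8]]
near_diag = [[0.7, 0.2, 0.1], [0.1, 0.7, 0.2], [0.2, 0.1, 0.7]]
first_token = [[1.0, 0.0, 0.0], [1.0, 0.0, 0.0], [1.0, 0.0, 0.0]]

pairs = redundant_head_pairs([diag, near_diag, first_token])
print([(i, j, round(s, 3)) for i, j, s in pairs])  # [(0, 1, 0.988)]
```

Heads 0 and 1 both attend mostly to the current token, so they are flagged; head 2, which always looks at the first token, is not. In a real model the maps would come from running the network on held-out inputs, and a flagged pair suggests, but does not prove, that one head could be dropped.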

Practical Applications and Guidelines

In practice, the number of attention heads should be chosen deliberately, avoiding redundancy while keeping each head wide enough to represent something meaningful. Standard implementations split the model dimension evenly across heads, so the head count must divide d_model (the original Transformer, for example, used 8 heads of 64 dimensions each for d_model = 512). A sensible approach is to start with a small number of heads, increase it only while validation performance keeps improving, and monitor the model for redundant heads along the way.
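The start-small-and-grow guideline can be sketched as a simple sweep over valid head counts. Here `evaluate` stands for whatever validation run you already have (training and scoring a model with a given head count); it is a hypothetical callable, and the scores in the example are made-up placeholders. The minimum head dimension of 32 and the minimum gain of 0.001 are likewise assumed values, not recommendations from this article.

```python
def candidate_head_counts(d_model, min_head_dim=32):
    """Head counts that divide d_model evenly and keep each head at least min_head_dim wide."""
    return [h for h in range(1, d_model + 1)
            if d_model % h == 0 and d_model // h >= min_head_dim]


def grow_heads(d_model, evaluate, min_head_dim=32, min_gain=0.001):
    """Start with the fewest heads; keep increasing while the score improves by at least min_gain."""
    candidates = candidate_head_counts(d_model, min_head_dim)
    if not candidates:
        raise ValueError("min_head_dim is larger than d_model")
    best_h = candidates[0]
    best_score = evaluate(best_h)
    for h in candidates[1:]:
        score = evaluate(h)
        if score - best_score < min_gain:
            break  # more heads stopped paying off; keep the previous count
        best_h, best_score = h, score
    return best_h, best_score


# Placeholder validation scores standing in for real training runs.
fake_scores = {1: 0.70, 2: 0.74, 4: 0.76, 8: 0.765, 16: 0.764}
best = grow_heads(512, evaluate=fake_scores.get)
print(best)  # (8, 0.765)
```

Stopping at the first head count that fails to improve keeps the number of training runs low, but it is greedy; if validation scores are noisy, evaluating every candidate and picking the best is the safer choice.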

Impact on Model Performance and Efficiency

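The number of attention heads affects performance and efficiency in different ways. When the model dimension d_model is held fixed, the attention layer's parameter count does not depend on the number of heads (the query, key, value, and output projections together hold 4·d_model² weights, plus biases), and the total multiply-add count stays roughly the same; what grows is the memory for attention maps, while each head becomes narrower. If heads are instead added at a fixed per-head dimension, parameters and compute grow with the head count as well. Either way, the aim is to find the point where additional heads stop improving results.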
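A rough cost model makes this concrete. The sketch below assumes d_model is held fixed and split evenly across heads, counts only the four projection matrices (plus optional biases) and the two attention matrix products, and ignores batching, masking, softmax, and implementation overheads. `mha_cost` is a hypothetical helper, not a library function.

```python
def mha_cost(d_model, num_heads, seq_len, bias=True):
    """Rough size and compute figures for one multi-head attention layer on a single sequence."""
    if d_model % num_heads != 0:
        raise ValueError("d_model must be divisible by num_heads")
    head_dim = d_model // num_heads
    # Q, K, V and output projections: four d_model x d_model weight matrices in total,
    # no matter how their columns are divided among heads.
    params = 4 * d_model * d_model + (4 * d_model if bias else 0)
    # Per head, Q K^T costs seq_len^2 * head_dim multiply-adds, and so does weights @ V.
    attention_macs = num_heads * 2 * seq_len * seq_len * head_dim
    # Each head keeps its own seq_len x seq_len attention map.
    attention_map_entries = num_heads * seq_len * seq_len
    return {"head_dim": head_dim, "params": params,
            "attention_macs": attention_macs,
            "attention_map_entries": attention_map_entries}


for h in (1, 8, 16):
    print(h, mha_cost(d_model=512, num_heads=h, seq_len=1024))
```

For d_model = 512 and 1,024 tokens, the parameter and multiply-add counts come out identical for 1, 8, and 16 heads, while the number of attention-map entries grows from about 1 million to about 16.8 million and each head shrinks from 512 to 32 dimensions.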

Conclusion

Multi-head attention is a powerful part of transformers that lets models capture several kinds of relationships and patterns in data at once. Adding heads can improve results, but only up to a point: with a fixed model dimension, more heads mean narrower heads, possible redundancy, and more memory for attention maps. By choosing head counts deliberately and monitoring performance, developers can build models that are both efficient and effective.

FAQs

Q: What is multi-head attention in transformers?

A: Multi-head attention runs several attention heads in parallel, each with its own learned projections, so the model can attend to different parts and aspects of the input at the same time.

Q: What are the benefits of multi-head attention?

A: Multi-head attention lets a model capture several kinds of relationships and patterns in the data at once, at roughly the same computational cost as a single attention head over the full model dimension.

Q: What are the limitations and trade-offs of multi-head attention?

A: When the model dimension is fixed, adding heads makes each head narrower, can produce redundant heads that learn similar patterns, and increases the memory needed to store attention maps.

Q: How can developers manage head counts effectively?

A: Developers can start with a small number of heads, keep the head count a divisor of the model dimension, increase it only while validation performance improves, and monitor for redundant heads along the way.