Understanding AI and Neural Networks

Looking ahead, if these information-removal techniques are developed further, AI companies could one day remove copyrighted content, private information, or harmful memorized text from a neural network without destroying the model’s ability to perform transformative tasks. However, because neural networks store information in distributed ways that are still not completely understood, the researchers say that, for now, their method “cannot guarantee complete elimination of sensitive information.” These are early steps in a new research direction for AI.

Traveling the Neural Landscape

To understand how researchers from Goodfire distinguished memorization from reasoning in these neural networks, it helps to know about a concept in AI called the “loss landscape.” The “loss landscape” is a way of visualizing how wrong or right an AI model’s predictions are as you adjust its internal settings (which are called “weights”).

Imagine you’re tuning a complex machine with millions of dials. The “loss” measures the number of mistakes the machine makes: high loss means many errors, and low loss means few. The “landscape” is what you’d see if you could map out the error rate for every possible combination of dial settings.

During training, AI models essentially “roll downhill” in this landscape, a process known as gradient descent, adjusting their weights to find the valleys where they make the fewest mistakes. The weights a model settles on then determine its outputs, like answers to questions.
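To make the “rolling downhill” idea concrete, here is a minimal sketch of gradient descent on a made-up machine with just two dials. The loss function, the ideal settings of (3, -1), the learning rate, and the step count are all illustrative assumptions, not anything taken from the research.

```python
def loss(weights):
    """Toy loss (an assumption): squared distance from ideal dial settings (3, -1)."""
    return (weights[0] - 3.0) ** 2 + (weights[1] + 1.0) ** 2


def gradient(weights):
    """Slope of the toy loss along each dial; it points uphill."""
    return [2.0 * (weights[0] - 3.0), 2.0 * (weights[1] + 1.0)]


def gradient_descent(weights, learning_rate=0.1, steps=100):
    """Repeatedly nudge every dial a small step in the downhill direction."""
    for _ in range(steps):
        slopes = gradient(weights)
        weights = [w - learning_rate * s for w, s in zip(weights, slopes)]
    return weights


start = [0.0, 0.0]
final_weights = gradient_descent(start)
print(loss(start), loss(final_weights))  # the loss shrinks as the dials settle into the valley
```

Real language models follow the same basic recipe, but with billions of dials and a loss computed from training text rather than a simple formula.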

Memorization vs Reasoning

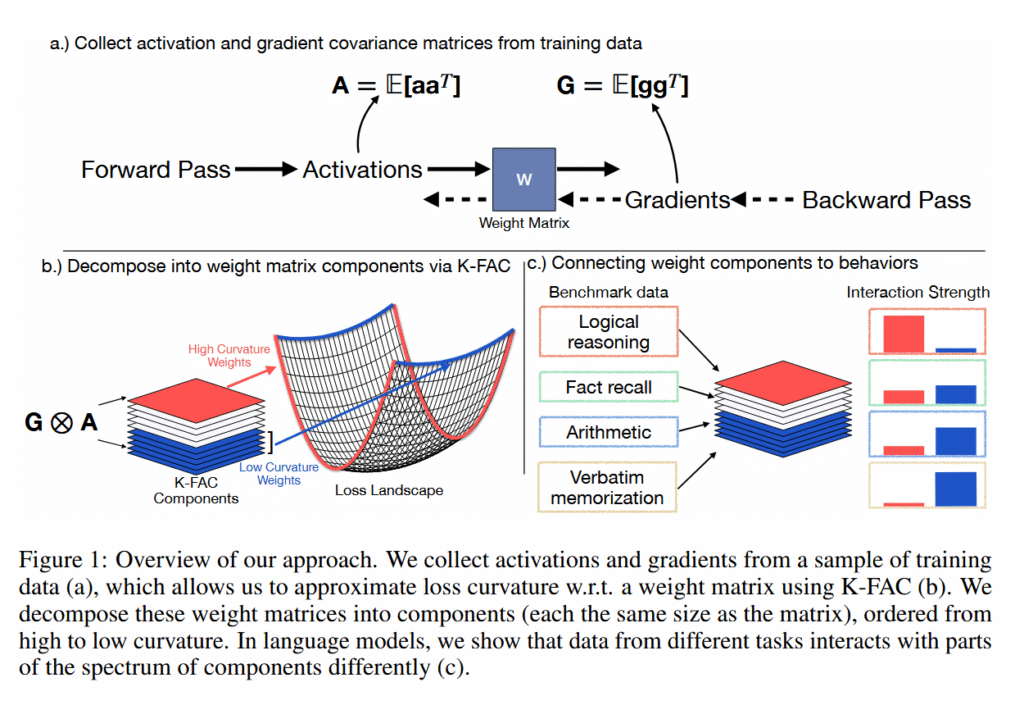

The researchers analyzed the “curvature” of the loss landscapes of particular AI language models, measuring how sensitive the model’s performance is to small changes in different neural network weights. Sharp peaks and valleys represent high curvature (where tiny changes cause big effects), while flat plains represent low curvature (where changes have minimal impact).
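One rough way to picture curvature is to nudge a single weight slightly in both directions and see how quickly the loss bends. The sketch below does that with a finite-difference estimate on a toy two-weight loss. The loss function and the helper name `curvature_along` are hypothetical, chosen only for illustration, and this is not how the researchers measured curvature.

```python
def loss(weights):
    """Toy loss (an assumption): very sensitive to weights[0], barely to weights[1]."""
    return 50.0 * weights[0] ** 2 + 0.01 * weights[1] ** 2


def curvature_along(loss_fn, weights, index, step=1e-3):
    """Estimate the second derivative of loss_fn along one weight
    using a central finite difference."""
    up = list(weights)
    up[index] += step
    down = list(weights)
    down[index] -= step
    return (loss_fn(up) - 2.0 * loss_fn(weights) + loss_fn(down)) / step ** 2


valley_floor = [0.0, 0.0]
sharp = curvature_along(loss, valley_floor, 0)  # roughly 100: a narrow, steep valley
flat = curvature_along(loss, valley_floor, 1)   # roughly 0.02: an almost flat plain
print(sharp, flat)
```

In this toy, a tiny change to the first weight has a large effect on the loss, while the same change to the second weight barely registers.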

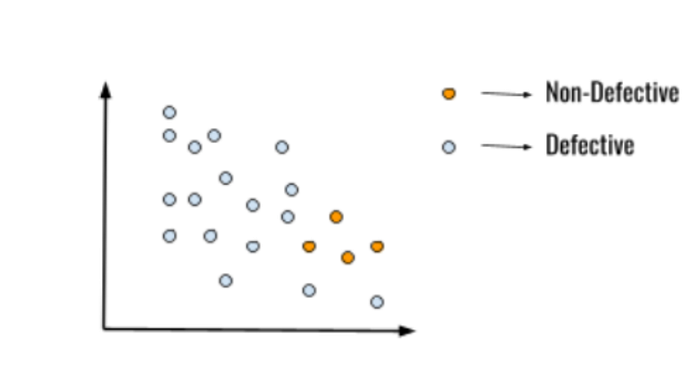

Using a technique called K-FAC (Kronecker-Factored Approximate Curvature), they found that individual memorized facts create sharp spikes in this landscape, but because each memorized item spikes in a different direction, when averaged together they create a flat profile. Meanwhile, reasoning abilities that many different inputs rely on maintain consistent moderate curves across the landscape, like rolling hills that remain roughly the same shape regardless of the direction from which you approach them.
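The averaging effect described above can be illustrated with a toy calculation. The sketch below is not K-FAC and not the researchers' code. It assumes each training example contributes a squared gradient as a crude stand-in for curvature, with one direction shared by every example and one private “memorized” direction per example. All sizes and magnitudes are made up.

```python
NUM_EXAMPLES = 100
SHARED = 0  # index of the direction that every example relies on


def example_gradient(i):
    """Hypothetical gradient for example i: a moderate push along the shared
    direction plus a strong push along that example's own private direction."""
    g = [0.0] * (NUM_EXAMPLES + 1)
    g[SHARED] = 1.0
    g[i + 1] = 3.0
    return g


def average_curvature():
    """Average the squared gradients over all examples, direction by direction."""
    totals = [0.0] * (NUM_EXAMPLES + 1)
    for i in range(NUM_EXAMPLES):
        for d, value in enumerate(example_gradient(i)):
            totals[d] += value ** 2
    return [t / NUM_EXAMPLES for t in totals]


single = [v ** 2 for v in example_gradient(0)]  # what one example sees on its own
avg = average_curvature()                       # what the whole dataset sees
print(single[1], avg[1], avg[SHARED])
```

For a single example, the private direction looks sharp (9 versus 1 for the shared direction). Averaged over 100 examples, that spike is diluted to 0.09, while the shared direction stays at 1, which mirrors the flat-versus-rolling-hills picture in the text.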

Conclusion

How neural networks store what they learn is still not completely understood, but this work offers a way to tell memorization and reasoning apart. By analyzing the curvature of a model’s loss landscape, researchers can see that individual memorized facts leave sharp, isolated spikes, while reasoning abilities shared across many inputs leave consistent, moderate curves. That distinction could eventually help AI companies remove unwanted memorized content without damaging a model’s broader abilities, although the researchers say their method cannot yet guarantee complete removal.

Frequently Asked Questions

Q: What is the difference between memorization and reasoning in AI?

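A: Memorization refers to the ability of an AI system to recall specific facts or pieces of information, while reasoning refers to the ability to draw conclusions and make decisions based on that information. In the loss landscape, each memorized item creates a sharp spike in its own direction, while reasoning abilities that many different inputs rely on show consistent, moderate curvature.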

Q: How do researchers analyze the loss landscapes of AI models?

A: Researchers use techniques such as K-FAC (Kronecker-Factored Approximate Curvature) to analyze the curvature of the loss landscapes of AI models. This helps them understand how sensitive the model’s performance is to small changes in different neural network weights.

Q: What are the potential applications of this research?

A: If the techniques are developed further, AI companies could one day remove copyrighted content, private information, or harmful memorized text from a neural network without destroying the model’s ability to perform transformative tasks. For now, the researchers say their method “cannot guarantee complete elimination of sensitive information.”