Introduction to Private AI Compute

Google has rolled out Private AI Compute, a new cloud-based processing system designed to bring the privacy of on-device AI to the cloud. The platform aims to give users faster, more capable AI experiences without compromising data security. It combines Google’s most advanced Gemini models with strict privacy safeguards, reflecting the company’s ongoing effort to make AI both powerful and responsible.

How Private AI Compute Compares to Other Solutions

The platform closely resembles Apple’s Private Cloud Compute, signalling how major tech firms are rethinking privacy in the age of large-scale AI. Both companies are trying to balance two competing needs: the huge computing power required to run advanced AI models and users’ expectations for data privacy.

Why Google Built Private AI Compute

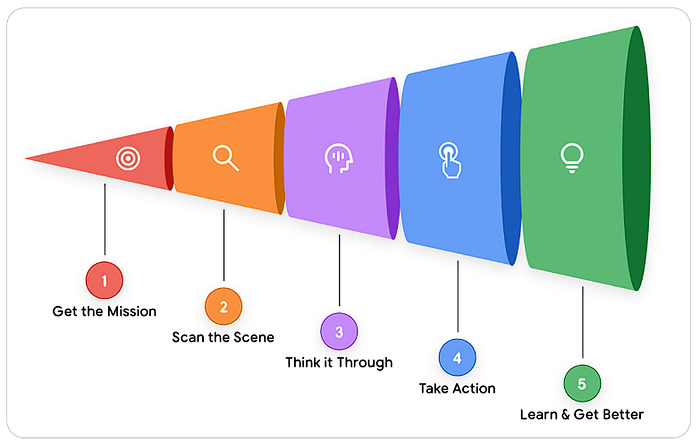

As AI systems get smarter, they’re also becoming more personal. What started as tools that completed simple tasks or answered direct questions are now systems that can anticipate user needs, suggest actions, and handle complex processes in real time. That kind of intelligence demands a level of reasoning and computation that often exceeds what’s possible on a single device.

Private AI Compute bridges that gap. It lets Gemini models in the cloud process data faster and more efficiently while, according to Google, keeping sensitive information private and inaccessible to anyone else, including Google engineers. Google describes it as combining the power of cloud AI with the security users expect from local processing.

In practical terms, this means you could get quicker responses, smarter suggestions, and more personalised results while your personal data stays inaccessible to anyone but you.

How Private AI Compute Keeps Data Secure

Google claims the new platform is based on the same principles that underpin its broader AI and privacy strategy: giving users control, maintaining security, and earning trust. The system acts as a protected computing environment, isolating data so it can be processed safely and privately.

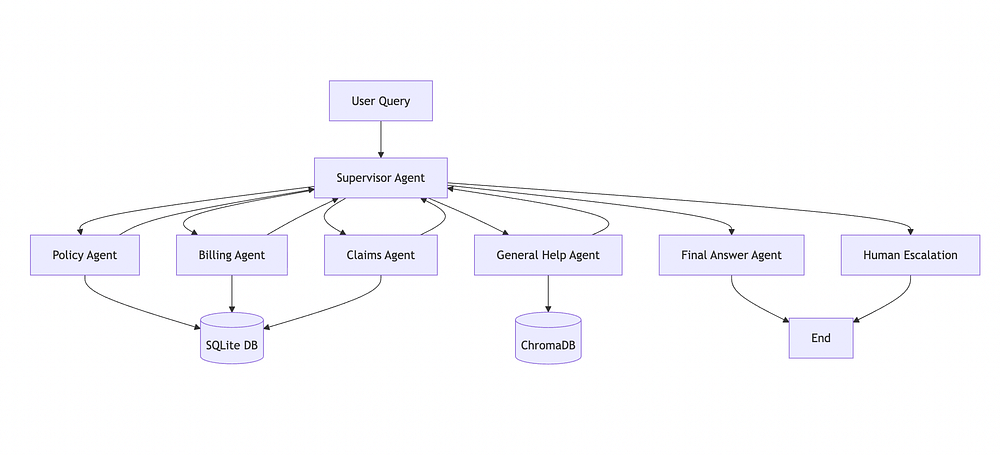

It uses a multi-layered design centred on three key components:

- Unified Google tech stack: Private AI Compute runs entirely on Google’s own infrastructure, powered by custom Tensor Processing Units (TPUs). It’s secured through Titanium Intelligence Enclaves (TIE), which create an additional layer of protection for data processed in the cloud.

- Encrypted connections: Before data is sent for processing, the device uses remote attestation and encryption to verify that it is connecting to a trusted, hardware-secured environment. Once inside this sealed cloud space, information stays private to the user.

- Zero access assurance: Google says the system is designed so that no one — not even the company itself — can access the data processed within Private AI Compute.
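
The attestation check described in the list above can be illustrated with a toy sketch. This is not Google's protocol or API: every name here (`TRUSTED_IMAGE`, `HARDWARE_KEY`, `make_quote`, `verify_quote`, `send_if_trusted`) is hypothetical, and a shared-key HMAC stands in for the hardware-rooted asymmetric signatures a real system would use. It only shows the general idea that a client refuses to release data unless the remote environment proves it is running the expected software.

```python
import hashlib
import hmac
import secrets

# Hypothetical values for illustration only; none of this is Google's actual API.
TRUSTED_IMAGE = b"enclave-software-v1"
EXPECTED_MEASUREMENT = hashlib.sha256(TRUSTED_IMAGE).hexdigest()
HARDWARE_KEY = secrets.token_bytes(32)  # stand-in for a hardware-rooted signing key


def make_quote(running_image: bytes, nonce: bytes, key: bytes) -> dict:
    """Server side: measure the running software and bind the result to the client's nonce."""
    measurement = hashlib.sha256(running_image).hexdigest()
    tag = hmac.new(key, measurement.encode() + nonce, hashlib.sha256).hexdigest()
    return {"measurement": measurement, "tag": tag}


def verify_quote(quote: dict, nonce: bytes, key: bytes) -> bool:
    """Client side: check the quote is authentic and fresh, then check the measurement."""
    expected_tag = hmac.new(
        key, quote["measurement"].encode() + nonce, hashlib.sha256
    ).hexdigest()
    if not hmac.compare_digest(expected_tag, quote["tag"]):
        return False  # quote was forged, altered, or replayed with a stale nonce
    return hmac.compare_digest(quote["measurement"], EXPECTED_MEASUREMENT)


def send_if_trusted(payload: str, running_image: bytes) -> str:
    """Release the payload only if the remote environment passes attestation."""
    nonce = secrets.token_bytes(16)  # fresh per request to prevent replay
    quote = make_quote(running_image, nonce, HARDWARE_KEY)
    if not verify_quote(quote, nonce, HARDWARE_KEY):
        return "refused"
    # A real client would now encrypt the payload for the attested environment.
    return "sent"
```

In this sketch, `send_if_trusted("summarise my notes", TRUSTED_IMAGE)` goes through, while the same call with a modified image is refused before any data leaves the client.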

This design builds on Google’s Secure AI Framework (SAIF), AI Principles, and Privacy Principles, which outline how the company develops and deploys AI responsibly.

What Users Can Expect

Private AI Compute also improves the performance of AI features that are already running on devices. Magic Cue on the Pixel 10 can now offer more relevant and timely suggestions by leveraging cloud-level processing power. Similarly, the Recorder app can use the system to summarise transcriptions across a wider range of languages — something that would be difficult to do entirely on-device.

These examples hint at what’s ahead. With Private AI Compute, Google can deliver AI experiences that combine the privacy of local models with the intelligence of cloud-based ones. It’s an approach that could eventually apply to everything from personal assistants and photo organisation to productivity and accessibility tools.

Google calls this launch “just the beginning.” The company says Private AI Compute opens the door to a new generation of AI tools that are both more capable and more private. As AI becomes increasingly woven into everyday tasks, users are demanding greater transparency and control over how their data is used — and Google appears to be positioning this technology as part of that answer.

Conclusion

Private AI Compute is Google’s attempt to balance the power of AI with the need for data privacy. By providing a secure, cloud-based platform for processing sensitive information, the company aims to deliver cloud-scale AI with on-device levels of privacy. How far that promise holds will become clearer as more features move onto the platform.

FAQs

- What is Private AI Compute? Private AI Compute is a cloud-based processing system designed to bring the privacy of on-device AI to the cloud.

- How does Private AI Compute keep data secure? Private AI Compute uses a multi-layered design built on three components: a unified Google tech stack, encrypted connections, and zero access assurance.

- What are the benefits of Private AI Compute? Private AI Compute enables faster, more capable AI experiences without compromising data security. It also improves the performance of AI features that are already running on devices.

- Is Private AI Compute available now? Yes. Google has rolled out Private AI Compute, and it already supports Magic Cue on the Pixel 10 and transcription summaries in the Recorder app. Google expects it to enable a new generation of AI tools that are both more capable and more private.