Introduction to AI Safety

As AI systems become more powerful, they will be integrated into increasingly important domains. "It’s very important to make sure they’re safe," says Leo Gao, a research scientist at OpenAI. This research is still at an early stage, but its goal is to uncover the hidden mechanisms inside AI models so that they can be made safer and more reliable.

The New Model

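The new model, called a weight-sparse transformer, is smaller and less capable than top-tier models such as OpenAI’s GPT-5, Anthropic’s Claude, and Google DeepMind’s Gemini. In a weight-sparse network, each neuron is connected to only a few other neurons rather than to every neuron in its neighboring layers. The model is comparable to GPT-1, which OpenAI released in 2018. The aim is not to compete with the best models but to use this simpler design to understand how such models work and how to make them safer.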

Understanding AI Models

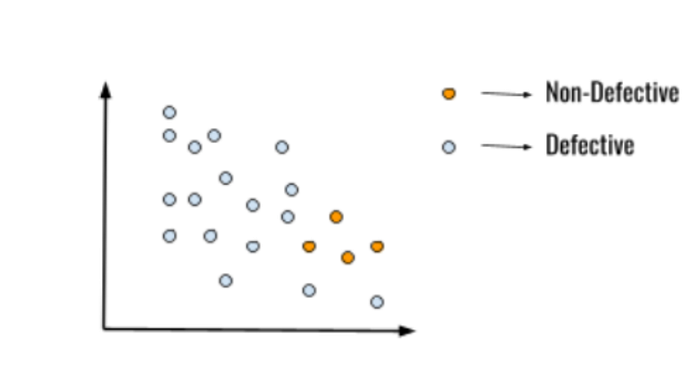

The research belongs to a relatively new field called mechanistic interpretability, which aims to map the internal mechanisms that AI models use to carry out tasks. This is challenging because AI models are built from neural networks, which consist of nodes called neurons arranged in layers. In most networks, known as dense networks, each neuron is connected to every neuron in the adjacent layers, so information flows through an enormous number of connections that are hard to trace.
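To make the contrast between dense and weight-sparse connectivity concrete, here is a minimal Python sketch that counts the connections in one layer of each kind. The layer size (512 neurons), the budget of 8 incoming connections per neuron, and all helper names are illustrative assumptions. The sparse connections are picked at random, so this does not reproduce how OpenAI's weight-sparse transformer decides which weights to keep; it only shows the difference in scale.

```python
import random

def dense_mask(n_in, n_out):
    """Connectivity of a dense layer: every output neuron reads every input neuron."""
    return [[1] * n_in for _ in range(n_out)]

def sparse_mask(n_in, n_out, k, seed=0):
    """Connectivity of a hypothetical weight-sparse layer.

    Each output neuron keeps only k incoming connections. They are chosen at
    random here purely for illustration; in a trained model the choice would
    come from training rather than chance.
    """
    rng = random.Random(seed)
    mask = []
    for _ in range(n_out):
        row = [0] * n_in
        for j in rng.sample(range(n_in), k):
            row[j] = 1
        mask.append(row)
    return mask

def count_connections(mask):
    """Total number of nonzero weights (connections) in the layer."""
    return sum(sum(row) for row in mask)

def inputs_of(mask, neuron):
    """Indices of the input neurons that a given output neuron reads from."""
    return [j for j, on in enumerate(mask[neuron]) if on]

dense = dense_mask(512, 512)
sparse = sparse_mask(512, 512, k=8)
print(count_connections(dense))   # 262144
print(count_connections(sparse))  # 4096
print(len(inputs_of(sparse, 0)))  # 8
```

Tracing what one neuron in the sparse layer depends on means following 8 inputs instead of 512, which is the intuition behind why sparse connectivity could make a model's internal circuits easier to read.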

How Neural Networks Work

Dense neural networks are relatively efficient to train and run, but they spread what they learn across a vast knot of connections. As a result, a simple concept or function can be split among neurons in different parts of a model. Conversely, a single neuron can respond to several unrelated features. This happens partly because a model can pack more features into its activations than it has neurons, a phenomenon known as superposition. Both effects make it difficult to tie specific parts of a model to specific concepts.
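A toy example can make superposition concrete. The Python sketch below is illustrative and not taken from the OpenAI work: it stores three hypothetical features in just two neurons by giving each feature its own direction, 120 degrees apart. Reading a feature back means projecting the neuron activations onto that feature's direction and clipping negative interference to zero with a ReLU. The angles, feature count, and function names are all assumptions chosen for clarity.

```python
import math

# Hypothetical toy setup: 3 features share 2 neurons.
# Each feature gets a direction in the 2-D neuron space, 120 degrees apart.
ANGLES = [0.0, 2 * math.pi / 3, 4 * math.pi / 3]
DIRECTIONS = [(math.cos(a), math.sin(a)) for a in ANGLES]

def encode(features):
    """Map 3 feature strengths to 2 neuron activations (a weighted sum of directions)."""
    n0 = sum(f * d[0] for f, d in zip(features, DIRECTIONS))
    n1 = sum(f * d[1] for f, d in zip(features, DIRECTIONS))
    return (n0, n1)

def decode(neurons):
    """Recover feature strengths by projecting onto each direction; ReLU drops negative interference."""
    return [max(0.0, neurons[0] * d[0] + neurons[1] * d[1]) for d in DIRECTIONS]

# Neuron 0 takes a nonzero value for every feature, so it is not a clean
# single-feature detector.
for i in range(3):
    one_hot = [1.0 if j == i else 0.0 for j in range(3)]
    print(i, [round(x, 3) for x in encode(one_hot)])

# One active feature is recovered cleanly...
print([round(x, 3) for x in decode(encode([0.0, 1.0, 0.0]))])  # [0.0, 1.0, 0.0]
# ...but two features active at once interfere with each other.
print([round(x, 3) for x in decode(encode([1.0, 1.0, 0.0]))])  # [0.5, 0.5, 0.0]
```

The packing works only while features are rarely active at the same time; when two fire together, the decoded values blur. This is one reason a neuron's activity alone is a poor guide to which concept a model is using.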

Expert Opinions

Researchers outside OpenAI have responded positively to the work. Elisenda Grigsby, a mathematician at Boston College, says, "I’m sure the methods it introduces will have a significant impact." Lee Sharkey, a research scientist at AI startup Goodfire, adds, "This work aims at the right target and seems well executed."

Conclusion

In conclusion, research on mechanistic interpretability is important for developing reliable and trustworthy AI models. Understanding how models work internally is a step toward making them safer and more reliable. Although the work is still at an early stage, it could have a significant impact on how AI models are studied and built.

FAQs

Q: What is mechanistic interpretability?

A: Mechanistic interpretability is a field of research that aims to map the internal mechanisms of AI models to understand how they work.

Q: Why is it hard to understand AI models?

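A: AI models are built from neural networks, in which neurons arranged in layers are typically densely connected. What a model learns is spread across a vast number of connections, and individual neurons can represent several features at once, so it is hard to link specific parts of a model to specific concepts.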

Q: What is the goal of the research on AI safety?

A: The goal of the research on AI safety is to make AI models safer and more reliable by understanding how they work and identifying potential risks.

Q: What is the potential impact of this research?

A: By revealing how AI models work internally, research on AI safety and mechanistic interpretability could have a significant impact on the field and help developers build more reliable models.