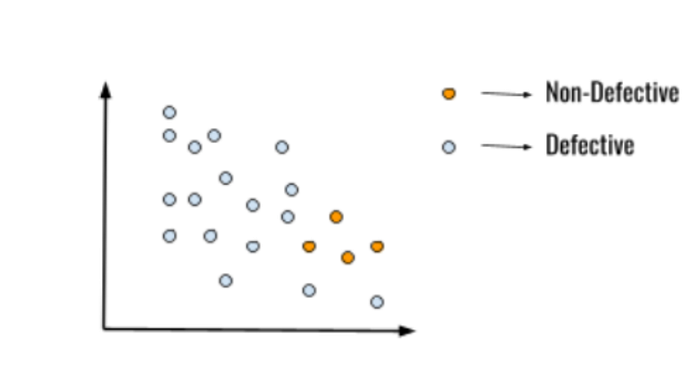

Introduction to Imbalanced Datasets

Most people judge a machine learning model by its accuracy alone, yet that number can be badly misleading. Many factors determine how well a model performs, and the most important of them is the quality of the dataset. Data preparation is therefore the most fundamental step in building a machine learning model.

The Challenges of Imbalanced Datasets

This article discusses the challenges that imbalanced datasets pose in machine learning, in particular how they can make accuracy look deceptively high: if 95% of the samples belong to one class, a model that always predicts that class scores 95% accuracy while never detecting the minority class. It then covers methods to address the problem, such as undersampling, oversampling, and SMOTE (Synthetic Minority Over-sampling Technique).
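A minimal sketch of why accuracy misleads on skewed data, assuming hypothetical toy labels (95 majority-class samples labelled 0, 5 minority-class samples labelled 1) and a trivial "model" that always predicts the majority class. Alongside accuracy it computes recall on the minority class, which exposes what accuracy hides.

```python
# Hypothetical toy labels: 95 majority-class samples (0) and 5 minority-class samples (1).
y_true = [0] * 95 + [1] * 5

# A trivial "model" that ignores its input and always predicts the majority class.
y_pred = [0] * len(y_true)

# Accuracy: fraction of all predictions that are correct.
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

# Minority-class recall: fraction of actual minority samples the model found.
true_positives = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
minority_recall = true_positives / y_true.count(1)

print(f"accuracy        = {accuracy:.2f}")         # accuracy        = 0.95
print(f"minority recall = {minority_recall:.2f}")  # minority recall = 0.00
```

The model looks excellent by accuracy and is useless for the class that usually matters, which is why per-class metrics such as recall are worth reporting on imbalanced data.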


Methods to Handle Imbalanced Datasets

Each method has its own applications and potential pitfalls. The main methods are:

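- Undersampling: reducing the number of samples in the majority class, usually by removing them at random. It is simple, but it can discard useful information.

- Oversampling: increasing the number of samples in the minority class, typically by duplicating existing ones. No information is lost, but exact copies can encourage overfitting.

- SMOTE: creating synthetic minority-class samples by interpolating between a minority sample and its nearest minority-class neighbors, instead of copying existing samples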
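A minimal, dependency-free sketch of the three methods, assuming a binary problem with features stored as lists of floats. The helpers `random_undersample`, `random_oversample` and `smote` are hypothetical names written for illustration; `smote` is a simplified version of the idea (interpolating between a minority sample and one of its k nearest minority-class neighbors), not the reference implementation. Tested implementations exist in the imbalanced-learn library as `RandomUnderSampler`, `RandomOverSampler` and `SMOTE`.

```python
import math
import random


def random_undersample(X, y, majority, seed=0):
    """Randomly drop majority-class rows until both classes have the same size.

    Assumes a binary problem in which `majority` is the label of the larger class.
    """
    rng = random.Random(seed)
    maj_idx = [i for i, label in enumerate(y) if label == majority]
    min_idx = [i for i, label in enumerate(y) if label != majority]
    keep = min_idx + rng.sample(maj_idx, len(min_idx))  # sample without replacement
    return [X[i] for i in keep], [y[i] for i in keep]


def random_oversample(X, y, minority, seed=0):
    """Duplicate randomly chosen minority-class rows until both classes match."""
    rng = random.Random(seed)
    min_idx = [i for i, label in enumerate(y) if label == minority]
    n_extra = (len(y) - len(min_idx)) - len(min_idx)  # majority count minus minority count
    extra = [rng.choice(min_idx) for _ in range(n_extra)]  # sample with replacement
    idx = list(range(len(y))) + extra
    return [X[i] for i in idx], [y[i] for i in idx]


def smote(X_min, n_new, k=5, seed=0):
    """Simplified SMOTE: create n_new synthetic minority-class points.

    Each point lies on the line segment between a randomly chosen minority
    sample and one of its k nearest minority-class neighbors (Euclidean distance).
    """
    rng = random.Random(seed)
    k = min(k, len(X_min) - 1)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(X_min))
        base = X_min[i]
        others = [X_min[j] for j in range(len(X_min)) if j != i]
        neighbors = sorted(others, key=lambda p: math.dist(base, p))[:k]
        neighbor = rng.choice(neighbors)
        gap = rng.random()  # uniform in [0, 1)
        synthetic.append([b + gap * (n - b) for b, n in zip(base, neighbor)])
    return synthetic


# Usage on hypothetical toy data: 16 majority rows (label 0), 4 minority rows (label 1).
X = [[float(i), float(i % 7)] for i in range(20)]
y = [0] * 16 + [1] * 4

X_u, y_u = random_undersample(X, y, majority=0)
X_o, y_o = random_oversample(X, y, minority=1)

X_min = [x for x, label in zip(X, y) if label == 1]
X_s = smote(X_min, n_new=12, k=3)
X_bal, y_bal = X + X_s, y + [1] * len(X_s)

print(y_u.count(0), y_u.count(1))      # 4 4
print(y_o.count(0), y_o.count(1))      # 16 16
print(y_bal.count(0), y_bal.count(1))  # 16 16
```

Whichever method is used, resample only the training split: resampling before the train/test split lets duplicated or interpolated minority points leak into the test set and inflates the measured scores.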

Conclusion

Handling imbalanced datasets is a crucial step in machine learning. By understanding the challenges and the methods available to address them, developers can build models that perform reliably on every class rather than only on the majority class. The right approach depends on the specific dataset and the problem being solved.

FAQs

Q: What is an imbalanced dataset?

A: An imbalanced dataset is a dataset where one class has a significantly larger number of instances than the other classes.

Q: Why is it important to handle imbalanced datasets?

A: Handling imbalanced datasets is important because an unaddressed imbalance can produce misleading accuracy metrics and models that perform poorly on the minority class.

Q: What are some methods to handle imbalanced datasets?

A: Some methods to handle imbalanced datasets include undersampling, oversampling, and SMOTE (Synthetic Minority Over-sampling Technique).