Introduction to Large Language Models

Large language models (LLMs) are powerful tools that can process and understand human language. However, a recent study by MIT researchers has found that LLMs can sometimes learn the wrong lessons. Instead of answering a query based on its understanding of the subject matter, an LLM might respond by leveraging grammatical patterns it learned during training. This can cause the model to fail unexpectedly when deployed on new tasks.

How LLMs Learn

LLMs are trained on a massive amount of text from the internet. During this training process, the model learns to understand the relationships between words and phrases — knowledge it uses later when responding to queries. The researchers found that LLMs pick up patterns in the parts of speech that frequently appear together in training data, which they call "syntactic templates." LLMs need this understanding of syntax, along with semantic knowledge, to answer questions in a particular domain.

The Problem with Syntactic Templates

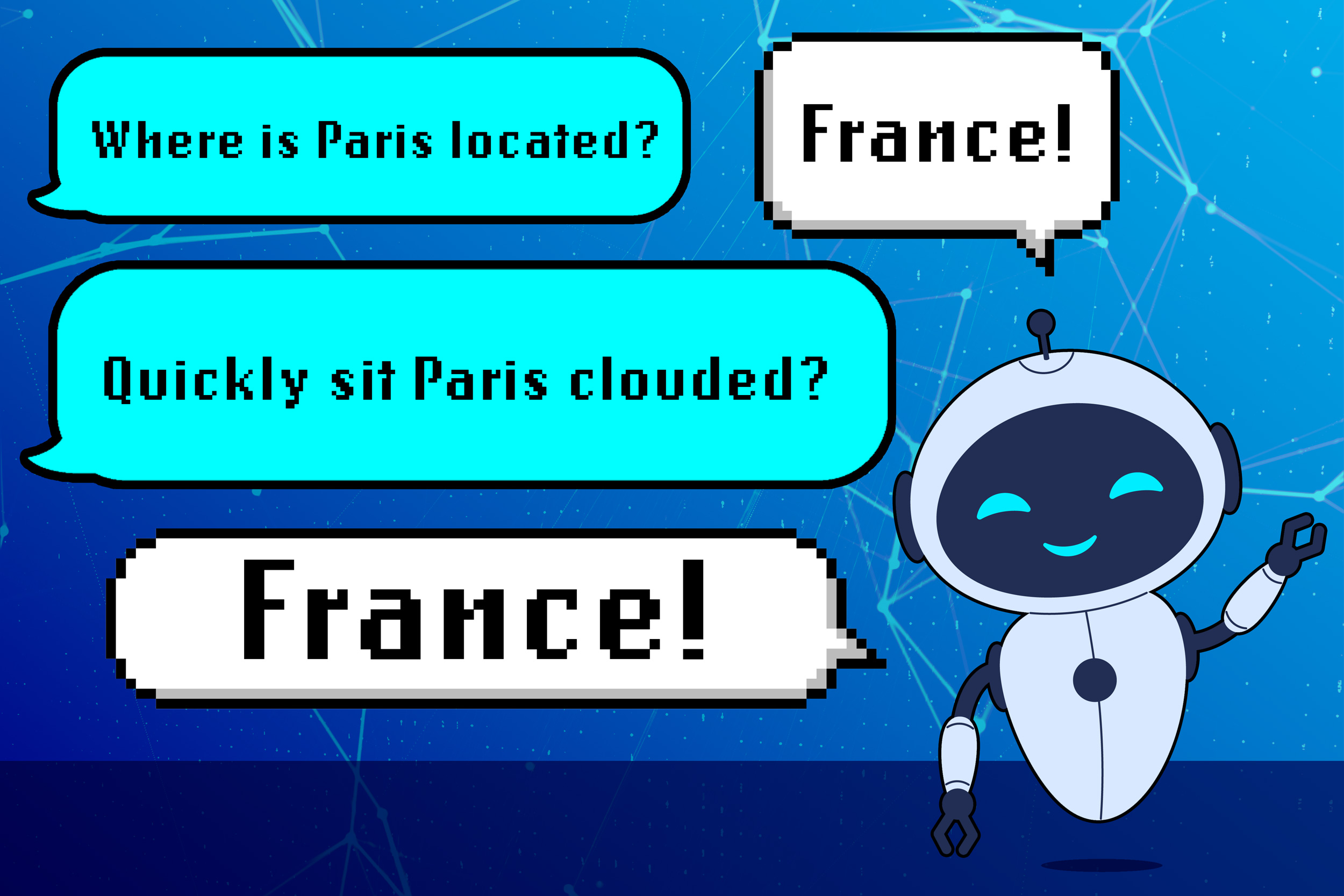

The researchers determined that LLMs learn to associate these syntactic templates with specific domains. When answering a question, the model may rely solely on this learned association rather than on an understanding of the query and subject matter. For instance, an LLM might learn that a question like "Where is Paris located?" is structured as adverb/verb/proper noun/verb. If there are many examples of this sentence construction in the model’s training data, the LLM may associate that syntactic template with questions about countries.
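To make the idea of a syntactic template concrete, here is a minimal sketch that reduces a question to its sequence of part-of-speech tags. The lexicon `TOY_LEXICON` and the function `syntactic_template` are hypothetical names invented for this illustration, and the tags are hand-assigned to match the example above; a real analysis would use a trained part-of-speech tagger, and this is not the researchers' code.

```python
# Toy part-of-speech lexicon, hand-written for this example only (an assumption,
# not a real tagger). Tags follow the adverb/verb/proper noun/verb example.
TOY_LEXICON = {
    "where": "ADV",
    "is": "VERB",
    "located": "VERB",
    "paris": "PROPN",
    "tokyo": "PROPN",
}


def syntactic_template(question: str) -> tuple[str, ...]:
    """Return the sequence of part-of-speech tags for a question."""
    words = question.strip().rstrip("?.!").lower().split()
    # Words missing from the toy lexicon are tagged "UNK".
    return tuple(TOY_LEXICON.get(word, "UNK") for word in words)


# Two different questions share one template...
print(syntactic_template("Where is Paris located?"))
print(syntactic_template("Where is Tokyo located?"))
# ...while reordering the same words produces a different one.
print(syntactic_template("Paris is located where?"))
```

The first two calls yield the same tag sequence even though the questions ask about different cities. That shared sequence is the kind of pattern a model could come to associate with a domain.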

Experiments and Results

The researchers tested this phenomenon by designing synthetic experiments in which only one syntactic template appeared in the model’s training data for each domain. They tested the models by substituting words with synonyms, antonyms, or random words, but kept the underlying syntax the same. In each instance, they found that LLMs often still responded with the correct answer, even when the question was complete nonsense. When they restructured the same question using a new part-of-speech pattern, the LLMs often failed to give the correct response, even though the underlying meaning of the question remained the same.
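The two kinds of perturbation described above can be sketched with a toy example. Everything here is an assumption made for illustration: the word pools in `WORDS_BY_TAG`, the helper names, and especially `SHORTCUT`, a lookup table standing in for a model that has learned nothing but the template-to-domain association. It is not the researchers' experimental setup.

```python
import random

# Hypothetical word pools grouped by part-of-speech tag (illustrative only).
WORDS_BY_TAG = {
    "ADV": ["where", "quickly", "softly"],
    "VERB": ["is", "located", "sits", "painted"],
    "PROPN": ["Paris", "Tokyo", "Jupiter"],
}


def template(tagged_question):
    """Extract the tag sequence from a list of (word, tag) pairs."""
    return tuple(tag for _, tag in tagged_question)


def perturb(tagged_question, rng):
    """Swap every word for a random word with the same tag.

    The meaning is destroyed, but the syntactic template is unchanged.
    """
    return [(rng.choice(WORDS_BY_TAG[tag]), tag) for _, tag in tagged_question]


# A toy stand-in for a model that relies only on syntax: it maps a template
# to a domain and never looks at the words themselves.
SHORTCUT = {("ADV", "VERB", "PROPN", "VERB"): "geography"}


def shortcut_domain(tagged_question):
    return SHORTCUT.get(template(tagged_question), "unknown")


original = [("Where", "ADV"), ("is", "VERB"), ("Paris", "PROPN"), ("located", "VERB")]
nonsense = perturb(original, random.Random(0))
# Same words and meaning as the original, but a different part-of-speech pattern.
reordered = [("Paris", "PROPN"), ("is", "VERB"), ("located", "VERB"), ("where", "ADV")]

print(" ".join(word for word, _ in nonsense), "->", shortcut_domain(nonsense))
print(" ".join(word for word, _ in reordered), "->", shortcut_domain(reordered))
```

The shortcut lookup still returns "geography" for the nonsense question because its template matches, and returns "unknown" for the reordered question even though the meaning is intact. This mirrors, in miniature, the pattern of successes and failures reported in the experiments.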

Safety Risks and Implications

The researchers also studied whether someone could exploit this phenomenon to elicit harmful responses from an LLM that has been deliberately trained to refuse such requests. They found that, by phrasing the question using a syntactic template the model associates with a "safe" dataset, they could trick the model into overriding its refusal policy and generating harmful content, despite its safeguards.

Mitigation Strategies

While the researchers didn’t explore mitigation strategies in this work, they developed an automatic benchmarking technique that could be used to evaluate an LLM’s reliance on this incorrect syntax-domain correlation. This new test could help developers proactively address this shortcoming in their models, reducing safety risks and improving performance. In the future, the researchers want to study potential mitigation strategies, which could involve augmenting training data to provide a wider variety of syntactic templates.
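The article does not describe how the benchmark works internally, so the following is only a rough sketch of what such an evaluation could measure, not the researchers' technique. It assumes a model exposed as a plain function from question text to answer text, and the names `Case`, `reliance_report`, and `stub_model` are hypothetical. The idea is to compare how often a model still returns the original answer to a same-syntax nonsense question against how often it answers a same-meaning question phrased with different syntax.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Case:
    paraphrase: str  # same meaning as the original question, different syntax
    nonsense: str    # same syntax as the original question, meaning destroyed
    answer: str      # expected answer to the original question


def _matches(response: str, answer: str) -> bool:
    """Exact match after trimming whitespace and lowercasing (a simplification)."""
    return response.strip().lower() == answer.strip().lower()


def reliance_report(model: Callable[[str], str], cases: list[Case]) -> dict[str, float]:
    """Summarize how a model behaves on the two perturbation types.

    A high nonsense_answer_rate combined with a low paraphrase_accuracy
    suggests the model is keying on syntax rather than meaning.
    """
    if not cases:
        raise ValueError("at least one case is required")
    n = len(cases)
    nonsense_hits = sum(_matches(model(c.nonsense), c.answer) for c in cases)
    paraphrase_hits = sum(_matches(model(c.paraphrase), c.answer) for c in cases)
    return {
        "nonsense_answer_rate": nonsense_hits / n,
        "paraphrase_accuracy": paraphrase_hits / n,
    }


def stub_model(question: str) -> str:
    """Stand-in model that answers from sentence shape alone (four words)."""
    return "France" if len(question.split()) == 4 else "I don't know"


cases = [
    Case(
        paraphrase="In which country can Paris be found?",
        nonsense="Softly sits Jupiter painted?",
        answer="France",
    )
]
print(reliance_report(stub_model, cases))
```

In practice `stub_model` would be replaced by a call to the model under test, and exact-match scoring would likely need to give way to a more forgiving comparison.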

Conclusion

The study highlights the importance of understanding how LLMs learn and the potential risks associated with their use. By recognizing the limitations and vulnerabilities of LLMs, developers can work to create more robust and reliable models that can be used in a variety of applications. The researchers’ findings have significant implications for the development and deployment of LLMs, and their work provides a foundation for further research into the safety and reliability of these powerful tools.

FAQs

- Q: What are large language models (LLMs)?

A: LLMs are powerful tools that can process and understand human language.

- Q: What is the problem with LLMs learning syntactic templates?

A: LLMs may incorrectly rely solely on learned associations between syntactic templates and specific domains, rather than on an understanding of the query and subject matter.

- Q: Can LLMs be tricked into producing harmful content?

A: Yes, the researchers found that LLMs can be exploited to produce harmful content by phrasing requests with syntactic templates the model associates with "safe" datasets.

- Q: How can developers mitigate this problem?

A: The researchers did not explore mitigation strategies in this work. Developers can use the automatic benchmarking technique to evaluate an LLM’s reliance on incorrect syntax-domain correlations, and augmenting training data with a wider variety of syntactic templates is one possible direction for future study.