Introduction to Model Context Protocol (MCP)

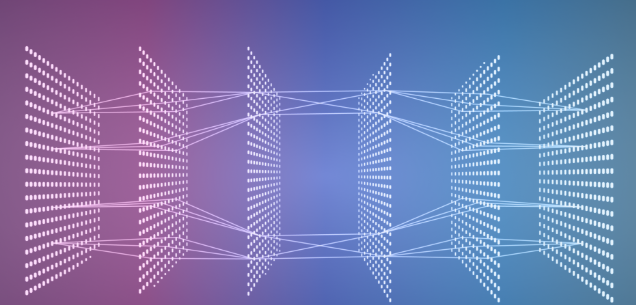

The Model Context Protocol (MCP) is a standard way for AI assistants to talk to external tools. It defines how a model can discover, call, and communicate with tools in a structured and predictable way. Think of it as a toolbelt for LLMs (Large Language Models).

What Makes MCP Special

MCP has several key features that make it useful:

- Standardised Communication: Every message is JSON-RPC 2.0, carried over standard input/output (stdio) for local servers or over HTTP for remote ones, so clients and servers speak the same language.

- Tool Discovery: A client can ask a server to list its tools, each described by a name, a description, and a JSON Schema for its inputs, so the LLM knows what is available, like checking a toolbox before getting to work.

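- Safe Execution: Each tool declares a schema for its inputs, so servers can validate arguments before running anything, and failures are returned as structured errors rather than free-form text. This keeps interactions clean and predictable.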

- Extensible: You can expose any capability as an MCP tool — HTTP clients, databases, shell commands, you name it.
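To make discovery and validation concrete, here is a minimal Python sketch of one tool entry as it might appear in a "tools/list" result, plus a tiny argument check. The "make_request" name comes from the example later in this article; its description and schema are assumptions. The validator only covers required keys, a few primitive types, and enums, where a real server would use a full JSON Schema validator.

```python
# Hypothetical entry from a "tools/list" result. The name matches this article's
# example; the description and schema are assumptions for illustration.
MAKE_REQUEST_TOOL = {
    "name": "make_request",
    "description": "Send an HTTP request and return the response body.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "url": {"type": "string", "description": "Absolute URL to call"},
            "method": {"type": "string", "enum": ["GET", "POST", "PUT", "DELETE"]},
        },
        "required": ["url"],
    },
}

# JSON Schema type names this sketch knows how to check; other types are skipped.
CHECKED_TYPES = {"string": str, "boolean": bool, "object": dict, "array": list}


def validate_arguments(schema: dict, arguments: dict) -> list:
    """Return a list of problems; an empty list means the arguments passed.

    Only checks required keys, a few primitive types, and enums.
    """
    problems = []
    properties = schema.get("properties", {})
    for key in schema.get("required", []):
        if key not in arguments:
            problems.append(f"missing required argument: {key}")
    for key, value in arguments.items():
        spec = properties.get(key)
        if spec is None:
            continue  # JSON Schema allows extra properties by default
        expected = CHECKED_TYPES.get(spec.get("type"))
        if expected is not None and not isinstance(value, expected):
            problems.append(f"{key} should be of type {spec['type']}")
        elif "enum" in spec and value not in spec["enum"]:
            problems.append(f"{key} must be one of {spec['enum']}")
    return problems


print(validate_arguments(MAKE_REQUEST_TOOL["inputSchema"], {"method": "FETCH"}))
# ['missing required argument: url', "method must be one of ['GET', 'POST', 'PUT', 'DELETE']"]
```

Returning a list of problems, rather than raising on the first one, lets a server report every issue at once so the model can correct its arguments in a single retry.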

Why JSON-RPC 2.0

JSON-RPC 2.0 is a lightweight remote procedure call (RPC) protocol that encodes requests and responses as JSON. A request names a method, carries optional parameters, and includes an id; the matching response echoes that id and contains either a result or an error. The protocol is transport-agnostic, so the same messages can travel over stdio, HTTP, TCP, or WebSockets. This simplicity and flexibility make it a good fit for MCP, giving LLMs and external tools a reliable communication layer.
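A minimal sketch of the JSON-RPC 2.0 message shapes in Python, using only the standard library. The helper names (build_request, build_result, build_error, unwrap_response) are hypothetical; the "jsonrpc", "id", "method", "params", "result", and "error" fields and the numeric error codes come from the JSON-RPC 2.0 specification.

```python
import json
from itertools import count
from typing import Any, Optional

# Standard JSON-RPC 2.0 error codes.
PARSE_ERROR = -32700
INVALID_REQUEST = -32600
METHOD_NOT_FOUND = -32601
INVALID_PARAMS = -32602
INTERNAL_ERROR = -32603

_next_id = count(1)  # a simple counter keeps request ids unique within a session


def build_request(method: str, params: Optional[dict] = None) -> dict:
    """A request names a method, may carry params, and has an id for matching."""
    message = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        message["params"] = params
    return message


def build_result(request_id: Any, result: Any) -> dict:
    """A successful response echoes the request id and carries a result."""
    return {"jsonrpc": "2.0", "id": request_id, "result": result}


def build_error(request_id: Any, code: int, message: str) -> dict:
    """A failed response echoes the id and carries an error object instead."""
    return {"jsonrpc": "2.0", "id": request_id,
            "error": {"code": code, "message": message}}


def unwrap_response(response: dict) -> Any:
    """Return the result, or raise if the response carries an error."""
    if "error" in response:
        error = response["error"]
        raise RuntimeError(f"JSON-RPC error {error['code']}: {error['message']}")
    return response["result"]


# Round trip through JSON text, as a reply would travel over any transport.
request = build_request("tools/list")
wire = json.dumps(build_result(request["id"], {"tools": []}))
print(unwrap_response(json.loads(wire)))  # {'tools': []}
```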

How LLMs Use MCP Tools

When an LLM gets a request from a user, it follows a reasoning process that looks something like this:

- Intent Recognition: Understand what the user wants in natural language.

- Tool Matching: Find the right MCP tool that can handle that request.

- Parameter Extraction: Convert the user’s request into structured JSON parameters.

- Tool Invocation: Issue a call to the MCP tool with those parameters, which the MCP client sends to the server as a JSON-RPC "tools/call" request.

- Response Integration: Take the tool’s response and blend it into the final answer for the user.
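On the receiving side of the Tool Invocation step, an MCP server reads a "tools/call" request, runs the named tool, and replies. The sketch below assumes an in-process registry and a hypothetical fake_make_request that does no real HTTP. It follows the MCP convention of reporting an unknown tool as a JSON-RPC error, but a tool that fails while running as a normal result with "isError" set.

```python
import json
from typing import Callable, Dict


def fake_make_request(url: str, method: str = "GET") -> str:
    """Hypothetical stand-in for a real HTTP tool; performs no network I/O."""
    return f"{method} {url} -> 200 OK"


# Registry mapping tool names to Python callables (an assumption of this sketch).
TOOLS: Dict[str, Callable[..., str]] = {"make_request": fake_make_request}


def error_reply(request_id, code: int, message: str) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": request_id,
                       "error": {"code": code, "message": message}})


def handle_message(raw: str) -> str:
    """Handle one JSON-RPC "tools/call" request and return the JSON reply.

    Notifications (messages without an id) are not handled in this sketch.
    """
    try:
        request = json.loads(raw)
    except json.JSONDecodeError:
        return error_reply(None, -32700, "Parse error")
    if not isinstance(request, dict):
        return error_reply(None, -32600, "Invalid Request")
    request_id = request.get("id")
    if request.get("method") != "tools/call":
        return error_reply(request_id, -32601, "Method not found")
    params = request.get("params") or {}
    tool = TOOLS.get(params.get("name"))
    if tool is None:
        # Unknown tool: reported as a protocol-level error.
        return error_reply(request_id, -32602, f"Unknown tool: {params.get('name')}")
    try:
        text = tool(**(params.get("arguments") or {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    except Exception as exc:
        # The tool ran but failed (including bad arguments): report it as a
        # result so the LLM can see the error and try again.
        result = {"content": [{"type": "text", "text": str(exc)}], "isError": True}
    return json.dumps({"jsonrpc": "2.0", "id": request_id, "result": result})


print(handle_message(json.dumps({
    "jsonrpc": "2.0", "id": 1, "method": "tools/call",
    "params": {"name": "make_request",
               "arguments": {"url": "https://api.example.com/posts"}},
})))
```

Keeping tool failures inside the result, rather than turning them into protocol errors, means the model sees what went wrong and can adjust its next call.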

Example

For example, if a user asks "Get the latest posts from https://api.example.com/posts", the LLM would:

- Identify the intent as fetching data from a URL

- Match the request to the "make_request" tool

- Extract the parameters (url, method, etc.)

- Invoke the tool with these parameters

- Format the response for the user
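The same example written out as data: a sketch of the "tools/call" request the client would send and a hypothetical reply. The argument values come from the user's question; the post titles in the reply are invented for illustration, and summarise_posts only stands in for the final step, which the LLM would normally do in prose.

```python
import json

# The tool call the LLM settles on, wrapped by the client as a JSON-RPC request.
request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {
        "name": "make_request",
        "arguments": {"url": "https://api.example.com/posts", "method": "GET"},
    },
}

# Hypothetical reply: the server returns the HTTP body as a text content block.
# These posts are invented for illustration.
response = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "content": [{
            "type": "text",
            "text": json.dumps([{"id": 2, "title": "Second post"},
                                {"id": 1, "title": "First post"}]),
        }],
        "isError": False,
    },
}


def summarise_posts(reply: dict) -> str:
    """Stand-in for the final step: turn raw tool output into a short answer."""
    posts = json.loads(reply["result"]["content"][0]["text"])
    titles = "\n".join(f"- {post['title']}" for post in posts)
    return f"Found {len(posts)} posts:\n{titles}"


assert response["id"] == request["id"]  # replies are matched to requests by id
print(summarise_posts(response))
```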

How the LLM “Thinks” When Using MCP Tools

The LLM follows a structured reasoning process that turns natural language into actionable commands and interprets the results. This process includes:

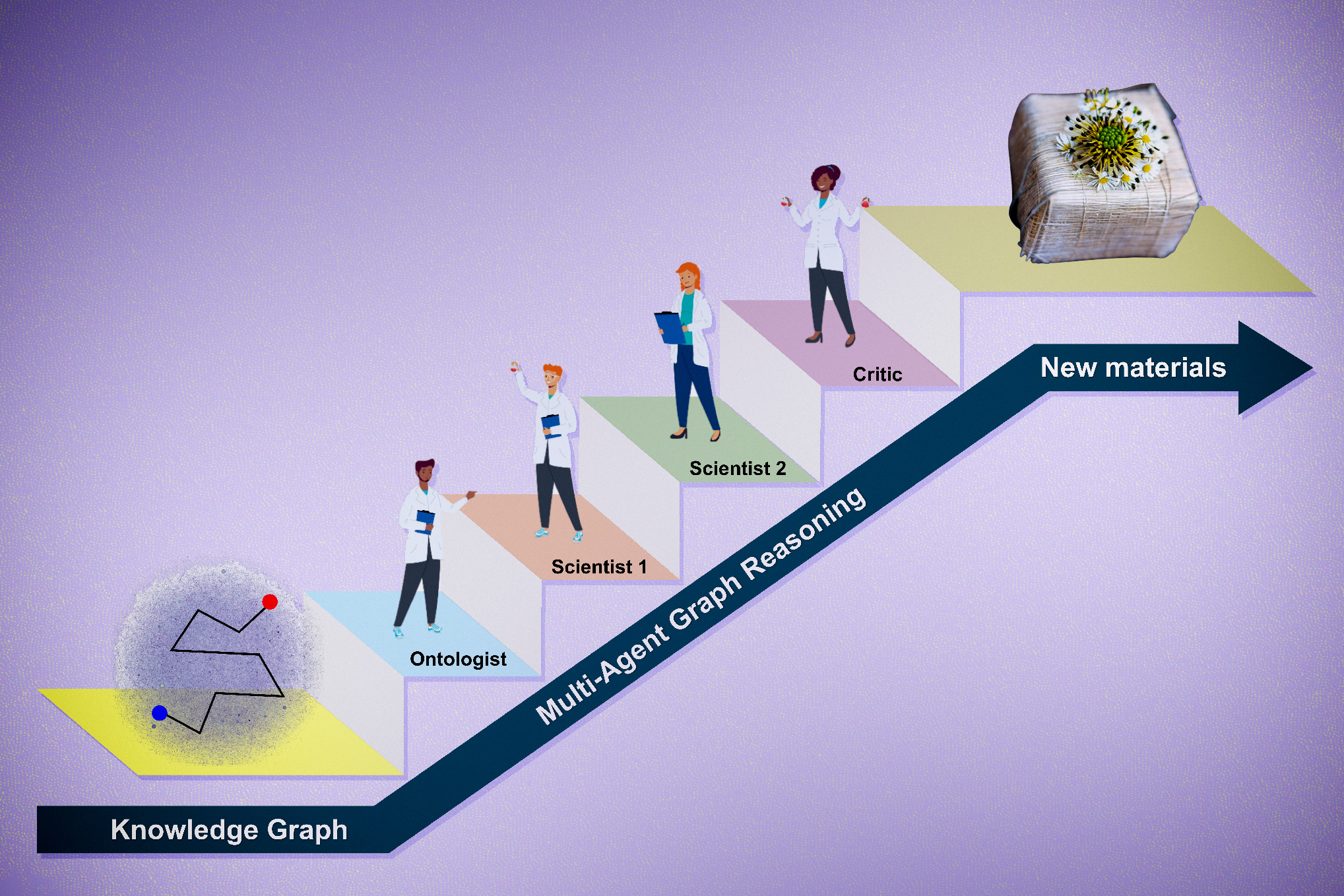

0. Tool Discovery Phase (Initial Connection)

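When the client first connects, it initialises a session with the MCP server and requests the list of available tools. Each tool's name, description, and input schema is then made available to the LLM.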
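In MCP, discovery happens after a short handshake: the client sends "initialize", then the "notifications/initialized" notification, then "tools/list", following "nextCursor" if the server paginates its tool list. This is a sketch of those messages; the protocol version string and client name are example values, and send stands for whatever transport the client uses (here a fake in-memory server whose tools are hypothetical and trimmed to names only).

```python
from typing import Callable, Dict, List

# 1. Client -> server: open the session and negotiate a protocol version.
initialize = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "initialize",
    "params": {
        "protocolVersion": "2025-06-18",  # example revision; use one your SDK supports
        "capabilities": {},
        "clientInfo": {"name": "example-client", "version": "0.1.0"},  # hypothetical
    },
}

# 2. Client -> server: a notification (no id, so no reply) once setup is done.
initialized = {"jsonrpc": "2.0", "method": "notifications/initialized"}


# 3. Client -> server: list tools, following nextCursor until the last page.
def list_all_tools(send: Callable[[dict], dict], first_id: int = 2) -> List[dict]:
    tools, cursor, request_id = [], None, first_id
    while True:
        request = {"jsonrpc": "2.0", "id": request_id, "method": "tools/list"}
        if cursor is not None:
            request["params"] = {"cursor": cursor}
        result = send(request)["result"]
        tools.extend(result["tools"])
        cursor = result.get("nextCursor")
        if cursor is None:
            return tools
        request_id += 1


# Fake in-memory server returning its (hypothetical) tools in two pages.
PAGES: Dict[object, dict] = {
    None: {"tools": [{"name": "make_request"}], "nextCursor": "page-2"},
    "page-2": {"tools": [{"name": "read_file"}]},
}


def fake_send(request: dict) -> dict:
    cursor = request.get("params", {}).get("cursor")
    return {"jsonrpc": "2.0", "id": request["id"], "result": PAGES[cursor]}


print([tool["name"] for tool in list_all_tools(fake_send)])  # ['make_request', 'read_file']
```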

1. Natural Language Understanding

The LLM identifies what the user wants and matches it to a tool.
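Matching is done by the model itself, using the names and descriptions from discovery. Purely to show the shape of the decision, here is a naive stand-in that scores each tool description by word overlap with the request. The catalogue, descriptions, and stop-word list are hypothetical, and a real LLM matches on meaning rather than shared words.

```python
import re
from typing import Dict, Optional, Set

# Hypothetical catalogue (name -> description), as an LLM might see it after discovery.
TOOLS = {
    "make_request": "Get or post data at an HTTP or HTTPS URL, such as a web API",
    "read_file": "Read the contents of a file on the local disk",
}

STOP_WORDS = {"a", "an", "the", "of", "on", "at", "from", "to", "or", "and", "such", "as"}


def words(text: str) -> Set[str]:
    """Lower-case word set with common filler words removed."""
    return set(re.findall(r"[a-z]+", text.lower())) - STOP_WORDS


def match_tool(user_message: str, tools: Dict[str, str]) -> Optional[str]:
    """Return the tool whose description overlaps most with the message, if any."""
    query = words(user_message)
    best_name, best_score = None, 0
    for name, description in tools.items():
        score = len(query & words(description))
        if score > best_score:  # ties keep the earlier tool
            best_name, best_score = name, score
    return best_name


print(match_tool("Get the latest posts from https://api.example.com/posts", TOOLS))
# make_request
```

Returning None when nothing overlaps mirrors the case where the model decides no tool fits and answers directly.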

2. Parameter Extraction

The LLM converts the user’s request into structured parameters.
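Parameter extraction is likewise the model's job, but a rule-based stand-in shows what it has to produce: arguments that fit the tool's schema. This sketch assumes the hypothetical make_request tool takes "url" and "method", pulls the first URL out of the message, and guesses the HTTP method from a hypothetical keyword list.

```python
import re

# First http(s) URL in the text; stops at whitespace, quotes, or angle brackets.
URL_PATTERN = re.compile(r"https?://[^\s\"'<>]+")
POST_HINTS = ("create", "submit", "send")  # hypothetical keyword list


def extract_make_request_args(user_message: str) -> dict:
    """Build arguments for the hypothetical make_request tool from plain text."""
    match = URL_PATTERN.search(user_message)
    if match is None:
        # A real assistant would ask the user for the missing URL instead.
        raise ValueError("no URL found in the request")
    url = match.group(0).rstrip(".,;:!?)")  # drop trailing sentence punctuation
    lowered = user_message.lower()
    method = "POST" if any(hint in lowered for hint in POST_HINTS) else "GET"
    return {"url": url, "method": method}


print(extract_make_request_args("Get the latest posts from https://api.example.com/posts"))
# {'url': 'https://api.example.com/posts', 'method': 'GET'}
```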

3. JSON Construction

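The tool name and parameters are packaged into a JSON-RPC request. In practice, the LLM produces the tool name and arguments, and the MCP client wraps them in a "tools/call" request to the server.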
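A sketch of the JSON construction step in Python. It wraps a tool name and arguments in a "tools/call" request and frames it for the stdio transport, where each message is one line of JSON followed by a newline. The function name encode_tools_call is hypothetical.

```python
import json


def encode_tools_call(request_id: int, name: str, arguments: dict) -> str:
    """Wrap a tool call in a JSON-RPC request, framed as one stdio line.

    MCP's stdio transport delimits messages with newlines, so the JSON itself
    must be on a single line; json.dumps escapes any newlines inside strings.
    """
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }
    return json.dumps(message, separators=(",", ":")) + "\n"


line = encode_tools_call(1, "make_request", {"url": "https://api.example.com/posts"})
print(line, end="")
# {"jsonrpc":"2.0","id":1,"method":"tools/call","params":{"name":"make_request","arguments":{"url":"https://api.example.com/posts"}}}
```

On the server side, reading one line and passing it to json.loads recovers the same dictionary.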

4. Result Interpretation

Once the server has run the tool and sent back its result, the LLM interprets the response and prepares a coherent explanation.
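When the reply arrives, there are three cases to tell apart: a protocol error (the call never ran), a result flagged with "isError" (the tool ran but failed), and a normal result. A minimal sketch that collects the text content blocks and skips non-text blocks such as images; the wording of the returned messages is arbitrary.

```python
def interpret_result(response: dict) -> str:
    """Turn a tools/call reply into text the LLM can reason about."""
    if "error" in response:
        # Protocol-level failure: the call never ran (e.g. unknown tool).
        error = response["error"]
        return f"The tool could not be called ({error['code']}): {error['message']}"
    result = response["result"]
    # Keep text blocks only; images, audio, and resources are skipped here.
    texts = [block["text"] for block in result.get("content", [])
             if block.get("type") == "text"]
    body = "\n".join(texts)
    if result.get("isError"):
        # The tool ran but reported a failure; surface it rather than hide it.
        return f"The tool reported an error: {body}"
    return body


print(interpret_result({"jsonrpc": "2.0", "id": 1, "result": {
    "content": [{"type": "text", "text": "connection timed out"}], "isError": True}}))
# The tool reported an error: connection timed out
```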

5. User-Friendly Output

The LLM presents a clear, user-friendly answer to the original request.

Wrapping Up

In short, MCP gives AI assistants a standard, JSON-RPC-based way to discover and call external tools. Understanding both the protocol and how an LLM moves from a user's request to a tool call and back makes it easier to build assistants that use tools reliably.

What’s Next

In the next part, we will dive into the technical side of building an MCP server: designing a modular and scalable architecture, handling discovery, requests, and responses, and connecting the server to MCP clients such as Cursor or Claude Desktop.

Conclusion

MCP lets LLMs work with external tools in a structured and predictable way. Because it is a shared standard, a tool exposed once through an MCP server can be used by any MCP-compatible client, instead of being re-integrated for each assistant.

FAQs

- What is the Model Context Protocol (MCP)?: MCP is a standard way for AI assistants to talk to external tools.

- What is JSON-RPC 2.0?: JSON-RPC 2.0 is a lightweight remote procedure call (RPC) protocol that uses JSON to format requests and responses.

- How do LLMs use MCP tools?: LLMs use MCP tools by following a structured reasoning process that turns natural language into actionable commands and interprets the results.

- What are the benefits of using MCP?: MCP provides a simple, reliable, and flexible communication layer between LLMs and external tools. Because it is a standard, a tool written once as an MCP server can be reused by any MCP-compatible assistant.