Introduction to Large Language Models

Researchers from MIT, Northeastern University, and Meta recently released a paper suggesting that large language models (LLMs) similar to those that power ChatGPT may sometimes prioritize sentence structure over meaning when answering questions. The findings reveal a weakness in how these models process instructions, one that may shed light on why some prompt injection or jailbreaking approaches work. The researchers caution, however, that their analysis of some production models remains speculative, since training data details of prominent commercial AI models are not publicly available.

Understanding the Research

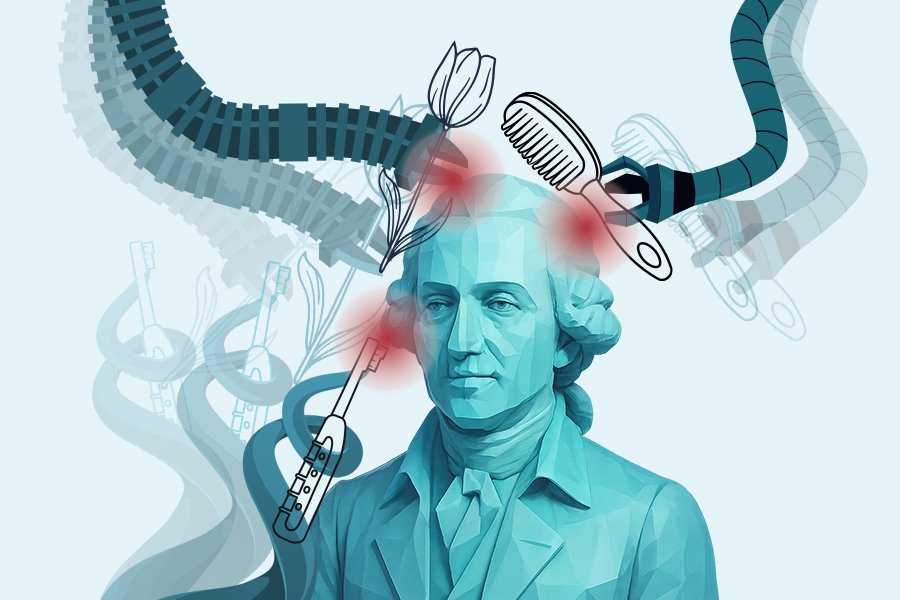

The team, led by Chantal Shaib and Vinith M. Suriyakumar, tested this by asking models questions that preserved a familiar grammatical pattern but replaced the words with nonsensical ones. For example, when prompted with “Quickly sit Paris clouded?” (mimicking the structure of “Where is Paris located?”), models still answered “France.” This suggests that models absorb both meaning and syntactic patterns but can over-rely on structural shortcuts when those patterns correlate strongly with specific domains in the training data. In edge cases, the pattern can then override semantic understanding.

Syntax vs. Semantics

As a refresher, syntax describes sentence structure: how words are arranged grammatically and what parts of speech they use. Semantics describes the actual meaning those words convey, which can vary even when the grammatical structure stays the same. Semantics depends heavily on context, and navigating context is what makes LLMs work. Turning an input (your prompt) into an output (the LLM's answer) involves a complex chain of pattern matching against encoded training data.
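To make the distinction concrete, the following minimal Python sketch reduces the two example prompts quoted earlier to a sequence of coarse part-of-speech tags. The lexicon `TOY_LEXICON`, the tag names, and the helper `pos_template` are assumptions made for illustration; they are not taken from the paper, and a real analysis would use a trained tagger rather than a hand-labelled dictionary.

```python
# Hand-labelled, coarse part-of-speech tags for illustration only.
# This lexicon is an assumption; a real study would use a trained tagger.
TOY_LEXICON = {
    "where": "ADV", "quickly": "ADV",
    "is": "VERB", "sit": "VERB", "located": "VERB", "clouded": "VERB",
    "paris": "PROPN",
}

def pos_template(sentence: str) -> tuple:
    """Return the sequence of coarse POS tags for a sentence."""
    words = sentence.rstrip("?.!").lower().split()
    return tuple(TOY_LEXICON[w] for w in words)

real = pos_template("Where is Paris located?")
nonsense = pos_template("Quickly sit Paris clouded?")

print(real)              # the shared grammatical template
print(real == nonsense)  # same syntax, very different semantics
```

Under this toy tagging, both prompts collapse to the same template even though only one of them means anything, which is the kind of overlap a model could exploit as a shortcut.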

The Experiment

To investigate when and how this pattern-matching can go wrong, the researchers designed a controlled experiment. They created a synthetic dataset by designing prompts in which each subject area had a unique grammatical template based on part-of-speech patterns. For instance, geography questions followed one structural pattern while questions about creative works followed another. They then trained Allen AI’s Olmo models on this data and tested whether the models could distinguish between syntax and semantics.
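The dataset design described above might look roughly like the following Python sketch, in which each subject area is tied to a single part-of-speech template and prompts are produced by filling the slots. The templates, vocabulary, and function names here are hypothetical stand-ins chosen for illustration; the paper's actual templates, word lists, and training setup are not reproduced.

```python
import random

# Hypothetical templates: one POS pattern per subject area.
DOMAIN_TEMPLATES = {
    "geography": ("ADV", "VERB", "PROPN", "VERB"),
    "creative_works": ("PRON", "VERB", "DET", "NOUN"),
}

# Hypothetical vocabulary grouped by POS tag.
VOCAB = {
    "ADV": ["where", "quickly", "softly"],
    "VERB": ["is", "sit", "located", "clouded", "wrote"],
    "PROPN": ["Paris", "Lima", "Oslo"],
    "PRON": ["who", "they"],
    "DET": ["the", "this"],
    "NOUN": ["novel", "poem", "film"],
}

def fill_template(template, rng):
    """Draw one word per POS slot, ignoring meaning entirely."""
    return " ".join(rng.choice(VOCAB[tag]) for tag in template) + "?"

def make_prompts(n_per_domain, seed=0):
    """Build labelled prompts whose structure alone identifies the domain."""
    rng = random.Random(seed)
    return [
        {"domain": domain, "prompt": fill_template(template, rng)}
        for domain, template in DOMAIN_TEMPLATES.items()
        for _ in range(n_per_domain)
    ]

for row in make_prompts(2):
    print(row)
```

Because every prompt in a domain shares one template, a model trained on data like this could learn to infer the domain from structure alone. That is the kind of correlation the experiment was designed to probe.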

Conclusion

The study’s findings indicate that large language models can prioritize sentence structure over meaning, which may lead to incorrect or nonsensical answers in certain cases. The researchers plan to present these findings at NeurIPS later this month. This research highlights the importance of understanding how LLMs process language and the need for further development to improve their ability to distinguish between syntax and semantics.

FAQs

- Q: What are large language models (LLMs)?

A: LLMs are artificial intelligence models that process and generate human-like language.

- Q: What is the difference between syntax and semantics?

A: Syntax refers to the structure of sentences, while semantics refers to the meaning of the words and phrases.

- Q: Why do LLMs sometimes prioritize syntax over semantics?

A: LLMs may prioritize syntax over semantics when they are trained on data that strongly correlates specific domains with certain grammatical patterns, leading to overreliance on structural shortcuts.

- Q: What are the implications of this research?

A: The research highlights the need for further development of LLMs to improve their ability to distinguish between syntax and semantics, leading to more accurate and meaningful responses.