Introduction to Perforated Backpropagation

Perforated Backpropagation is an optimization technique that brings a long-overdue update to the standard artificial neuron, a model that still rests on neuroscience from 1943. The new neuron adds artificial dendrites, paralleling the computational power that dendrites give neurons in biological systems.

What is Perforated Backpropagation?

Perforated Backpropagation introduces a new type of artificial neuron that uses artificial dendrites to increase the computational power of each neuron in an artificial neural network. The technique can be used to improve model accuracy or to compress models, making them more efficient.

Step-by-Step Guide to Implementing Perforated Backpropagation

To implement Perforated Backpropagation in your PyTorch training pipeline, follow these steps:

Step 1: Install the Package

The first step is to install the package using pip:

pip install perforatedai

Step 2: Add Imports

Add the necessary imports to the top of your training script:

from perforatedai import pb_globals as PBG

from perforatedai import pb_models as PBM

from perforatedai import pb_utils as PBU

Step 3: Convert Modules to Add Artificial Dendrites

Wrap the modules in your model so that artificial dendrites can be added to them:

model = Net().to(device)

model = PBU.initializePB(model)

Step 4: Set Up the Optimizer and Scheduler

Instead of constructing the optimizer and scheduler directly, pass their classes and arguments to the Perforated Backpropagation tracker object, which sets them up for you:

import torch.optim as optim

from torch.optim.lr_scheduler import StepLR

PBG.pbTracker.setOptimizer(optim.Adadelta)

PBG.pbTracker.setScheduler(StepLR)

optimArgs = {'params': model.parameters(), 'lr': args.lr}

schedArgs = {'step_size': 1, 'gamma': args.gamma}

optimizer, scheduler = PBG.pbTracker.setupOptimizer(model, optimArgs, schedArgs)

Step 5: Add Validation Scores

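After each validation pass, report the validation score to the Perforated Backpropagation tracker object. The call returns the model along with two flags, restructured and trainingComplete: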

model, restructured, trainingComplete = PBG.pbTracker.addValidationScore(100. * correct / len(test_loader.dataset), model)

Running Your First Experiment

With these steps in place, you are ready to run your first experiment. The system will add three Dendrites and then inform you that no problems were detected.
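To see how the Step 5 call might sit inside a training loop, here is a minimal, self-contained sketch. It does not import perforatedai. StubTracker is a hypothetical stand-in that copies only the three-value return shape of addValidationScore shown in Step 5. Its plateau logic, the patience and max_restructures parameters, and the reading of the two flags (restructured meaning the model changed and the optimizer should be rebuilt, trainingComplete meaning the loop should stop) are assumptions made for illustration, not documented library behavior.

```python
class StubTracker:
    """Hypothetical stand-in for PBG.pbTracker (NOT the perforatedai API).

    It only mimics the (model, restructured, trainingComplete) return
    shape so the control flow of the loop below can be run and tested.
    """

    def __init__(self, patience=2, max_restructures=3):
        self.best = float("-inf")
        self.stale = 0                 # epochs since the last improvement
        self.restructures = 0          # how many times we "restructured"
        self.patience = patience
        self.max_restructures = max_restructures

    def addValidationScore(self, score, model):
        if score > self.best:
            self.best = score
            self.stale = 0
            return model, False, False
        self.stale += 1
        if self.stale < self.patience:
            return model, False, False
        # The score has plateaued: restructure, or finish if out of budget.
        self.stale = 0
        if self.restructures >= self.max_restructures:
            return model, False, True
        self.restructures += 1
        return model, True, False


def run_training(validation_scores, tracker):
    """Feed one validation score per epoch to the tracker and react to its flags."""
    model = object()          # placeholder for a real model
    optimizer_rebuilds = 0
    epochs_run = 0
    for score in validation_scores:
        epochs_run += 1
        model, restructured, trainingComplete = tracker.addValidationScore(score, model)
        if trainingComplete:
            break
        if restructured:
            # Assumption: a real script would call setupOptimizer again here,
            # because the model's parameters may have changed.
            optimizer_rebuilds += 1
    return epochs_run, optimizer_rebuilds
```

In a real script, the scores would come from your validation function rather than a fixed list, and the stub would be replaced by the tracker object from Step 4.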

Using Dendrites for Compression

Perforated Backpropagation can also be used for model compression. By adding Dendrites to a model and reducing its size, you can obtain a smaller model that maintains its accuracy.

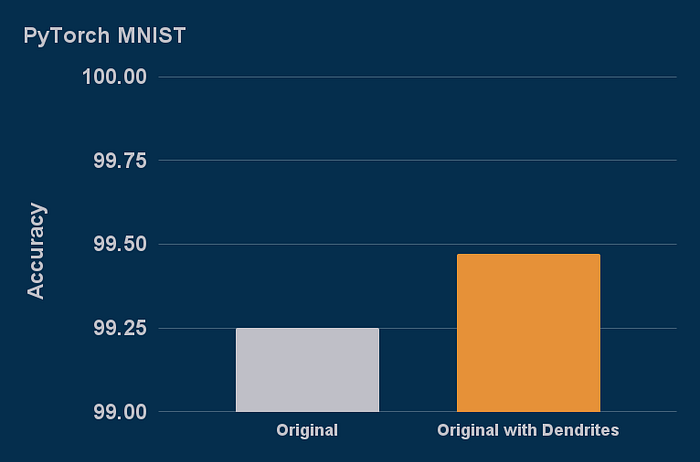

Example Use Case: MNIST Model

The MNIST model was used as an example to demonstrate the effectiveness of Perforated Backpropagation. The results showed a 29% reduction in the remaining error of the MNIST model.
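A reduction in remaining error is measured relative to the baseline error rate, not to the accuracy itself. The short sketch below works through that arithmetic. The 99.0% baseline accuracy is a hypothetical figure chosen for illustration, since the article does not state the MNIST model's baseline.

```python
def accuracy_after_error_reduction(baseline_accuracy_pct, relative_reduction):
    """Return the accuracy (in %) after cutting the remaining error by a relative fraction."""
    baseline_error_pct = 100.0 - baseline_accuracy_pct
    new_error_pct = baseline_error_pct * (1.0 - relative_reduction)
    return 100.0 - new_error_pct


# Hypothetical baseline of 99.0% accuracy (1.0% error), reduced by 29%.
new_accuracy = accuracy_after_error_reduction(99.0, 0.29)
print(f"{new_accuracy:.2f}%")  # prints "99.29%"
```

Under that assumed baseline, the error falls from 1.00% to 0.71%, so a 29% reduction in remaining error corresponds to a gain of 0.29 percentage points in accuracy.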

Conclusion

Perforated Backpropagation is a powerful technique that can be used to improve the accuracy and efficiency of artificial neural networks. By following the steps outlined in this article, you can implement Perforated Backpropagation in your PyTorch training pipeline and start seeing improvements in your models.

FAQs

Q: What is Perforated Backpropagation?

A: Perforated Backpropagation is an optimization technique that uses artificial dendrites to improve the computation power of neurons in artificial neural networks.

Q: How do I implement Perforated Backpropagation in my PyTorch training pipeline?

A: Follow the steps outlined in this article to implement Perforated Backpropagation in your PyTorch training pipeline.

Q: Can Perforated Backpropagation be used for model compression?

A: Yes, Perforated Backpropagation can be used for model compression by adding Dendrites to a model and reducing its size while maintaining its accuracy.

Q: What are the benefits of using Perforated Backpropagation?

A: The benefits of using Perforated Backpropagation include improved accuracy, increased efficiency, and model compression.