Introduction to Spatial Speech Translation

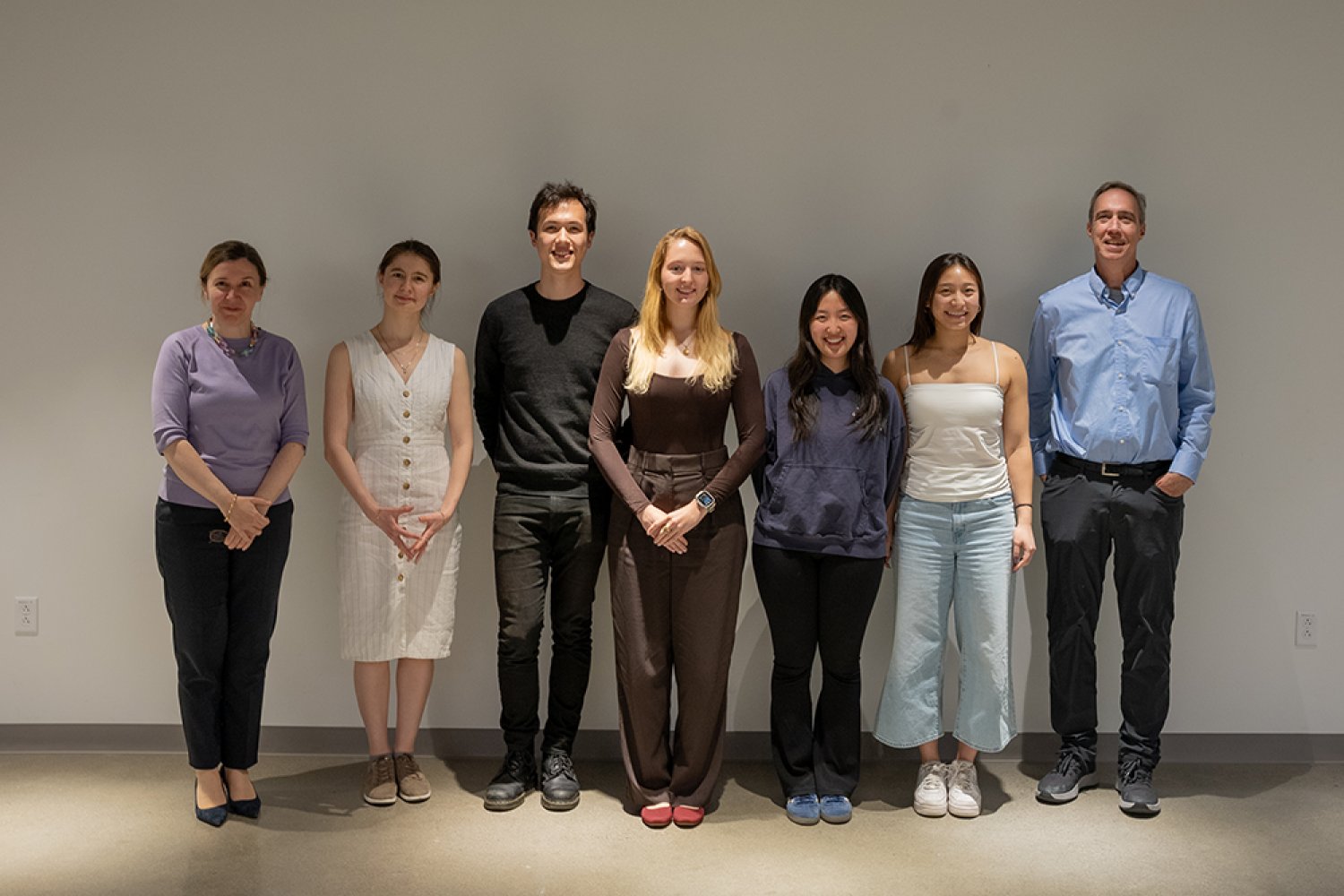

Spatial Speech Translation is a system that combines two AI models to translate speech in close to real time, so that people who speak different languages can communicate through a pair of headphones. The first model uses a neural network to divide the space surrounding the person wearing the headphones into small regions and pinpoint the direction of potential speakers.
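
The idea of dividing the surrounding space into regions can be illustrated with a minimal sketch. It assumes the space is split into equal angular sectors around the wearer and that some upstream model has already produced one "speaker likelihood" score per sector. The function names, the scores, and the threshold are hypothetical, and the real system uses a neural network rather than a fixed threshold.

```python
def sector_index(azimuth_deg: float, num_sectors: int) -> int:
    """Return the index of the angular sector that contains the given azimuth."""
    width = 360.0 / num_sectors
    # The final modulo guards against rounding at the 360-degree boundary.
    return int((azimuth_deg % 360.0) // width) % num_sectors


def sector_center(index: int, num_sectors: int) -> float:
    """Return the azimuth (in degrees) at the middle of a sector."""
    width = 360.0 / num_sectors
    return (index + 0.5) * width


def likely_speaker_directions(scores, threshold=0.5):
    """Return the center azimuth of every sector whose score exceeds the threshold.

    `scores` is a hypothetical per-sector likelihood list; in the described
    system a neural network would play this role.
    """
    n = len(scores)
    return [sector_center(i, n) for i, s in enumerate(scores) if s > threshold]


# Four 90-degree sectors with made-up scores: two of them look like speakers.
scores = [0.1, 0.9, 0.2, 0.7]
print(likely_speaker_directions(scores))  # [135.0, 315.0]
```

Smaller sectors give finer direction estimates at the cost of more regions to score.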

How it Works

The second model then translates the speakers’ words from French, German, or Spanish into English text, drawing on publicly available data sets. It also extracts the distinctive characteristics and emotional tone of each speaker’s voice, such as pitch and amplitude, and applies those properties to the spoken translation. The result is a "cloned" voice that sounds like the speaker’s own rather than a robotic computer voice. When the translated version of a speaker’s words is relayed to the headphone wearer a few seconds later, it sounds as if it is coming from the speaker’s direction.
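
How a translated voice can be made to "come from" a direction is not detailed here. As a rough illustration only, the sketch below uses constant-power stereo panning, a much simpler stand-in technique than whatever the actual system does. The function names and the azimuth convention (-90 is fully left, +90 is fully right) are assumptions made for this example.

```python
import math


def pan_gains(azimuth_deg: float):
    """Constant-power left/right gains for an azimuth in [-90, 90] degrees.

    -90 is fully left, 0 is straight ahead, +90 is fully right (assumed convention).
    """
    clamped = max(-90.0, min(90.0, azimuth_deg))
    # Map the azimuth onto a quarter circle so that left^2 + right^2 == 1.
    theta = (clamped + 90.0) / 180.0 * (math.pi / 2.0)
    return math.cos(theta), math.sin(theta)


def render_stereo(mono_samples, azimuth_deg: float):
    """Turn mono samples into (left, right) pairs panned toward the azimuth."""
    left, right = pan_gains(azimuth_deg)
    return [(s * left, s * right) for s in mono_samples]


# A speaker 45 degrees to the wearer's right is louder in the right channel.
left_gain, right_gain = pan_gains(45.0)
print(left_gain < right_gain)  # True
```

Because the two gains always satisfy left² + right² = 1, the perceived loudness stays roughly constant as the source moves from one side to the other.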

Challenges and Limitations

Separating out human voices is hard enough for AI systems on its own. Incorporating that ability into a real-time translation system, mapping the distance between the wearer and the speaker, and achieving decent latency on a real device is therefore impressive. However, experts note that the system’s performance is limited by the quality and quantity of its training data. A real product would need much more training data, possibly including noise and real-world recordings from the headset, rather than relying purely on synthetic data.

Future Developments

The team behind Spatial Speech Translation is now focusing on reducing the latency of the AI translation, which would allow more natural-sounding conversations between people speaking different languages. The goal is to bring the latency below one second so that exchanges feel conversational. However, reducing the latency could make the translations less accurate: the longer the system waits before translating, the more context it has and the better the translation will be.
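
The trade-off between latency and context can be made concrete with the "wait-k" policy from the simultaneous translation literature: wait for k source words, then emit one target word for each additional source word read. Nothing here says the team uses this policy; it serves only as a simple model of the trade-off. The speech rate in the example is an assumed figure.

```python
def wait_k_schedule(num_source_words: int, num_target_words: int, k: int):
    """Number of source words available when each target word is emitted."""
    return [min(k + t, num_source_words) for t in range(num_target_words)]


def average_context(schedule):
    """Average number of source words seen per emitted target word."""
    return sum(schedule) / len(schedule)


def initial_delay_seconds(k: int, words_per_second: float) -> float:
    """Time spent listening before the first translated word can be emitted."""
    return k / words_per_second


# A six-word sentence translated into six words, at an assumed 2.5 words per second.
for k in (1, 3):
    schedule = wait_k_schedule(6, 6, k)
    print(k, schedule, average_context(schedule), initial_delay_seconds(k, 2.5))
```

With k = 1 the translator starts almost immediately but sees on average 3.5 source words per output word; with k = 3 it starts later but sees on average 5.0, which is the context-for-latency exchange described above.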

Language-Specific Challenges

The speed at which an AI system can translate one language into another depends on the structure of the languages involved. For example, the system was quickest to translate French into English, followed by Spanish and then German. This is because German places a sentence’s verbs, and with them much of its meaning, at the end rather than at the beginning, which makes it harder to translate in real time.
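
A small sketch shows why verb position matters for a streaming translator. The two example sentences (both meaning "I ate an apple yesterday") and the function name are illustrative choices, not taken from the system's data.

```python
def words_heard_until_verb(words, main_verb):
    """Count how many words must be heard before the main verb has arrived."""
    return words.index(main_verb) + 1


french = ["J'ai", "mangé", "une", "pomme", "hier"]
german = ["Ich", "habe", "gestern", "einen", "Apfel", "gegessen"]

# The French main verb arrives second; the German one arrives last.
print(words_heard_until_verb(french, "mangé"))     # 2
print(words_heard_until_verb(german, "gegessen"))  # 6
```

A translator producing English, where the verb comes early, can start on the French sentence after two words but has to hear the entire German sentence first.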

Conclusion

Spatial Speech Translation could change the way people communicate across language barriers. Challenges remain, notably the limited training data and a latency of a few seconds. With further development and refinement, it could become a valuable tool for people around the world.

FAQs

- What languages does Spatial Speech Translation support? Currently, the system supports translation from French, German, and Spanish into English.

- How does the system handle background noise and multiple speakers? The system uses a neural network to separate out human voices and pinpoint the direction of potential speakers, which lets it follow more than one speaker. Handling real-world noise reliably would require more training data, possibly including noise and real-world headset recordings.

- What is the current latency of the system? Translations currently reach the wearer a few seconds after the speaker’s words, and the team is working to reduce that to less than a second.

- Can the system be used in real-world settings? While the system has shown promising results in limited testing settings, it would require more training data and refinement to be used in real-world settings.

- Is the system available for public use? The system is not currently available for public use, but it has the potential to become a widely used tool in the future.