Introduction to Ant Group’s AI Advancements

Ant Group, the operator of Alipay, has reached a milestone in artificial intelligence (AI) with the release of Ling-1T, a trillion-parameter AI model. The company positions the model as a breakthrough in balancing computational efficiency with advanced reasoning capabilities. The release marks a significant step for Ant Group, which has been rapidly building out its AI infrastructure across multiple model architectures.

Dual-Pronged Approach to AI Advancement

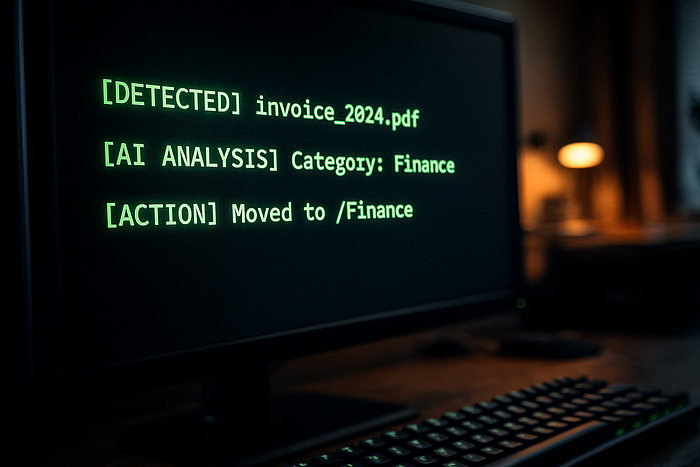

The Ling-1T release coincides with Ant Group’s launch of dInfer, a specialized inference framework engineered for diffusion language models. This parallel release strategy reflects the company’s bet on multiple technological approaches rather than a single architectural paradigm. Diffusion language models represent a departure from the autoregressive systems that underpin widely used chatbots like ChatGPT. Where autoregressive systems generate text sequentially, one token after another, diffusion models produce outputs in parallel, an approach already prevalent in image and video generation tools but less common in language processing.
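The difference between sequential and parallel generation can be illustrated with a toy step counter. This is a minimal sketch, not dInfer or any real diffusion language model: `toy_predict`, `autoregressive_decode`, and `parallel_unmask_decode` are hypothetical names, and the "model" is a deterministic lookup. Real diffusion decoders typically choose which positions to fill based on model confidence and refine over several passes, which this sketch does not attempt.

```python
# Toy illustration only: counts decoding steps, does not model real quality or speed.
VOCAB = ["the", "model", "writes", "code", "fast"]
MASK = "<mask>"


def toy_predict(position: int) -> str:
    """Hypothetical stand-in for a model: a deterministic token per position."""
    return VOCAB[position % len(VOCAB)]


def autoregressive_decode(length: int) -> tuple[list[str], int]:
    """Emit one token per step, strictly left to right."""
    tokens: list[str] = []
    steps = 0
    for pos in range(length):
        tokens.append(toy_predict(pos))
        steps += 1
    return tokens, steps


def parallel_unmask_decode(length: int, per_step: int) -> tuple[list[str], int]:
    """Start from an all-masked sequence and fill `per_step` positions per step."""
    tokens = [MASK] * length
    steps = 0
    while MASK in tokens:
        masked = [i for i, tok in enumerate(tokens) if tok == MASK]
        for i in masked[:per_step]:
            tokens[i] = toy_predict(i)
        steps += 1
    return tokens, steps


ar_tokens, ar_steps = autoregressive_decode(8)
par_tokens, par_steps = parallel_unmask_decode(8, per_step=4)
print(f"autoregressive steps: {ar_steps}, parallel steps: {par_steps}")
```

With 8 positions and 4 filled per step, the parallel loop finishes in 2 steps against 8 for the sequential loop. How much of that translates into real throughput depends on the model and the inference framework.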

Performance Metrics and Competitive Advantage

Ant Group’s reported figures for dInfer suggest substantial efficiency gains. In testing on the company’s LLaDA-MoE diffusion model, dInfer reached 1,011 tokens per second on the HumanEval coding benchmark, versus 91 tokens per second for Nvidia’s Fast-dLLM framework and 294 tokens per second for Alibaba’s Qwen-2.5-3B model running on vLLM infrastructure. Separately, Ling-1T demonstrates competitive performance on complex mathematical reasoning tasks, achieving 70.42% accuracy on the 2025 American Invitational Mathematics Examination (AIME) benchmark, a standard used to evaluate AI systems’ problem-solving abilities.
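To put the throughput figures in relative terms, the ratios can be computed directly from the numbers quoted above. The short sketch below uses only those three reported values. The `speedup` helper and the dictionary labels are illustrative names, and the comparison says nothing about hardware or test conditions, which the reported figures do not specify here.

```python
# Reported tokens-per-second figures on HumanEval, as quoted in the article.
reported_tps = {
    "dInfer (LLaDA-MoE)": 1011,
    "Fast-dLLM": 91,
    "Qwen-2.5-3B on vLLM": 294,
}


def speedup(candidate_tps: float, baseline_tps: float) -> float:
    """Ratio of candidate throughput to baseline throughput."""
    return candidate_tps / baseline_tps


dinfer = reported_tps["dInfer (LLaDA-MoE)"]
for name in ("Fast-dLLM", "Qwen-2.5-3B on vLLM"):
    ratio = speedup(dinfer, reported_tps[name])
    print(f"dInfer vs {name}: {ratio:.1f}x")
```

This works out to roughly 11.1x over Fast-dLLM and 3.4x over Qwen-2.5-3B on vLLM, based on the reported numbers alone.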

Ecosystem Expansion Beyond Language Models

Ling-1T sits within a broader family of AI systems that Ant Group has assembled over recent months. The company’s portfolio now spans three primary series: the Ling non-thinking models for standard language tasks, Ring thinking models designed for complex reasoning (including the previously released Ring-1T-preview), and Ming multimodal models capable of processing images, text, audio, and video. This diversified approach extends to an experimental model designated LLaDA-MoE, which employs a Mixture-of-Experts (MoE) architecture, a technique that activates only relevant portions of a large model for specific tasks, theoretically improving efficiency.
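The idea of activating only part of a model can be sketched with generic top-k gating, a common way to build MoE layers. The article does not describe how LLaDA-MoE routes its inputs, so everything below is an assumption for illustration: the function names, the gate scores, and the four toy "experts" (simple scaling functions) are all hypothetical.

```python
import math
from typing import Callable


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax over a list of gate scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_scores: list[float], k: int) -> list[tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalize their weights to sum to 1."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(
    x: float,
    experts: list[Callable[[float], float]],
    gate_scores: list[float],
    k: int = 2,
) -> float:
    """Run only the selected experts and combine their outputs by gate weight."""
    return sum(weight * experts[i](x) for i, weight in route_top_k(gate_scores, k))


# Four toy experts; only two of them run for any given input.
experts = [lambda x, s=s: x * s for s in (1.0, 2.0, 3.0, 4.0)]
gate_scores = [0.1, 2.0, -1.0, 1.5]  # hypothetical router output for one input

print(route_top_k(gate_scores, k=2))
print(moe_forward(1.0, experts, gate_scores, k=2))
```

Here experts 1 and 3 are selected and the other two are never called, which is where the theoretical efficiency gain comes from: compute scales with `k` rather than with the total number of experts.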

Competitive Dynamics in a Constrained Environment

The timing and nature of Ant Group’s releases illuminate strategic calculations within China’s AI sector. With access to cutting-edge semiconductor technology limited by export restrictions, Chinese technology firms have increasingly emphasized algorithmic innovation and software optimization as competitive differentiators. ByteDance, parent company of TikTok, similarly introduced a diffusion language model called Seed Diffusion Preview in July, claiming five-fold speed improvements over comparable autoregressive architectures. These parallel efforts suggest industry-wide interest in alternative model paradigms that might offer efficiency advantages.

Open-Source Strategy as Market Positioning

By making the trillion-parameter AI model publicly available alongside the dInfer framework, Ant Group is pursuing a collaborative development model that contrasts with the closed approaches of some competitors. This strategy potentially accelerates innovation while positioning Ant’s technologies as foundational infrastructure for the broader AI community. The company is simultaneously developing AWorld, a framework intended to support continual learning in autonomous AI agents—systems designed to complete tasks independently on behalf of users.

Conclusion

Ant Group’s release of Ling-1T and the dInfer framework marks a significant step in the company’s AI development efforts. With its dual-pronged approach, Ant Group is positioning itself as a major player in the global AI landscape, and its open-source strategy and collaborative development model may accelerate innovation and reinforce that position.

FAQs

- What is Ling-1T, and what makes it significant?

Ling-1T is a trillion-parameter AI model released by Ant Group and positioned as a breakthrough in balancing computational efficiency with advanced reasoning capabilities.

- What is dInfer, and how does it relate to Ling-1T?

dInfer is a specialized inference framework engineered for diffusion language models, released alongside Ling-1T. Ant Group’s reported metrics suggest substantial efficiency gains, and the framework is part of the company’s bet on multiple technological approaches.

- How does Ant Group’s AI model performance compare to others?

Ling-1T demonstrates competitive performance on complex mathematical reasoning tasks, achieving 70.42% accuracy on the 2025 American Invitational Mathematics Examination (AIME) benchmark.

- What is the significance of Ant Group’s open-source strategy?

By making Ling-1T publicly available, Ant Group is pursuing a collaborative development model that potentially accelerates innovation and positions Ant’s technologies as foundational infrastructure for the broader AI community.

- What are the potential applications of Ant Group’s AI developments?

The potential applications include customer-facing applications, autonomous AI agents, and continual learning in AI systems, among others.