Introduction to AI Reasoning Models

In early June, Apple researchers released a study suggesting that simulated reasoning (SR) models, such as OpenAI’s o1 and o3, DeepSeek-R1, and Claude 3.7 Sonnet Thinking, produce outputs consistent with pattern-matching from training data when faced with novel problems requiring systematic thinking. The results echo an April study that tested models on problems from the United States of America Mathematical Olympiad (USAMO) and found that these same models achieved low scores on novel mathematical proofs.

The Study

The new study, titled "The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity," comes from a team at Apple led by Parshin Shojaee and Iman Mirzadeh, and it includes contributions from Keivan Alizadeh, Maxwell Horton, Samy Bengio, and Mehrdad Farajtabar. The researchers examined what they call "large reasoning models" (LRMs), which attempt to simulate a logical reasoning process by producing a deliberative text output sometimes called "chain-of-thought reasoning" that ostensibly assists with solving problems in a step-by-step fashion.

Methodology

To test these models, the researchers pitted them against four classic puzzles: Tower of Hanoi (moving disks between pegs), checkers jumping (eliminating pieces), river crossing (transporting items with constraints), and blocks world (stacking blocks). They scaled each puzzle from trivially easy (like one-disk Hanoi) to extremely complex (20-disk Hanoi requiring over a million moves).
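
The "over a million moves" figure follows from a standard result: an n-disk Tower of Hanoi needs at least 2^n - 1 moves, so 20 disks need 1,048,575. The Python sketch below is an illustration, not code from the paper. It generates the optimal move sequence recursively and computes the move count, and the names `hanoi_moves` and `min_moves` are hypothetical.

```python
from typing import Iterator, Tuple

Move = Tuple[int, int]  # (source peg, destination peg), pegs numbered 0..2


def hanoi_moves(n: int, src: int = 0, dst: int = 2, aux: int = 1) -> Iterator[Move]:
    """Yield the optimal move sequence for moving n disks from src to dst."""
    if n == 0:
        return
    # Move the top n-1 disks out of the way, onto the spare peg.
    yield from hanoi_moves(n - 1, src, aux, dst)
    # Move the largest remaining disk to its destination.
    yield (src, dst)
    # Move the n-1 disks from the spare peg onto the largest disk.
    yield from hanoi_moves(n - 1, aux, dst, src)


def min_moves(n: int) -> int:
    """Minimum number of moves for an n-disk puzzle: 2^n - 1."""
    return 2 ** n - 1


if __name__ == "__main__":
    for disks in (1, 3, 10, 20):
        print(disks, "disks:", min_moves(disks), "moves")
```

Because the count doubles with every added disk, the puzzle's difficulty can be dialed up smoothly while the rules stay the same.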

Findings

"Current evaluations primarily focus on established mathematical and coding benchmarks, emphasizing final answer accuracy," the researchers write. In other words, today’s tests only care if the model gets the right answer to math or coding problems that may already be in its training data—they don’t examine whether the model actually reasoned its way to that answer or simply pattern-matched from examples it had seen before. The researchers found results consistent with the aforementioned USAMO research, showing that these same models achieved mostly under 5 percent on novel mathematical proofs, with only one model reaching 25 percent, and not a single perfect proof among nearly 200 attempts. Both research teams documented severe performance degradation on problems requiring extended systematic reasoning.

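One way to look past final-answer accuracy on a puzzle like Tower of Hanoi is to replay a proposed move list against the rules and report where it first breaks. The Python sketch below illustrates that idea under stated assumptions. It is not the researchers' evaluation code, and the function name `check_solution` and the `(source, destination)` move format are hypothetical.

```python
from typing import List, Optional, Tuple

Move = Tuple[int, int]  # (source peg, destination peg), pegs numbered 0..2


def check_solution(n: int, moves: List[Move]) -> Tuple[bool, Optional[int]]:
    """Replay moves on an n-disk puzzle that starts with all disks on peg 0.

    Returns (True, None) if every move is legal and all disks end on peg 2,
    (False, i) if move i is the first illegal move, and
    (False, None) if all moves are legal but the puzzle is left unsolved.
    """
    # Each peg is a stack; the last element is the top disk.
    pegs = [list(range(n, 0, -1)), [], []]
    for i, (src, dst) in enumerate(moves):
        valid_pegs = src in (0, 1, 2) and dst in (0, 1, 2) and src != dst
        if not valid_pegs or not pegs[src]:
            return (False, i)
        disk = pegs[src][-1]
        # A disk may not be placed on top of a smaller disk.
        if pegs[dst] and pegs[dst][-1] < disk:
            return (False, i)
        pegs[dst].append(pegs[src].pop())
    solved = pegs[2] == list(range(n, 0, -1))
    return (True, None) if solved else (False, None)


if __name__ == "__main__":
    print(check_solution(2, [(0, 1), (0, 2), (1, 2)]))  # a legal, complete solution
    print(check_solution(2, [(0, 2), (0, 2)]))          # second move breaks the rules
```

A checker of this kind scores the whole sequence of steps, so a model that states the right end state but gets there through illegal moves is not counted as correct.
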
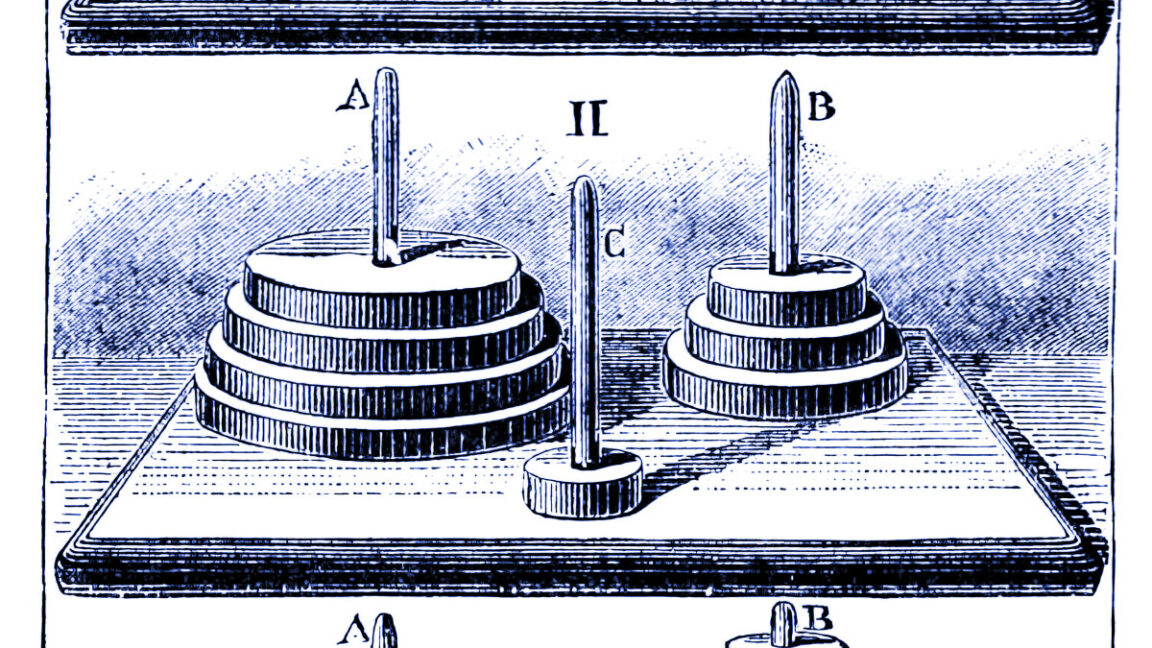

Visual Representation

Figure 1 from Apple’s "The Illusion of Thinking" research paper illustrates the concept.

<img width="1024" height="666" src="https://cdn.arstechnica.net/wp-content/uploads/2025/06/7f98178d-fd87-4e83-ba40-c44a0fd7ecbf_2166x1408-1024×666.png" class="center large" alt="Figure 1 from Apple’s &quot;The Illusion of Thinking&quot; research paper." decoding="async" loading="lazy" srcset="https://cdn.arstechnica.net/wp-content/uploads/2025/06/7f98178d-fd87-4e83-ba40-c44a0fd7ecbf_2166x1408-1024×666.png 1024w, https://cdn.arstechnica.net/wp-content/uploads/2025/06/7f98178d-fd87-4e83-ba40-c44a0fd7ecbf_2166x1408-640×416.png 640w, https://cdn.arstechnica.net/wp-content/uploads/2025/06/7f98178d-fd87-4e83-ba40-c44a0fd7ecbf_2166x1408-768×499.png 768w, https://cdn.arstechnica.net/wp-content/uploads/2025/06/7f98178d-fd87-4e83-ba40-c44a0fd7ecbf_2166x1408-1536×998.png 1536w, https://cdn.arstechnica.net/wp-content/uploads/2025/06/7f98178d-fd87-4e83-ba40-c44a0fd7ecbf_2166x1408-2048×1331.png 2048w, https://cdn.arstechnica.net/wp-content/uploads/2025/06/7f98178d-fd87-4e83-ba40-c44a0fd7ecbf_2166x1408-980×637.png 980w, https://cdn.arstechnica.net/wp-content/uploads/2025/06/7f98178d-fd87-4e83-ba40-c44a0fd7ecbf_2166x1408-1440×936.png 1440w" sizes="auto, (max-width: 1024px) 100vw, 1024px"/>

Credit: Apple

Conclusion

The Apple study suggests that current simulated reasoning models lean on pattern-matching from training data rather than systematic reasoning when problems are novel, and that their performance degrades severely on problems requiring extended systematic reasoning. Together with the USAMO results, it also points to a gap in evaluations that score only final answers on problems that may already be in a model's training data. By recognizing the strengths and limitations of these models, researchers can work toward more robust and reliable AI systems that tackle complex problems in a more systematic and logical way.

FAQs

Q: What is the main finding of the Apple study?

A: The study suggests that simulated reasoning models produce outputs consistent with pattern-matching from training data, rather than systematic reasoning, when faced with novel problems.

Q: What are the implications of this study?

A: It suggests that evaluations focused on final-answer accuracy can miss how a model arrived at its answer, since they do not examine whether the model reasoned its way there or pattern-matched from examples it had seen before.

Q: What are the limitations of current AI reasoning models?

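A: Current models perform poorly on problems that require extended systematic reasoning. In the USAMO research, for example, models scored mostly under 5 percent on novel mathematical proofs, with only one reaching 25 percent and no perfect proofs among nearly 200 attempts.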

Q: How can this study contribute to the development of more advanced AI systems?

A: By recognizing the strengths and limitations of current AI reasoning models, researchers can work towards creating more robust and reliable AI systems that can tackle complex problems in a more systematic and logical way.