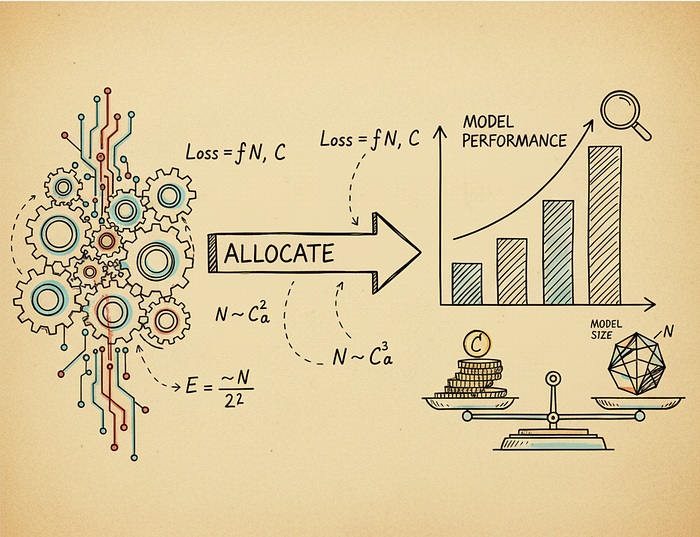

Introduction to Scaling Laws

Training a language model is expensive: a single training run for a 70-billion-parameter model can cost millions of dollars in compute. Scaling laws help practitioners decide how to split that budget between model size and training data.

What are Scaling Laws?

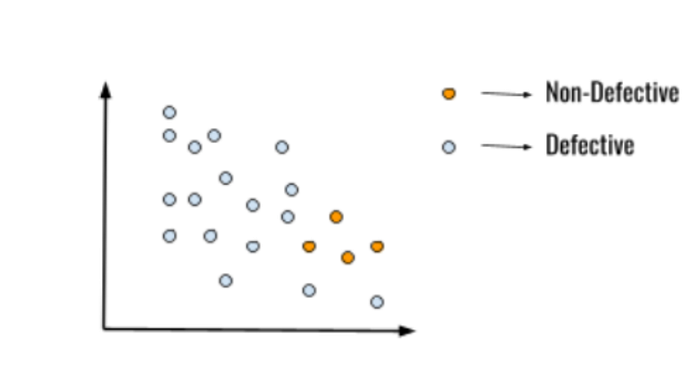

Scaling laws are empirical relationships that describe how a model’s performance changes with its size, the amount of training data, and the compute spent on training. A central finding is that, for a fixed compute budget, model size and training data should grow together rather than one growing at the expense of the other. Following these guidelines helps developers build better-performing language models while managing the trade-off between training and inference costs.

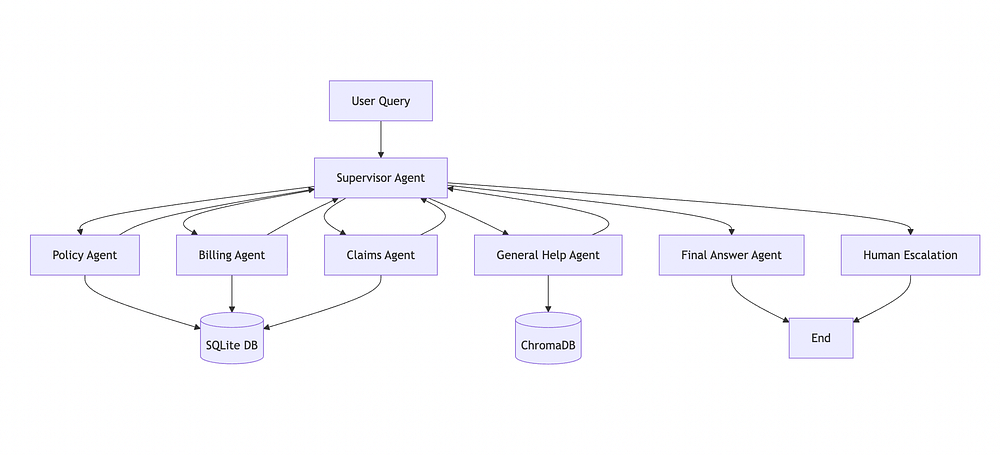

Chinchilla’s 20:1 Rule

DeepMind’s Chinchilla research found that, for a fixed compute budget, model size and training data should scale in roughly equal proportion, which works out to about 20 training tokens per parameter. This gives practitioners a concrete starting point for balancing model size and training data.
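As a rough illustration of the 20:1 rule, the sketch below estimates the compute-optimal token count for a given parameter count. The function names are hypothetical, and the training-compute estimate C ≈ 6·N·D is a commonly used approximation that is assumed here rather than taken from this article.

```python
# Rule of thumb from the 20:1 rule: about 20 training tokens per parameter.
TOKENS_PER_PARAM = 20.0


def chinchilla_optimal_tokens(n_params: float,
                              tokens_per_param: float = TOKENS_PER_PARAM) -> float:
    """Approximate compute-optimal number of training tokens for a model size."""
    return n_params * tokens_per_param


def training_flops(n_params: float, n_tokens: float) -> float:
    """Rough training compute, assuming the common C ~ 6 * N * D estimate."""
    return 6.0 * n_params * n_tokens


n_params = 70e9  # a 70-billion-parameter model, as in the introduction
n_tokens = chinchilla_optimal_tokens(n_params)

print(f"tokens: {n_tokens:.2e}")                            # tokens: 1.40e+12
print(f"FLOPs:  {training_flops(n_params, n_tokens):.2e}")  # FLOPs:  5.88e+23
```

Under these assumptions, a 70-billion-parameter model would be paired with roughly 1.4 trillion training tokens.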

SmolLM3’s 3,700:1 Ratio

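In contrast to Chinchilla’s 20:1 rule, SmolLM3 was trained at a ratio of roughly 3,700 tokens per parameter, about 185 times higher. This does not contradict Chinchilla: the 20:1 rule minimizes training compute for a given level of performance, whereas training a small model on far more data spends extra compute up front to obtain a model that is cheaper to run at inference time. The right ratio therefore depends on how the model will be used, not only on the training budget.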
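To put the two ratios side by side, this small sketch computes tokens per parameter and how far a training run sits from the 20:1 baseline. The model size and token count are illustrative values chosen to reproduce a 3,700:1 ratio; they are assumptions, not figures stated in this article.

```python
CHINCHILLA_RATIO = 20.0  # tokens per parameter, from the 20:1 rule


def tokens_per_param(n_params: float, n_tokens: float) -> float:
    """Training tokens seen per model parameter."""
    return n_tokens / n_params


def overtraining_factor(n_params: float, n_tokens: float,
                        baseline: float = CHINCHILLA_RATIO) -> float:
    """How many times the baseline ratio a training run uses."""
    return tokens_per_param(n_params, n_tokens) / baseline


# Hypothetical values picked so that the ratio comes out at 3,700:1.
n_params = 3e9
n_tokens = 11.1e12

ratio = tokens_per_param(n_params, n_tokens)
factor = overtraining_factor(n_params, n_tokens)
print(f"{ratio:,.0f} tokens per parameter, {factor:.0f}x the 20:1 baseline")
# 3,700 tokens per parameter, 185x the 20:1 baseline
```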

Balancing Model Size, Training Data, and Compute Budget

Model size, training data, and compute budget are linked: training compute grows with both the number of parameters and the number of training tokens, so for a fixed budget a larger model leaves room for fewer tokens, and vice versa. Scaling laws indicate which split gives the best performance for that budget. The choice also affects cost after training, because a larger model is more expensive to run at inference time.
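One way to make this balance concrete is to start from a compute budget and solve for a model size and token count. The sketch below assumes the common approximation C ≈ 6·N·D for training compute and a fixed tokens-per-parameter ratio (20 by default); both are assumptions for illustration, and the function name is hypothetical.

```python
import math


def compute_optimal_split(flops_budget: float,
                          tokens_per_param: float = 20.0,
                          flops_per_param_token: float = 6.0):
    """Split a training budget into (parameters, tokens).

    Assumes C = k * N * D and D = r * N, which gives N = sqrt(C / (k * r)).
    """
    n_params = math.sqrt(flops_budget / (flops_per_param_token * tokens_per_param))
    n_tokens = tokens_per_param * n_params
    return n_params, n_tokens


for budget in (1e21, 1e23):
    n, d = compute_optimal_split(budget)
    print(f"C={budget:.0e} FLOPs -> N~{n:.2e} params, D~{d:.2e} tokens")
```

Raising `tokens_per_param` in the call shifts the same budget toward a smaller model trained on more data.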

Conclusion

Scaling laws play a crucial role in the development of language models. By understanding and applying them, developers can choose a model size and dataset size that fit their compute budget, and can decide deliberately when to depart from the compute-optimal ratio to lower inference costs.

FAQs

What are scaling laws in language models?

Scaling laws are empirical relationships that describe how a model’s performance depends on model size, training data, and compute budget. Practitioners use them to balance these three factors.

Why are scaling laws important?

Training runs are expensive, so choosing the wrong model size or dataset size wastes compute. Scaling laws give a concrete guideline for balancing the two, and they make the trade-off between training cost and inference cost explicit.

How do I apply scaling laws to my language model?

Start from your compute budget and from how heavily the model will be used after training. If training cost dominates, a ratio near Chinchilla’s 20 tokens per parameter is a reasonable starting point. If the model will serve many requests, a smaller model trained on more tokens, as with SmolLM3, may cost less overall. In practice, this means adjusting the model’s architecture and size, increasing or decreasing the amount of training data, or optimizing the compute resources used for training.
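The trade-off between training and inference cost can be sketched with two rough estimates: about 6·N·D FLOPs to train and about 2·N FLOPs per token at inference. Both are common approximations assumed here, the model sizes are hypothetical, and the comparison only makes sense if the two models are treated as comparable in quality, which is itself an assumption that would need to be checked empirically.

```python
def training_flops(n_params: float, n_tokens: float) -> float:
    """Rough training compute, assuming C ~ 6 * N * D."""
    return 6.0 * n_params * n_tokens


def lifetime_flops(n_params: float, n_tokens: float, tokens_served: float) -> float:
    """Training compute plus inference compute (assumed ~2 * N FLOPs per token)."""
    return training_flops(n_params, n_tokens) + 2.0 * n_params * tokens_served


def break_even_tokens(small, large):
    """Tokens served at which the small model's lifetime compute matches the large one's.

    `small` and `large` are (n_params, n_training_tokens) pairs.
    Returns None if `small` does not save compute at inference time.
    """
    (ns, ds), (nl, dl) = small, large
    saving_per_token = 2.0 * (nl - ns)
    if saving_per_token <= 0:
        return None
    extra_training = training_flops(ns, ds) - training_flops(nl, dl)
    return max(extra_training, 0.0) / saving_per_token


# Hypothetical pair: a small model at 3,700:1 and a larger one at 20:1.
small = (3e9, 11.1e12)
large = (13e9, 2.6e11)

t = break_even_tokens(small, large)
print(f"break-even after ~{t:.2e} served tokens")
```

Below the break-even point, the larger compute-optimal model uses less total compute under these assumptions; above it, the smaller, longer-trained model does.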