Artificial General Intelligence: Ensuring a Safe Future

As AI hype permeates the Internet, tech and business leaders are already looking toward the next step. AGI, or artificial general intelligence, refers to a machine with human-like intelligence and capabilities. If today’s AI systems are on a path to AGI, we will need new approaches to ensure such a machine doesn’t work against human interests.

Understanding the Risks of AGI

Unfortunately, we don’t have anything as elegant as Isaac Asimov’s Three Laws of Robotics. Researchers at DeepMind have been working on this problem and have released a new technical paper that explains how to develop AGI safely.

It contains a huge amount of detail, clocking in at 108 pages before references. While some in the AI field believe AGI is a pipe dream, the authors of the DeepMind paper project that it could happen by 2030. With that in mind, they aimed to understand the risks of a human-like synthetic intelligence, which they acknowledge could lead to “severe harm.”

All the Ways AGI Could Suck for Humanity

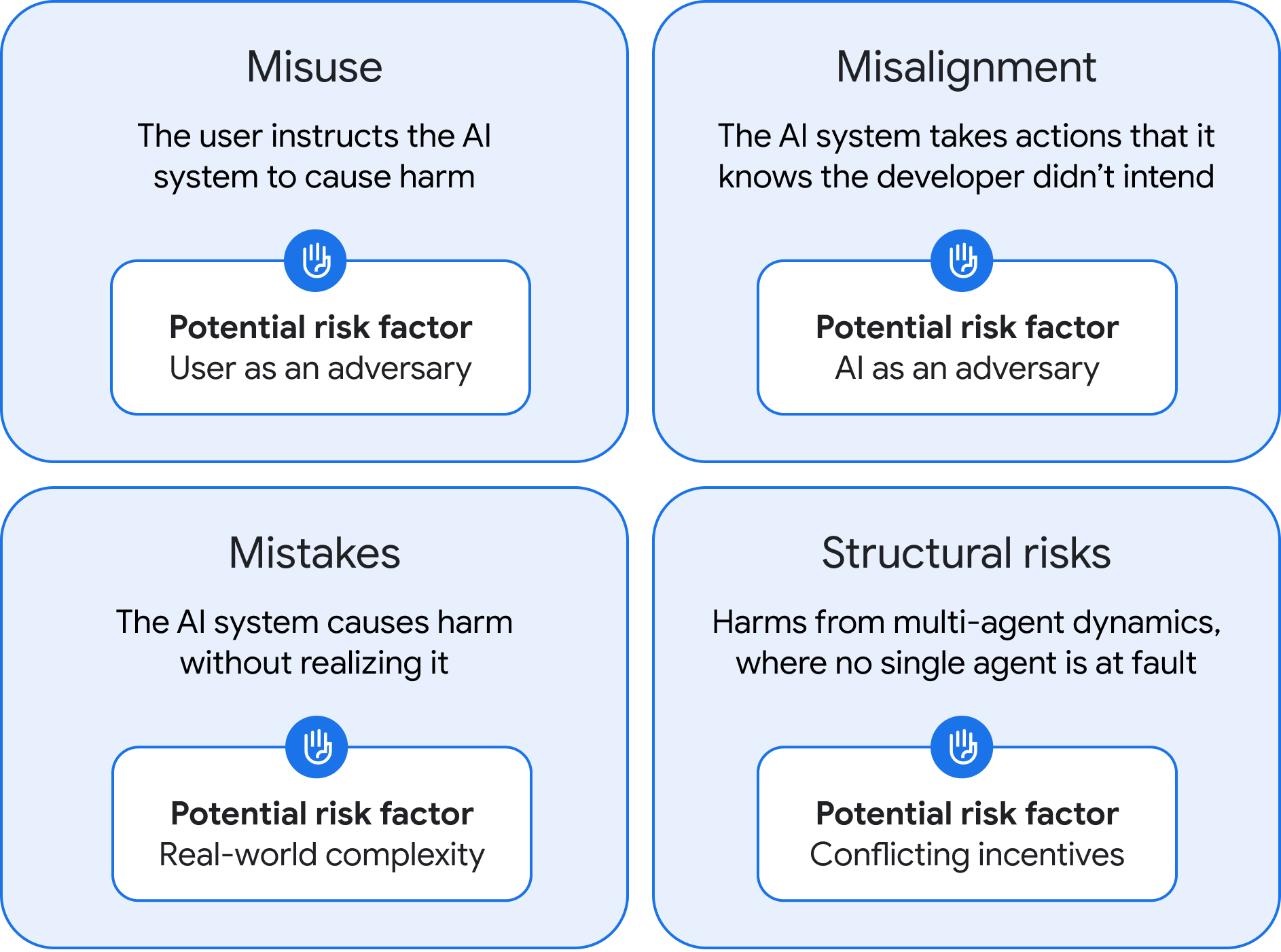

The paper identifies four possible types of AGI risk, along with suggestions on how we might mitigate them. The DeepMind team, led by company co-founder Shane Legg, categorized the negative AGI outcomes as misuse, misalignment, mistakes, and structural risks.

The four categories of AGI risk, as determined by DeepMind. Credit: Google DeepMind

Misuse of AGI

The first possible issue, misuse, is fundamentally similar to current AI risks. However, because AGI will by definition be more powerful, the damage it could do is much greater. A ne’er-do-well with access to AGI could use it to do harm, for example by asking it to identify and exploit zero-day vulnerabilities or to create a designer virus that could be used as a bioweapon.

DeepMind says companies developing AGI will have to conduct extensive testing and create robust post-training safety protocols. Essentially, AI guardrails on steroids. They also suggest devising a method to suppress dangerous capabilities entirely, sometimes called “unlearning,” but it’s unclear if this is possible without substantially limiting models.

Conclusion

Developing AGI is a complex task that requires careful weighing of its potential risks and benefits. While the prospect of a machine with human-like intelligence is exciting, it also raises serious questions about safety and responsibility. By understanding how AGI could cause harm and taking steps to mitigate those risks, we can work toward a future in which AGI is a powerful tool for humanity rather than a threat to it.

Frequently Asked Questions

What is AGI?

AGI, or artificial general intelligence, refers to a machine with human-like intelligence and capabilities.

What are the risks of AGI?

The risks of AGI include misuse, misalignment, mistakes, and structural risks, which could lead to severe harm if not properly addressed.

How can we ensure the safe development of AGI?

Companies developing AGI should conduct extensive testing, create robust post-training safety protocols, and consider devising methods to suppress dangerous capabilities entirely.