Try Taking a Picture of Each of North America’s 11,000 Tree Species: An Automated Research Assistant

Try taking a picture of each of North America’s roughly 11,000 tree species, and you’ll have a mere fraction of the millions of photos within nature image datasets. These massive collections of snapshots, ranging from butterflies to humpback whales, are a great research tool for ecologists because they provide evidence of organisms’ unique behaviors, rare conditions, migration patterns, and responses to pollution and climate change.

Automated Research Assistant: The Future of Nature Research

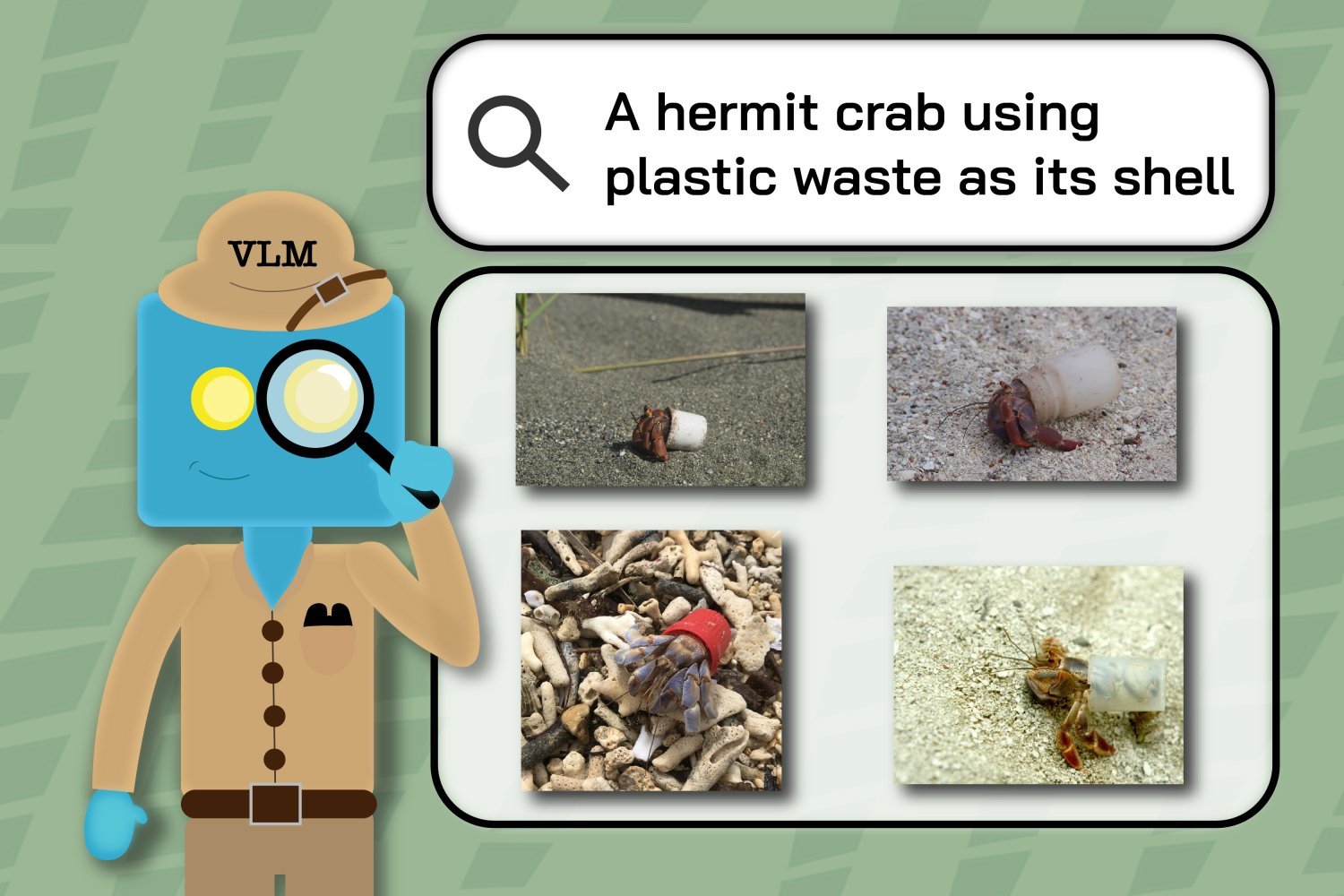

While comprehensive, nature image datasets aren’t yet as useful as they could be. Searching these databases for the images most relevant to your hypothesis is time-consuming. You’d be better off with an automated research assistant, such as the artificial intelligence systems known as multimodal vision language models (VLMs). Because they’re trained on both text and images, they can more easily pinpoint finer details, like the specific trees in the background of a photo.

How Well Can VLMs Assist Nature Researchers with Image Retrieval?

A team from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), University College London, iNaturalist, and elsewhere designed a performance test to find out. Each VLM’s task: retrieve and rank the most relevant results within the team’s "INQUIRE" dataset, which consists of 5 million wildlife pictures and 250 search prompts from ecologists and other biodiversity experts.

Looking for that Special Frog

In these evaluations, the researchers found that larger, more advanced VLMs, which are trained on far more data, can sometimes retrieve the results researchers want to see. The models performed reasonably well on straightforward queries about visual content, like identifying debris on a reef, but struggled significantly with queries requiring expert knowledge, like identifying specific biological conditions or behaviors. For example, VLMs fairly easily found examples of jellyfish on the beach but struggled with more technical prompts like "axanthism in a green frog," a condition that limits a frog’s ability to produce the yellow pigment in its skin.

A Step Towards Better Research Assistants

The team’s findings indicate that the models need much more domain-specific training data to process difficult queries. MIT PhD student Edward Vendrow, a CSAIL affiliate who co-led work on the dataset in a new paper, believes that with exposure to more informative data, VLMs could one day be great research assistants. "We want to build retrieval systems that find the exact results scientists seek when monitoring biodiversity and analyzing climate change," says Vendrow. "Multimodal models don’t quite understand more complex scientific language yet, but we believe that INQUIRE will be an important benchmark for tracking how they improve in comprehending scientific terminology and ultimately helping researchers automatically find the exact images they need."

Bigger Models, Better Rankings

The team’s experiments illustrated that larger models tended to be more effective for both simpler and more intricate searches due to their expansive training data. They first used the INQUIRE dataset to test if VLMs could narrow a pool of 5 million images to the top 100 most-relevant results (also known as "ranking"). For straightforward search queries like "a reef with manmade structures and debris," relatively large models like SigLIP found matching images, while smaller-sized CLIP models struggled.
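The ranking step can be pictured with a small sketch. Dual-encoder models such as CLIP and SigLIP map a text prompt and each image into a shared embedding space, so one common way to rank a pool is to score every image by its similarity to the prompt and keep the top k. The code below assumes the embeddings have already been computed by such a model; the function names, the toy three-dimensional vectors, and the file names are hypothetical, and this is not the team’s evaluation code.

```python
import heapq
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_images(
    query_embedding: Sequence[float],
    image_embeddings: Dict[str, Sequence[float]],
    k: int = 100,
) -> List[Tuple[str, float]]:
    """Hypothetical helper: return the k (image_id, score) pairs most similar to the query, best first."""
    scored = (
        (image_id, cosine_similarity(query_embedding, emb))
        for image_id, emb in image_embeddings.items()
    )
    # nlargest keeps only k candidates in a heap instead of sorting the whole pool.
    return heapq.nlargest(k, scored, key=lambda pair: pair[1])


if __name__ == "__main__":
    # Toy embeddings standing in for encoder outputs (assumed, not real model vectors).
    query = [1.0, 0.0, 0.0]  # e.g. "a reef with manmade structures and debris"
    images = {
        "reef_debris.jpg": [0.9, 0.1, 0.0],
        "jellyfish_beach.jpg": [0.1, 0.9, 0.2],
        "green_frog.jpg": [0.0, 0.2, 0.9],
    }
    for image_id, score in rank_images(query, images, k=2):
        print(f"{image_id}: {score:.3f}")
```

At the scale of 5 million images, a real system would more likely store embeddings in a matrix and use vectorized or approximate nearest-neighbor search rather than a Python loop; the heap-based selection here just keeps the example short.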

Frequently Asked Questions

Q: How can VLMs assist nature researchers with image retrieval?

A: VLMs can help researchers retrieve and rank relevant results within massive nature image datasets, making it easier to find the exact images they need.

Q: How well can VLMs process difficult queries?

A: VLMs can struggle with queries requiring expert knowledge, like identifying specific biological conditions or behaviors.

Q: What is the future of VLMs in nature research?

A: The team aims to develop a query system to better help scientists and other curious minds find the images they actually want to see, and to improve the re-ranking system by augmenting current models to provide better results.

Inquiring Minds Want to See

The researchers are working with iNaturalist to develop a query system to better help scientists and other curious minds find the images they actually want to see. Their working demo allows users to filter searches by species, enabling quicker discovery of relevant results like, say, the diverse eye colors of cats.