Introduction to Reasoning in LLMs

Our AI team attended COLM 2025 this year. In this piece, François Huppé-Marcoux, one of our AI engineers, shares the “aha” moment that reshaped his view of reasoning in LLMs.

What is Reasoning?

We talk about “reasoning” as if it’s a universal boost: add more steps, get a better answer. But reasoning isn’t one thing. Sometimes it’s the quiet, automatic pattern-matching that lets you spot your friend in a crowd. Sometimes it’s deliberate, step-by-step analysis — checking assumptions, chaining facts, auditing edge cases. Both are reasoning. The mistake is treating only the slow, verbal kind as “real.”

Everyday Life and Reasoning

Everyday life makes the split obvious. You don’t narrate how to catch a tossed set of keys; your body just does it. You do narrate your taxes. In one case, forcing a running commentary would make you worse; in the other, not writing the steps down would be reckless. The skill isn’t “always think more.” It’s knowing when thinking out loud helps and when it injects noise.

Large Language Models and Reasoning

Large language models tempt us to ask for “step-by-step” on everything. That sounds prudent, but it can turn into cargo-cult analysis: extra words standing in for better judgment. If a task is mostly matching or recognition, demanding a verbal chain of thought can distract the system (and us) from the signal we actually need. The reverse also holds: for multi-constraint problems, skipping structure is how you get confident nonsense.

A Personal Experience

I learned this the hard way, mid-lecture, with a room full of people humming their answers while I was still building a mental proof. I was at Tom Griffiths’ COLM 2025 talk on neuroscience and LLMs. He argued that reasoning is not always beneficial for LLMs. That surprised me: it’s generally assumed that reasoning enhances a model’s capabilities by letting it generate intermediate thoughts before the actual response.

System 1 and System 2

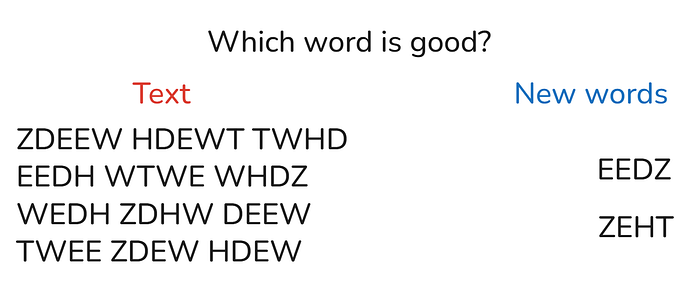

In psychology and neuroscience, there’s the well-known concept of System 1 and System 2 from Thinking, Fast and Slow by Daniel Kahneman. Fast thinking (System 1) is effortless and always active — recognizing a face, answering 2+2=4, or making practiced movements in sports. There’s little conscious deliberation, even though the brain is actively responding. Slow thinking (System 2) is when we need to mentally process information, such as solving “37*13” or my letter-by-letter analysis to find which sequences violated the language.

Disjunctive and Conjunctive Search

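In visual search, a disjunctive search looks for a target that differs from the distractors by a single feature, while a conjunctive search looks for a target defined by a combination of features. He finds that for disjunctive search, vision-language model (VLM) accuracy does not degrade as the number of objects increases, while conjunctive search accuracy degrades significantly with more objects. He writes: “This pattern suggests interference arising from the simultaneous processing of multiple items.”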
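To make the two search types concrete, here is a toy sketch in Python. It is not the experimental setup from the talk. The `Item` type, the scene builders, and the colour and shape values are all my own illustrative assumptions. It only shows why a single-feature check is enough in the disjunctive case and not in the conjunctive one.

```python
import random
from typing import List, NamedTuple


class Item(NamedTuple):
    color: str
    shape: str


TARGET = Item("red", "circle")


def disjunctive_scene(n: int) -> List[Item]:
    """One red circle among green circles: colour alone identifies the target."""
    scene = [Item("green", "circle") for _ in range(n - 1)]
    scene.append(TARGET)
    random.shuffle(scene)
    return scene


def conjunctive_scene(n: int) -> List[Item]:
    """One red circle among red squares and green circles:
    each distractor shares one feature with the target."""
    distractors = [Item("red", "square"), Item("green", "circle")]
    scene = [distractors[i % 2] for i in range(n - 1)]
    scene.append(TARGET)
    random.shuffle(scene)
    return scene


def candidates_by_color(scene: List[Item], color: str) -> List[Item]:
    """Items that survive a check on colour only."""
    return [item for item in scene if item.color == color]


def candidates_by_both(scene: List[Item], target: Item) -> List[Item]:
    """Items that match colour AND shape."""
    return [item for item in scene if item == target]
```

In the disjunctive scene, filtering on colour leaves exactly one candidate no matter how many objects there are. In the conjunctive scene, the same filter leaves a growing pile of red squares, and only the combined check isolates the target.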

Practical Applications

So what does this mean for everyday prompting? First, thinking isn’t free. Asking for chain-of-thought (or using a “reasoning mode”) costs tokens and time, and it can blunt the model’s intuitive edge. Second, not all tasks need it. Simple classification, direct retrieval, and pure matching often perform best with minimal scaffolding: “answer in one line,” “choose A or B,” “return the ID,” “pick the closest example.” Save step-by-step for problems that truly have multiple constraints or multi-step dependencies: data transformations, multi-hop reasoning, policy checks, tool choreography, and long instructions with delicate edge cases.
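As a rough illustration, the two prompting styles can be kept as separate suffixes and chosen per task. This is a minimal sketch, not a recommended wording: `build_prompt`, the mode names, and the exact suffix text are all assumptions of mine, and the resulting string would still need to be sent to whatever model client you use.

```python
# Hypothetical suffixes; the wording is illustrative, not tuned.
FAST_SUFFIX = "Answer in one line. Do not explain."
DELIBERATE_SUFFIX = (
    "First write a brief plan (at most 3 bullets), "
    "then give the final answer on its own line starting with 'Answer:'."
)


def build_prompt(task: str, mode: str) -> str:
    """Attach minimal or step-by-step scaffolding to a task description."""
    if mode == "fast":
        suffix = FAST_SUFFIX
    elif mode == "deliberate":
        suffix = DELIBERATE_SUFFIX
    else:
        raise ValueError(f"unknown mode: {mode!r}")
    return f"{task.strip()}\n\n{suffix}"


if __name__ == "__main__":
    print(build_prompt("Is this review positive or negative? 'Loved it.'", "fast"))
    print()
    print(build_prompt(
        "Convert these records to ISO dates, drop duplicates, "
        "and flag rows missing an ID.",
        "deliberate",
    ))
```

Keeping the scaffolding in one place makes it easy to run the same task in both modes and compare the results instead of guessing which one helps.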

A Practical Rule

A practical rule I’ve adopted since that talk:

- If the task is mostly about recognition, retrieval, or matching, prefer fast mode: concise prompts, no forced explanations, crisp output constraints.

- If the task is to compose, transform, or verify across several conditions, switch on deliberate reasoning, either by asking the model to plan (briefly) before answering or by structuring the workflow into explicit steps.

A Cleaner Mental Checklist

- Is success a pattern match? Use fast mode.

- Are there multiple “ANDs” to satisfy? Use deliberate mode.

- Do I need a rationale for auditability? Ask for a short, structured justification — after the answer, not before.

- Am I adding instructions just to feel safer? Remove them and re-test.
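The first three questions of the checklist can be written down as a small decision function. Treat this as a sketch under my own assumptions: `PromptPlan`, `plan_prompt`, and the tie-breaking order (multiple constraints win over pattern matching, and fast mode is the default when neither applies) are not from the talk. The fourth question is about re-testing, so it is not something code like this can answer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptPlan:
    mode: str                      # "fast" or "deliberate"
    rationale_after_answer: bool   # ask for a short justification after the answer


def plan_prompt(
    is_pattern_match: bool,
    has_multiple_constraints: bool,
    needs_audit_trail: bool,
) -> PromptPlan:
    """Map the checklist answers to a prompting plan."""
    if has_multiple_constraints:
        # Assumption: several "ANDs" take priority over everything else.
        mode = "deliberate"
    elif is_pattern_match:
        mode = "fast"
    else:
        # Assumption: start minimal, then re-test before adding scaffolding.
        mode = "fast"
    return PromptPlan(mode=mode, rationale_after_answer=needs_audit_trail)


if __name__ == "__main__":
    # Sentiment label that must be auditable later.
    print(plan_prompt(True, False, True))
    # Policy check with several conditions that must all hold.
    print(plan_prompt(False, True, False))
```

The rationale flag is kept separate from the mode on purpose: a fast answer can still carry a short justification after it, without forcing the model to reason before answering.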

Conclusion

I still like my DFA instincts. They’re great when correctness matters. But that day, the humming crowd had the right intuition: sometimes, the best prompt is the shortest one, and the best “reasoning” is not reasoning at all. Next time you turn on thinking mode in your favorite LLM application and forget it’s still on, remember: the model may perform better in non-thinking mode depending on the task.

FAQs

Q: What is the difference between System 1 and System 2 thinking?

A: System 1 thinking is fast, automatic, and effortless, while System 2 thinking is slow, deliberate, and mentally demanding.

Q: When should I use fast mode in LLMs?

A: Use fast mode for tasks that involve recognition, retrieval, or matching, and prefer concise prompts with no forced explanations.

Q: What is the trade-off between fast and deliberate mode in LLMs?

A: Fast mode is faster but may lack certainty and justification, while deliberate mode provides traceable logic and better reliability but at the cost of latency and sometimes creativity.