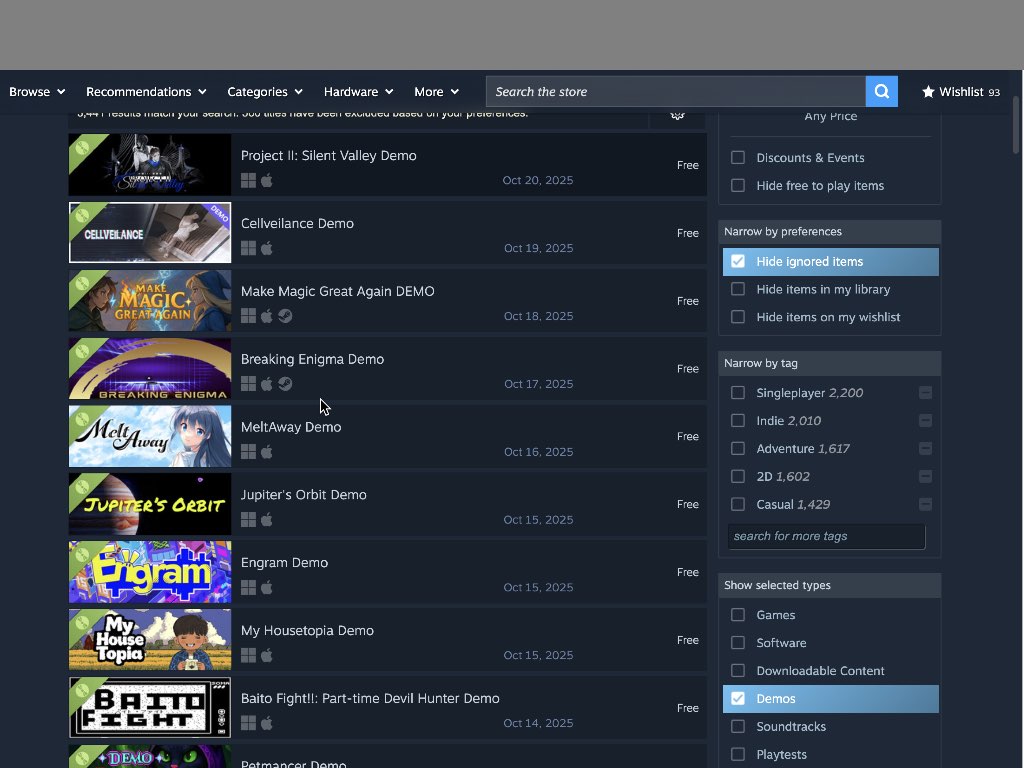

Introduction to MedGemma AI Models

Google has released two new AI models, MedGemma 27B Multimodal and MedSigLIP, as part of its growing collection of open-source healthcare AI models. They are intended for healthcare developers, hospitals, and researchers building tools to improve patient care and medical research. MedGemma 27B Multimodal can read medical text and interpret medical images such as chest X-rays and pathology slides, while MedSigLIP is a lightweight model trained specifically to understand medical images and relate them to text.

Google’s AI Meets Real Healthcare

MedGemma 27B has been evaluated on MedQA, a standard benchmark of medical knowledge, where it scored 87.7%. That is a strong result for a model small enough to run on a single GPU. The model processes medical text and images together, much as a doctor reads a patient's notes alongside their scans. Its smaller sibling, MedGemma 4B, also performed well, scoring 64.4% on the same benchmark. When US board-certified radiologists reviewed chest X-ray reports written by the model, they judged 81% of them accurate enough to guide actual patient care.

MedSigLIP: A Featherweight Powerhouse

MedSigLIP is much smaller, with only 400 million parameters, but it has been trained specifically on medical images. It learns to recognize the patterns and features that matter clinically while still handling everyday images. It also maps images and text into a shared representation, so it can search a database for cases that are similar in both appearance and clinical meaning.
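To make the retrieval idea concrete, here is a minimal sketch of how similar-case search over embeddings typically works, assuming each case in the database has already been converted to a vector by an image encoder such as MedSigLIP. The toy three-dimensional vectors, the case IDs, and the helper names `cosine_similarity` and `find_similar_cases` are hypothetical placeholders; real MedSigLIP embeddings are much higher-dimensional and would come from the released model, not from hand-written lists.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def find_similar_cases(query_embedding, case_db, top_k=2):
    """Score every stored case against the query and return the top_k best matches."""
    scored = [
        (case_id, cosine_similarity(query_embedding, embedding))
        for case_id, embedding in case_db.items()
    ]
    # Highest similarity first.
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical toy embeddings standing in for real MedSigLIP image vectors.
case_db = {
    "case_pneumonia_01": [0.9, 0.1, 0.0],
    "case_normal_07": [0.1, 0.9, 0.1],
    "case_effusion_03": [0.7, 0.2, 0.3],
}
# Hypothetical embedding of a new scan to look up.
query = [0.85, 0.15, 0.05]

for case_id, score in find_similar_cases(query, case_db):
    print(f"{case_id}: {score:.3f}")
```

Because images and text share one embedding space, the same ranking would in principle work with a text-query embedding in place of the image embedding. A production system would more likely use an approximate nearest-neighbor index than score every stored case one by one.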

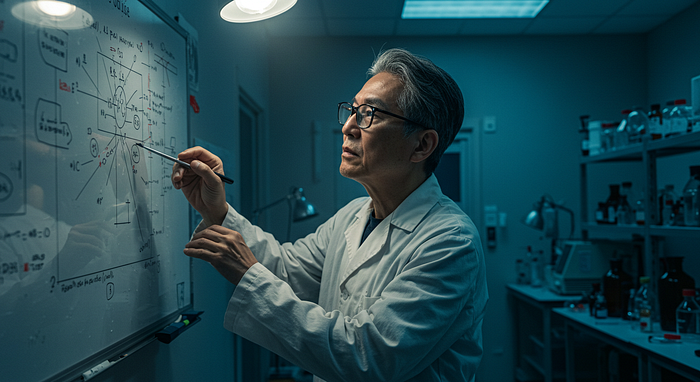

Healthcare Professionals Are Putting Google’s AI Models to Work

The proof of any AI tool lies in whether real professionals actually want to use it, and early reports suggest that doctors and healthcare companies are enthusiastic about these models. DeepHealth in Massachusetts has been testing MedSigLIP for chest X-ray analysis and found that it helps flag potential problems that might otherwise be missed. At Chang Gung Memorial Hospital in Taiwan, researchers found that MedGemma handles medical literature written in Traditional Chinese and answers staff questions with high accuracy.

Why Open-Sourcing the AI Models Is Critical in Healthcare

Google’s decision to make these models open-source is strategic. Healthcare has requirements that standard hosted AI services can’t always meet: hospitals need to know that patient data isn’t leaving their premises, and research institutions need models that won’t suddenly change behavior without warning. Open models address both concerns. A hospital can run MedGemma on its own servers, adapt it to its specific needs, and rely on a fixed model version behaving consistently over time.

Accessibility and Potential Applications

The models are designed to run on a single GPU, and the smaller versions can even be adapted for mobile devices. This opens the door to point-of-care AI applications in places where high-end computing infrastructure simply doesn’t exist. Smaller hospitals that couldn’t afford expensive AI services can now use state-of-the-art models, researchers in developing countries can build specialized tools for local health challenges, and medical schools can teach with AI built specifically for medical content.
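As a rough check on the single-GPU claim, the memory needed just to hold a model's weights is approximately its parameter count times the bytes stored per parameter. The sketch below treats the model names (27B, 4B, 400 million) as approximate parameter counts and ignores activations, caches, and framework overhead, so real requirements will be higher. The precision labels are common storage formats chosen for illustration, not a statement of how Google distributes these models, and `weight_memory_gb` is a hypothetical helper.

```python
# Approximate bytes used to store one parameter at common precisions (assumed formats).
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params, precision):
    """Weights-only memory estimate in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Parameter counts taken from the model names; treated as approximations.
models = [("MedGemma 27B", 27e9), ("MedGemma 4B", 4e9), ("MedSigLIP", 400e6)]

for name, params in models:
    estimates = ", ".join(
        f"{precision}: {weight_memory_gb(params, precision):.1f} GB"
        for precision in BYTES_PER_PARAM
    )
    print(f"{name} -> {estimates}")
```

Under these assumptions, the 27B model's weights need roughly 54 GB at 16-bit precision, which fits on a single 80 GB data-center GPU, and about 13.5 GB at 4-bit. The 4B model drops to around 2 GB at 4-bit, which helps explain why quantized versions are plausible on mobile hardware.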

Conclusion

Google’s release of the MedGemma models has the potential to transform healthcare. Their ability to understand both medical text and images, combined with their open-source nature, makes them a powerful tool for healthcare professionals. The models are not ready to replace doctors, but they can amplify human expertise and make it more accessible where it’s needed most. As healthcare continues to grapple with staff shortages, growing patient loads, and the need for more efficient workflows, AI tools like MedGemma could provide much-needed relief.

FAQs

Q: What are the MedGemma AI models?

A: The MedGemma AI models are open-source healthcare AI models developed by Google, designed to be used by healthcare developers, hospitals, and researchers to improve patient care and medical research.

Q: What can the MedGemma 27B model do?

A: The MedGemma 27B model can read medical text and understand medical images, such as chest X-rays and pathology slides.

Q: What is MedSigLIP?

A: MedSigLIP is a lightweight, 400-million-parameter model trained specifically on medical images. It links images and text, which lets it find similar cases in a database.

Q: Why did Google make the AI models open-source?

A: Google made the AI models open-source to address the unique requirements of healthcare, such as data privacy and consistency, and to allow hospitals and research institutions to modify and use the models as needed.

Q: What are the potential applications of the MedGemma AI models?

A: They include point-of-care AI applications, medical research, and education. Because the models run on modest hardware, they are within reach of smaller hospitals, researchers in developing countries, and medical schools.