Introduction to AI Vulnerabilities

Imagine uploading a cute cat photo to Google’s Gemini AI for a quick analysis, only to have the photo quietly feed Gemini instructions to steal your Google data. Sounds like a plot from a sci-fi thriller, right? Well, it’s not fiction: it’s a real vulnerability in how Gemini processes images. In this post, we’ll dive into how hackers embed invisible commands in images, turning your helpful AI assistant into an unwitting accomplice.

How This Happens: The Sneaky Mechanics of Image-Based Prompt Injection

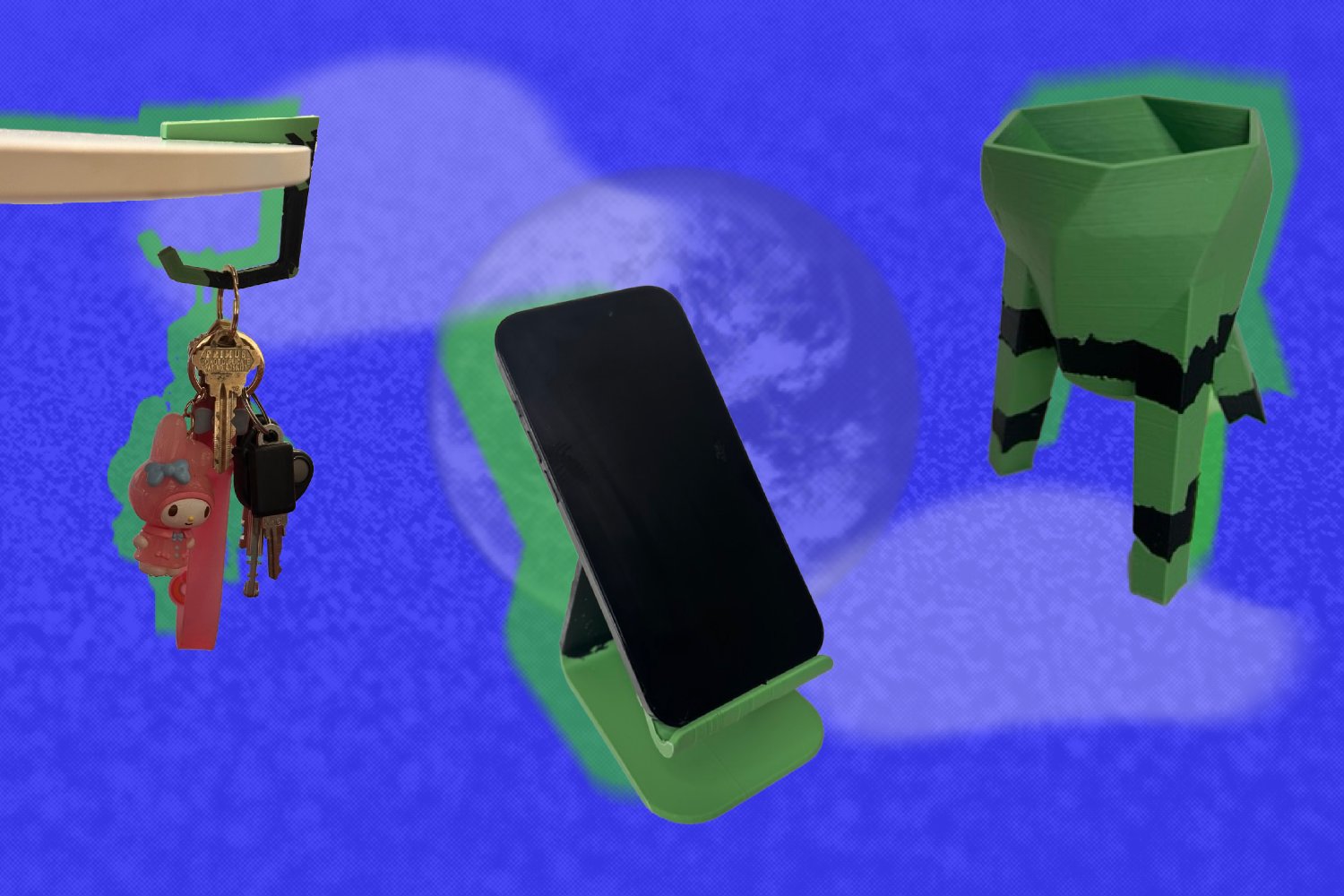

Picture this: You’re chatting with Gemini, Google’s multimodal AI that can handle text, images, and more, and you toss in an image for it to ponder. But behind the scenes, something sinister could be at play. The key here is prompt injection, which is basically when bad actors slip malicious instructions into an AI’s input to hijack its behavior. In Gemini’s case, this gets visual — hackers embed hidden messages right into image files.

It all starts with how Gemini processes large images. To save on computing power, the system automatically downscales them, shrinking the resolution using algorithms like bicubic interpolation (that’s a fancy way of saying each new pixel is a weighted average of its neighbors). Hackers exploit this by crafting high-res images that look totally normal to you. They tweak pixels in subtle spots, like dark corners, to encode text or commands. At full size, the changes are invisible, blending into the background like a chameleon. But when Gemini scales the image down? Boom: the hidden stuff emerges clear as day, thanks to aliasing effects (think of it as digital distortion, like those optical illusions where patterns shift when you squint). Gemini then reads that revealed text as if it were part of your prompt.
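To make the downscaling trick concrete, here is a toy sketch in plain Python. It is not Gemini's actual pipeline: it assumes a much simpler downscaler than bicubic, one that just keeps the top-left pixel of every 8×8 block, and the image, the `payload` pattern, and all function names are made up for illustration. The idea it shows is the same, though: change only the pixels the downscaler keeps, and the hidden pattern dominates the small image while barely touching the big one.

```python
# Toy model of an image-scaling attack on a grayscale image stored as a
# list of rows. Assumption: the downscaler keeps only the top-left pixel
# of each FACTOR x FACTOR block (plain decimation), not bicubic.


def embed_payload(cover, payload, factor):
    """Copy `cover` and overwrite only the pixels the downscaler will keep."""
    stego = [row[:] for row in cover]
    for y, payload_row in enumerate(payload):
        for x, value in enumerate(payload_row):
            stego[y * factor][x * factor] = value
    return stego


def downscale_decimate(image, factor):
    """Keep every `factor`-th pixel in both directions."""
    return [row[::factor] for row in image[::factor]]


FACTOR = 8
BACKGROUND = 20  # dark background, like a "dark corner"
INK = 60         # slightly lighter value used for the hidden pattern

# Hypothetical 4x4 payload; a real attack would encode rendered text.
payload = [
    [INK, BACKGROUND, BACKGROUND, INK],
    [BACKGROUND, INK, INK, BACKGROUND],
    [BACKGROUND, INK, INK, BACKGROUND],
    [INK, BACKGROUND, BACKGROUND, INK],
]

cover = [[BACKGROUND] * 32 for _ in range(32)]
stego = embed_payload(cover, payload, FACTOR)

# Count how many full-size pixels the attacker actually touched.
changed = sum(
    1 for y in range(32) for x in range(32) if stego[y][x] != cover[y][x]
)
recovered = downscale_decimate(stego, FACTOR)

print(f"pixels changed at full size: {changed} of {32 * 32}")
print("payload recovered after downscaling:", recovered == payload)
```

Fewer than 1% of the full-size pixels change, yet the downscaled image is the payload and nothing else. Attacks against bicubic or bilinear scaling follow the same logic but have to solve for pixel values that survive the weighted averaging.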

Why This Happens: Root Causes in AI Design and the Quest for Efficiency

Now that we’ve seen the “how,” let’s unpack the “why” — because understanding the roots helps us spot the cracks. At its core, Gemini is built as a multimodal powerhouse, meaning it embeds and processes info from images, text, and videos into vectors. Models like multimodalembedding@001 do this brilliantly, but they prioritize speed and usability over ironclad security.

The big culprit? Efficiency trade-offs. Downscaling images isn’t just a quirk — it’s essential for handling massive files without crashing servers or draining batteries. But this creates a mismatch: you see the full-res image, while Gemini works on the shrunken version, where hidden nasties pop out. It’s like editing a movie scene that’s only visible in the director’s cut — except here, the director is a hacker.

Implications and How Hackers Exploit It

The implications? Oh boy! At stake is everything from personal privacy to enterprise security. A successful attack could lead to data exfiltration (fancy term for stealing info), like siphoning your Google Calendar events to a hacker’s inbox without a trace. In agentic systems — where Gemini integrates with tools like Zapier — it might even trigger real actions, such as sending emails or controlling smart devices. Imagine your AI “helping” by opening your smart locks because a hidden prompt said so. Broader risks include phishing waves in workspaces, where one tainted image in a shared doc spreads chaos, or even supply-chain attacks hitting millions via apps like Gmail.

How to Be Safe from These Attacks

Don’t panic — while these attacks sound like something out of Black Mirror, staying safe is doable with some smart habits. First off, treat untrusted images like suspicious candy from strangers: don’t upload them to Gemini without a once-over. Use free tools like pixel analyzers or steganography detectors (apps that sniff out hidden data in files) to scan for anomalies before hitting “submit.”
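If you are curious what such a once-over might look for, here is a rough heuristic sketched in plain Python. It is an illustration rather than an established detector: it downscales the same image two ways (keeping one pixel per block versus averaging the whole block) and flags the image when the two results disagree a lot, since a natural photo tends to look similar either way. The function names, the 8× factor, and the `THRESHOLD` value are all assumptions, and the check knows nothing about which downscaler Gemini really uses.

```python
# Heuristic check: does the image look very different depending on how
# it is downscaled? Works on a grayscale image stored as a list of rows.


def decimate(image, factor):
    """Downscale by keeping every `factor`-th pixel."""
    return [row[::factor] for row in image[::factor]]


def box_average(image, factor):
    """Downscale by averaging each factor x factor block."""
    height, width = len(image), len(image[0])
    out = []
    for top in range(0, height - factor + 1, factor):
        out_row = []
        for left in range(0, width - factor + 1, factor):
            block = [
                image[top + dy][left + dx]
                for dy in range(factor)
                for dx in range(factor)
            ]
            out_row.append(sum(block) / len(block))
        out.append(out_row)
    return out


def max_disagreement(image, factor):
    """Largest per-pixel gap between the two downscaled versions."""
    sampled = decimate(image, factor)
    averaged = box_average(image, factor)
    return max(
        abs(s - a)
        for s_row, a_row in zip(sampled, averaged)
        for s, a in zip(s_row, a_row)
    )


FACTOR = 8
THRESHOLD = 10  # hypothetical cutoff; would need tuning on real images

# A plain dark image, and a copy with two pixels nudged exactly where
# a decimating downscaler would sample them.
clean = [[20] * 32 for _ in range(32)]
suspicious = [row[:] for row in clean]
suspicious[0][0] = 60
suspicious[8][8] = 60

for name, image in (("clean", clean), ("suspicious", suspicious)):
    gap = max_disagreement(image, FACTOR)
    verdict = "flag for review" if gap > THRESHOLD else "looks consistent"
    print(f"{name}: max disagreement {gap:.3f} -> {verdict}")
```

A check like this can miss attacks tuned to a different scaling algorithm and can misfire on images with fine textures, so treat a flag as a reason to look closer, not as proof.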

Next Steps by Gemini: Google’s Game Plan for a Safer Future

Speaking of fixes, what’s next for Gemini? Google isn’t sitting idle — they’re already layering defenses, like training models on adversarial data to spot injections (think of it as AI boot camp against bad prompts). Their blogs highlight “layered security,” from better prompt parsing to ML detectors that flag hidden commands before they activate.

Conclusion

The vulnerability in Gemini’s image processing is a real concern, but a manageable one. Understanding how the attack works and staying cautious with untrusted images goes a long way toward keeping you safe while Google works on a fix.

FAQs

- What is prompt injection?

Prompt injection is when bad actors slip malicious instructions into an AI’s input to hijack its behavior.

- How do hackers embed hidden messages in images?

Hackers tweak pixels in subtle spots, like dark corners, to encode text or commands, which emerge when the image is downscaled.

- What are the implications of a successful attack?

A successful attack could lead to data exfiltration, triggering real actions, and broader risks like phishing waves and supply-chain attacks.

- How can I stay safe from these attacks?

Treat untrusted images with caution, use free tools to scan for anomalies, and keep your software up to date.

- What is Google doing to fix the issue?

Google is layering defenses, training models on adversarial data, and highlighting “layered security” to prevent these types of attacks.