Introduction to AI Optimization

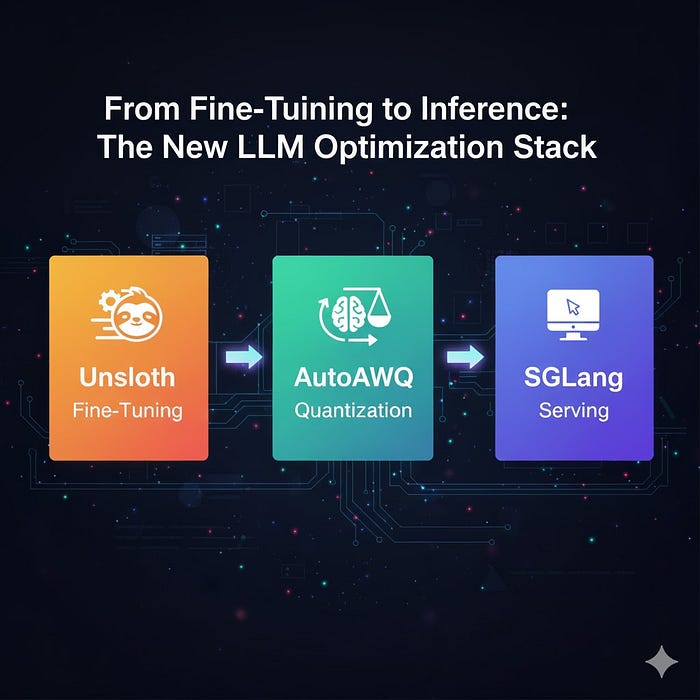

As Large Language Models (LLMs) become more powerful, training and deploying them remains expensive and resource-intensive. Recently, a new generation of lightweight AI optimization frameworks has emerged that lets developers train, compress, and serve models more efficiently. This new stack is built around three core frameworks: Unsloth, AutoAWQ, and SGLang.

What are the Three Core Frameworks?

Together, these frameworks form an end-to-end workflow (fine-tune, compress, serve) that reduces compute costs, accelerates experimentation, and scales better than traditional stacks:

- Unsloth: accelerates fine-tuning with memory-efficient kernels

- AutoAWQ: automates quantization to shrink models for cheaper inference

- SGLang: provides high-throughput and structured inference for production

Unsloth — Fast & Efficient Fine-Tuning

Fine-tuning has traditionally been one of the biggest bottlenecks in working with LLMs. Even for mid-sized models with ~7B parameters, full fine-tuning needs far more GPU memory than a single consumer card provides, and even 16-bit LoRA can be tight on memory and slow to train. Unsloth addresses this with kernel-level optimizations and efficient LoRA/QLoRA implementations, and it supports popular model families such as Llama, Mistral, Phi, and Gemma.
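A back-of-the-envelope estimate shows why full fine-tuning is so demanding. This sketch assumes the common rule of thumb of about 16 bytes per trained parameter for mixed-precision Adam (FP16 weights and gradients, an FP32 master copy, and two FP32 optimizer moments) and ignores activations, so real usage is higher. For QLoRA it assumes 4-bit frozen base weights and about 20M adapter parameters, roughly what rank-8 LoRA on every linear layer of a Llama-2-7B-sized model adds.

```python
def full_finetune_gb(n_params: float) -> float:
    """Full fine-tuning with mixed-precision Adam, excluding activations.

    Per parameter: 2 B FP16 weights + 2 B FP16 gradients + 4 B FP32 master copy
    + 8 B for Adam's two FP32 moments = 16 bytes.
    """
    return n_params * 16 / 1e9


def qlora_gb(n_params: float, n_adapter_params: float) -> float:
    """QLoRA: frozen 4-bit base weights (0.5 B/param) plus adapters trained
    with the same 16 B/param recipe, excluding activations."""
    return (n_params * 0.5 + n_adapter_params * 16) / 1e9


n = 7e9  # a ~7B-parameter model
print(f"Full fine-tuning: ~{full_finetune_gb(n):.0f} GB")
print(f"QLoRA with ~20M adapter params: ~{qlora_gb(n, 20e6):.1f} GB")
```

The roughly 30x gap, before activations are counted, is why QLoRA-style training fits on a single consumer GPU while full fine-tuning of the same model does not.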

Key Benefits of Unsloth

- 2–3x faster training compared to standard Hugging Face + PEFT setups, according to Unsloth's own benchmarks

- Memory-efficient LoRA/QLoRA implementations — train 7B-13B models on consumer GPUs

- Custom Triton GPU kernels for transformer layers that reduce training overhead
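The LoRA idea behind these memory savings is simple: the pretrained weight W (d_out × d_in) stays frozen, and training only updates two small matrices B (d_out × r) and A (r × d_in), so the layer computes y = Wx + (alpha / r)·BAx. The pure-Python toy below illustrates that forward pass and the parameter count with r = 8 and alpha = 16; it is not Unsloth's implementation, and the 64 × 64 weight is made-up data.

```python
import random


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class LoRALinear:
    """Toy LoRA layer: frozen weight W plus a trainable low-rank update B @ A."""

    def __init__(self, w, r=8, alpha=16, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(w), len(w[0])
        self.w = w  # frozen pretrained weight, d_out x d_in
        # A starts small and random, B starts at zero, so training begins from W exactly
        self.a = [[rng.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]
        self.b = [[0.0] * r for _ in range(d_out)]
        self.scaling = alpha / r

    def forward(self, x):
        base = matvec(self.w, x)
        update = matvec(self.b, matvec(self.a, x))
        return [y + self.scaling * u for y, u in zip(base, update)]

    def trainable_params(self):
        return len(self.a) * len(self.a[0]) + len(self.b) * len(self.b[0])


d = 64
w = [[0.01 * ((i + j) % 7) for j in range(d)] for i in range(d)]  # made-up frozen weight
layer = LoRALinear(w, r=8, alpha=16)
x = [1.0] * d
print(layer.trainable_params(), "trainable parameters vs", d * d, "frozen")
```

At realistic sizes the ratio is far more dramatic: for a 4096 × 4096 projection with r = 8, LoRA trains 8 × (4096 + 4096) = 65,536 parameters instead of about 16.8 million.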

Example — How to fine-tune a Llama 3 model

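Unsloth is used as a Python library. The example below loads Llama 3 8B in 4-bit precision, attaches LoRA adapters with rank 8 and alpha 16, and saves merged weights to ./finetuned-llama for the quantization step.

```bash
# Install Unsloth
pip install unsloth
```

```python
from unsloth import FastLanguageModel

# Load a pre-quantized 4-bit Llama 3 8B checkpoint (QLoRA-style) so it fits on one GPU
model, tokenizer = FastLanguageModel.from_pretrained(
    model_name="unsloth/llama-3-8b-bnb-4bit", max_seq_length=2048, load_in_4bit=True
)
# Attach trainable LoRA adapters (rank 8, alpha 16) to the attention and MLP projections
model = FastLanguageModel.get_peft_model(
    model, r=8, lora_alpha=16,
    target_modules=["q_proj", "k_proj", "v_proj", "o_proj", "gate_proj", "up_proj", "down_proj"],
)
# ... train on ./data/instructions.json here, e.g. with TRL's SFTTrainer ...
# Merge the adapters into 16-bit weights so AutoAWQ can quantize them
model.save_pretrained_merged("./finetuned-llama", tokenizer, save_method="merged_16bit")
```

Unsloth allows developers and startups to train models at a fraction of the usual cost, without needing large GPU clusters.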

AutoAWQ — Smarter Quantization, Smaller Models

After fine-tuning, LLMs are typically still too large for cost-effective inference. That's where AutoAWQ comes in. AutoAWQ automates quantization for popular LLM architectures using the AWQ (Activation-aware Weight Quantization) method: it runs a small calibration dataset through the model, uses the observed activation magnitudes to identify the weight channels that matter most, scales them to protect them, and then quantizes the weights to low precision (typically 4-bit) while largely preserving accuracy.
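AWQ's core trick can be sketched in a few lines. AWQ scales each input channel j of W up by s_j = mean|x_j|^alpha before quantizing and scales the matching activations down by the same factor (in practice folded into the preceding layer), so Wx is unchanged in exact arithmetic but weights on high-activation channels lose less precision; alpha is grid-searched to minimize the layer's output error on calibration data. The pure-Python toy below is a simplified rendition of that idea, not AutoAWQ's code (which works per transformer block and also searches clipping ranges); the group size of 32, the 11-point alpha grid, and the random data are illustrative assumptions.

```python
import random


def quantize_group(vals, n_bit=4):
    """Asymmetric round-to-nearest quantization of one group; returns the dequantized values."""
    q_max = 2 ** n_bit - 1
    lo, hi = min(vals), max(vals)
    scale = max(hi - lo, 1e-8) / q_max
    zero = round(-lo / scale)
    return [(min(max(round(v / scale) + zero, 0), q_max) - zero) * scale for v in vals]


def quantize_weight(w, n_bit=4, group_size=32):
    """Quantize each output row in contiguous groups of input channels."""
    return [
        [q for g in range(0, len(row), group_size) for q in quantize_group(row[g:g + group_size], n_bit)]
        for row in w
    ]


def output_error(w_hat, w, xs):
    """Mean squared difference between the layer outputs W_hat x and W x over calibration inputs."""
    total, count = 0.0, 0
    for x in xs:
        for row_hat, row in zip(w_hat, w):
            diff = sum(xj * (a - b) for xj, a, b in zip(x, row_hat, row))
            total += diff * diff
            count += 1
    return total / count


def awq_search(w, xs, n_bit=4, group_size=32, n_grid=10):
    """Grid-search alpha for per-channel scales s = mean|x|**alpha; return (error, alpha)."""
    d_in = len(w[0])
    act = [max(sum(abs(x[j]) for x in xs) / len(xs), 1e-8) for j in range(d_in)]
    best_err, best_alpha = float("inf"), 0.0
    for i in range(n_grid + 1):
        alpha = i / n_grid  # alpha = 0 gives s = 1, i.e. plain round-to-nearest
        s = [a ** alpha for a in act]
        norm = (max(s) * min(s)) ** 0.5  # keep the scales centred around 1
        s = [v / norm for v in s]
        q = quantize_weight([[wij * sj for wij, sj in zip(row, s)] for row in w], n_bit, group_size)
        # Feeding x / s into Q(W * s) is the same as feeding x into Q(W * s) / s
        w_hat = [[qij / sj for qij, sj in zip(row, s)] for row in q]
        err = output_error(w_hat, w, xs)
        if err < best_err:
            best_err, best_alpha = err, alpha
    return best_err, best_alpha


rng = random.Random(0)
d_in, d_out = 64, 16
w = [[rng.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]
# Calibration inputs in which every 16th channel carries much larger activations
xs = [[rng.gauss(0, 10 if j % 16 == 0 else 1) for j in range(d_in)] for _ in range(32)]

rtn_err = output_error(quantize_weight(w), w, xs)
awq_err, alpha = awq_search(w, xs)
print(f"round-to-nearest error: {rtn_err:.4f}, AWQ-style error: {awq_err:.4f} (alpha={alpha})")
```

Because alpha = 0 reproduces plain round-to-nearest, the search can never do worse than that baseline on the calibration data.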

Key Benefits of AutoAWQ

- Reduce weight storage by roughly 70–75% with INT4 quantization (versus FP16)

- Compatible with Unsloth fine-tuned models and SGLang inference

- Enables running large models on consumer or edge hardware

- Cuts inference costs by fitting models on fewer or smaller GPUs
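The size reduction is simple arithmetic. This sketch assumes an 8B-parameter model and 4-bit weights with one FP16 scale and one FP16 zero point per group of 128 weights (packed formats may store zero points more compactly), and it ignores layers that are often kept in higher precision, such as embeddings.

```python
def model_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Storage for the weights alone, in GB (1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


def int4_bits_per_weight(group_size: int = 128, scale_bits: int = 16, zero_bits: int = 16) -> float:
    """4-bit weights plus one scale and one zero point stored per group."""
    return 4 + (scale_bits + zero_bits) / group_size


n = 8e9  # an 8B-parameter model
fp16 = model_size_gb(n, 16)
int4 = model_size_gb(n, int4_bits_per_weight())
print(f"FP16: {fp16:.1f} GB, INT4: {int4:.2f} GB, {1 - int4 / fp16:.0%} smaller")
```

Under these assumptions the INT4 checkpoint is about 73% smaller, consistent with the reduction quoted above.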

Quantization Example

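```bash
# Install AutoAWQ
pip install autoawq
```

```python
from awq import AutoAWQForCausalLM
from transformers import AutoTokenizer

model_path = "./finetuned-llama"  # merged 16-bit weights from the fine-tuning step
quant_path = "./llama-awq"
# 4-bit weights, one scale/zero point per group of 128 weights, GEMM kernels
quant_config = {"zero_point": True, "q_group_size": 128, "w_bit": 4, "version": "GEMM"}

model = AutoAWQForCausalLM.from_pretrained(model_path)
tokenizer = AutoTokenizer.from_pretrained(model_path)
model.quantize(tokenizer, quant_config=quant_config)  # runs AWQ calibration and quantization
model.save_quantized(quant_path)
tokenizer.save_pretrained(quant_path)
```

By using AutoAWQ after fine-tuning and before deployment, you can shrink your models and reduce inference costs at scale.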

SGLang — High-Performance Structured Inference

Once the model is fine-tuned and quantized, the next challenge is serving it efficiently. SGLang is a next-generation inference engine built for structured generation and high throughput. Because it exposes an OpenAI-compatible API, it can stand in for inference engines like vLLM with few client-side changes, while offering more control over the structure of generated outputs. This makes it well suited to applications like function calling, JSON generation, and agent frameworks.
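Structured generation is typically implemented as constrained decoding: at each step the engine masks out tokens that would make the output violate the required format, then picks from the rest. The toy sketch below shows that masking step with a hypothetical seven-token vocabulary, hard-coded preferences standing in for model logits, and a small set of allowed outputs standing in for a grammar. SGLang itself compiles regexes and JSON schemas into finite-state machines (with pluggable grammar backends), which this does not reproduce.

```python
import json


def constrained_greedy(score, vocab, allowed, max_steps=20):
    """Greedy decoding that only emits tokens keeping the output a prefix of an allowed string.

    score(prefix, token) stands in for model logits; `allowed` is a toy stand-in for a grammar.
    """
    out = ""
    for _ in range(max_steps):
        if out in allowed:
            return out
        # Mask step: drop every token that would make all allowed outputs unreachable
        valid = [t for t in vocab if any(a.startswith(out + t) for a in allowed)]
        if not valid:
            raise ValueError(f"no valid continuation after {out!r}")
        out += max(valid, key=lambda t: score(out, t))
    return out


# Hypothetical vocabulary and preferences: unconstrained, the "model" would start with chatter
vocab = ["Sure! ", "{", '"framework"', ": ", '"SGLang"', '"vLLM"', "}"]
prefs = {"Sure! ": 5.0, '"SGLang"': 2.0, '"vLLM"': 1.0}
allowed = {'{"framework": "SGLang"}', '{"framework": "vLLM"}'}

result = constrained_greedy(lambda prefix, tok: prefs.get(tok, 0.0), vocab, allowed)
print(result, json.loads(result))
```

Without the mask, greedy decoding would begin with the higher-scoring "Sure! " token and produce text no JSON parser accepts; with it, the output always parses.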

Key Benefits of SGLang

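- Faster inference through optimized KV cache handling (RadixAttention reuses cached prompt prefixes across requests) and token streaming

- Structured output support: constrained decoding makes models produce parseable, predictable formats such as JSON, without post-hoc regex parsing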

- High throughput for multi-user environments

- Lightweight and production-ready without custom hacks
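The KV cache point is worth unpacking. SGLang's RadixAttention keeps the KV cache of earlier requests in a radix tree keyed by token IDs, so a new request that shares a prefix (for example, the same system prompt) can reuse those cached entries instead of recomputing them. The sketch below is a simplified token-level trie, not SGLang's data structure (no stored tensors, no eviction, no path compression), with made-up token IDs; it only counts how many prompt tokens could be served from cache.

```python
class PrefixCache:
    """Toy token-level trie: a path from the root is a prompt prefix whose KV entries are cached."""

    def __init__(self):
        self.root = {}

    def match_and_insert(self, tokens):
        """Return the length of the longest cached prefix of `tokens`, then cache the whole sequence."""
        node, hits = self.root, 0
        for tok in tokens:
            if tok in node:
                hits += 1  # KV for this position can be reused
            else:
                node[tok] = {}  # new branch: KV must be computed, then cached
            node = node[tok]  # after a miss this node is empty, so later tokens also miss
        return hits


system_prompt = [101, 7, 42, 9, 13, 88]  # hypothetical token IDs shared by every request
prompts = [system_prompt + [5, 6], system_prompt + [8, 2, 3], system_prompt + [5, 1]]

cache = PrefixCache()
reused = [cache.match_and_insert(p) for p in prompts]
for p, r in zip(prompts, reused):
    print(f"{r}/{len(p)} prompt tokens served from cache")
```

Only the first request pays for the shared system prompt, which is why prefix reuse helps most when many requests share long prompts, as in multi-turn chat or agent loops.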

Serving Example

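```bash
# Install SGLang
pip install "sglang[all]"

# Serve your model
python -m sglang.launch_server --model-path ./llama-awq --port 8080
```

Then you can send structured queries. This request uses SGLang's native `/generate` endpoint and constrains the output with a JSON Schema:

```python
import json
import requests

# JSON Schema that the generated text must satisfy
schema = {
    "type": "object",
    "properties": {"framework": {"type": "string"}, "benefit": {"type": "string"}},
    "required": ["framework", "benefit"],
}

response = requests.post("http://localhost:8080/generate", json={
    "text": "Return a JSON object with two fields: framework and benefit",
    "sampling_params": {"max_new_tokens": 128, "json_schema": json.dumps(schema)},
})
print(json.loads(response.json()["text"]))
```

With SGLang, developers can scale inference to many concurrent users while keeping responses well-structured for downstream applications.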

How do these frameworks fit together?

By combining Unsloth, AutoAWQ, and SGLang, developers can build an end-to-end pipeline:

- Fine-tune with Unsloth: fast, efficient training even on a single GPU

- Quantize with AutoAWQ: shrink models for cheaper, faster inference

- Serve with SGLang: deploy structured, high-throughput inference at scale

Together, they form a modern, modular optimization workflow that saves money, accelerates development, and scales to production workloads.
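A rough memory budget shows how the quantize and serve stages interact: every gigabyte not spent on weights is room for KV cache, and therefore for more concurrent requests. This sketch assumes Llama 3 8B's attention shape (32 layers, 8 key/value heads, head dimension 128), an FP16 KV cache, a hypothetical 24 GB GPU, and about 4.25 bits per weight for INT4 including group scales; it ignores activations and framework overhead, so real capacity is lower.

```python
def kv_bytes_per_token(n_layers: int, n_kv_heads: int, head_dim: int, bytes_per_value: int = 2) -> int:
    """Keys and values (the factor 2) for every layer and key/value head."""
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_value


def cacheable_tokens(gpu_gb: float, weights_gb: float, per_token: int) -> int:
    """Tokens of KV cache that fit in the memory left after loading the weights."""
    free_bytes = max(gpu_gb - weights_gb, 0) * 1e9
    return int(free_bytes // per_token)


per_token = kv_bytes_per_token(n_layers=32, n_kv_heads=8, head_dim=128)  # Llama 3 8B shape
n_params = 8e9
for label, bits in [("FP16", 16), ("INT4 (AWQ)", 4.25)]:
    weights_gb = n_params * bits / 8 / 1e9
    tokens = cacheable_tokens(24, weights_gb, per_token)
    print(f"{label}: {weights_gb:.2f} GB weights, ~{tokens:,} cacheable tokens")
```

Under these assumptions the INT4 model leaves room for roughly 2.5x as many cached tokens as the FP16 one.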

Conclusion

If you’re an AI developer, now is the time to experiment with this modular stack. These frameworks reflect a broader shift in the AI ecosystem:

- Instead of one-size-fits-all tools, developers are assembling tailored stacks

- GPU time = money — optimization directly impacts viability

- These tools let small teams do what used to require large research labs

- Fine-tuning, quantization, and serving are becoming plug-and-play

While Unsloth, AutoAWQ, and SGLang cover the core stages, the ecosystem is evolving rapidly.

FAQs

Q: What is the purpose of Unsloth?

A: Unsloth accelerates fine-tuning with memory-efficient kernels, allowing for faster and more efficient training of LLMs.

Q: How does AutoAWQ work?

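A: AutoAWQ automates AWQ quantization for popular LLM architectures. It uses activation statistics from a small calibration dataset to protect the most important weights, then reduces the weights to low precision (typically 4-bit) while largely maintaining accuracy, enabling cheaper inference.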

Q: What is SGLang used for?

A: SGLang is a next-generation inference engine built for structured generation and high throughput, ideal for applications like function calling, JSON generation, or agent frameworks.