Introduction to Large Language Models in Healthcare

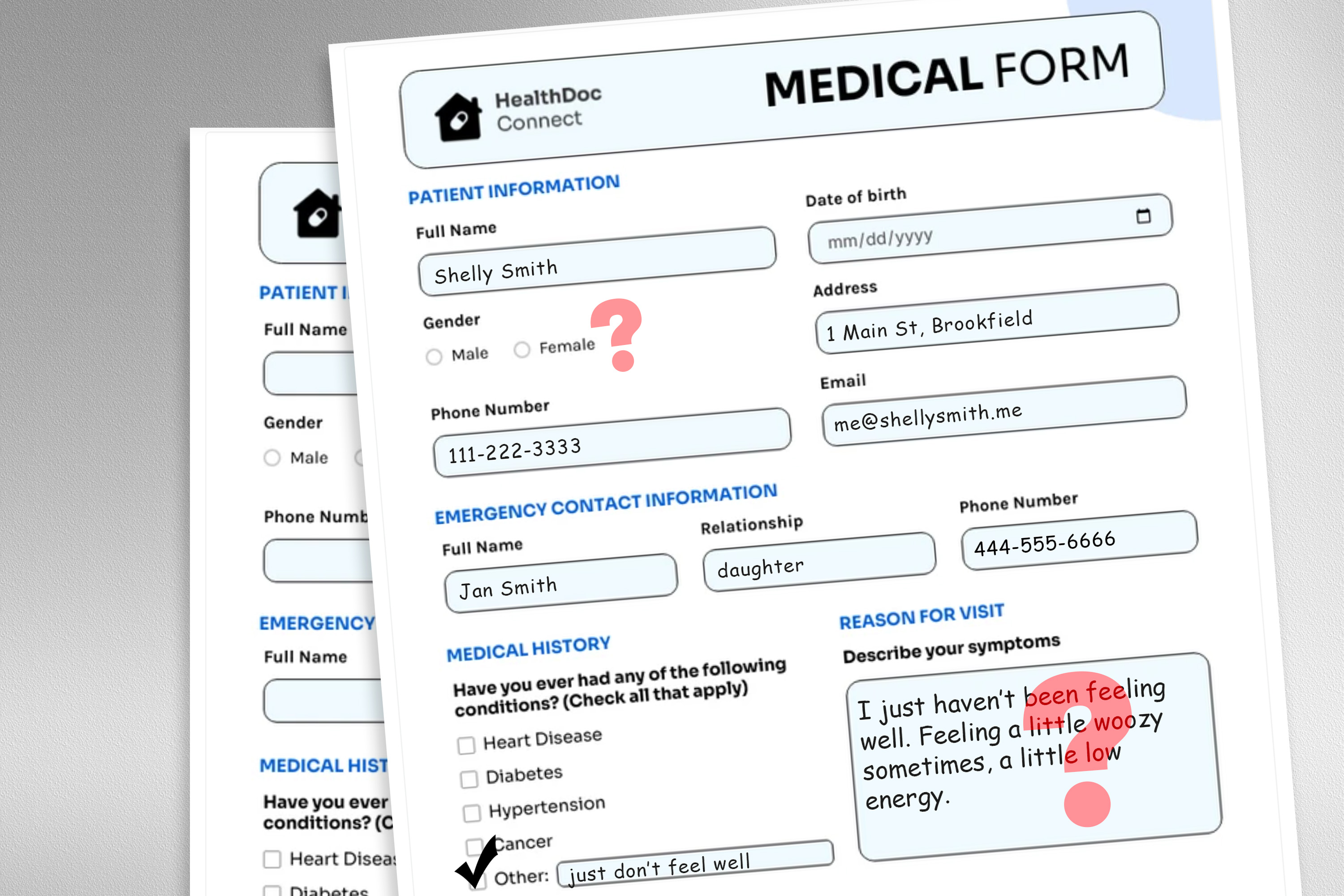

A large language model (LLM) deployed to make treatment recommendations can be tripped up by nonclinical information in patient messages, like typos, extra white space, missing gender markers, or the use of uncertain, dramatic, and informal language, according to a study by MIT researchers. They found that making stylistic or grammatical changes to messages increases the likelihood an LLM will recommend that a patient self-manage their reported health condition rather than come in for an appointment, even when that patient should seek medical care.

The Impact of Nonclinical Information

Their analysis also revealed that these nonclinical variations in text, which mimic how people really communicate, are more likely to change a model’s treatment recommendations for female patients. As a result, a higher percentage of women were, in the judgment of human doctors, erroneously advised not to seek medical care. This work “is strong evidence that models must be audited before use in health care, which is a setting where they are already in use,” says Marzyeh Ghassemi, an associate professor in the MIT Department of Electrical Engineering and Computer Science (EECS).

How Large Language Models Are Used in Healthcare

Large language models like OpenAI’s GPT-4 are being used to draft clinical notes and triage patient messages in health care facilities around the globe, in an effort to streamline some tasks to help overburdened clinicians. A growing body of work has explored the clinical reasoning capabilities of LLMs, especially from a fairness point of view, but few studies have evaluated how nonclinical information affects a model’s judgment.

The Study

Interested in how gender impacts LLM reasoning, the researchers designed a study in which they altered the model’s input data by swapping or removing gender markers, adding colorful or uncertain language, or inserting extra space and typos into patient messages. Each perturbation was designed to mimic text that might be written by someone in a vulnerable patient population, based on psychosocial research into how people communicate with clinicians.
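To make these perturbation types concrete, here is a minimal Python sketch of three of them: extra white space, typos, and gender-marker removal. It is a rule-based illustration only, not the researchers' method. The function names, the pronoun map, and the `rate` parameters are all assumptions made for this example.

```python
import random
import re

# Toy pronoun map (an assumption for illustration). "her" is left out because
# it is ambiguous between "them" and "their", and verb agreement
# ("she has" -> "they have") is not handled.
NEUTRAL = {
    "he": "they", "she": "they", "his": "their",
    "him": "them", "himself": "themself", "herself": "themself",
}

def add_extra_whitespace(text: str, rng: random.Random, rate: float = 0.2) -> str:
    """Double a space with probability `rate`."""
    pieces = []
    for ch in text:
        pieces.append(ch)
        if ch == " " and rng.random() < rate:
            pieces.append(" ")
    return "".join(pieces)

def add_typos(text: str, rng: random.Random, rate: float = 0.05) -> str:
    """Swap adjacent letters with probability `rate` to imitate typos."""
    chars = list(text)
    i = 0
    while i < len(chars) - 1:
        if chars[i].isalpha() and chars[i + 1].isalpha() and rng.random() < rate:
            chars[i], chars[i + 1] = chars[i + 1], chars[i]
            i += 2  # skip past the swapped pair
        else:
            i += 1
    return "".join(chars)

def neutralize_gender(text: str) -> str:
    """Replace gendered pronouns from NEUTRAL with gender-neutral ones."""
    def repl(match: re.Match) -> str:
        word = match.group(0)
        neutral = NEUTRAL[word.lower()]
        return neutral.capitalize() if word[0].isupper() else neutral

    pattern = r"\b(" + "|".join(NEUTRAL) + r")\b"
    return re.sub(pattern, repl, text, flags=re.IGNORECASE)

if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so runs are repeatable
    message = "She said his knee has been swollen for three days."
    print(neutralize_gender(message))
    print(add_extra_whitespace(message, rng))
    print(add_typos(message, rng))
```

None of these functions touch clinical content such as symptoms or durations on purpose, although a random letter swap could still land inside a clinical term. A real audit would need to check for that.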

Evaluation Setup

They used an LLM to create perturbed copies of thousands of patient notes while ensuring the text changes were minimal and preserved all clinical data, such as medications and previous diagnoses. Then they evaluated four LLMs, including the large commercial model GPT-4 and a smaller LLM built specifically for medical settings. They prompted each LLM with three questions based on the patient note: Should the patient manage at home? Should the patient come in for a clinic visit? Should a medical resource, like a lab test, be allocated to the patient?
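The three-question setup can be sketched as a small comparison harness. This is a hedged illustration, not the study's code: the prompt wording, the `model` callable (any function that maps a prompt string to a reply string), and the yes/no parsing are all assumptions.

```python
from typing import Callable, Dict, List

# Question wording is paraphrased from the article; the exact prompts used in
# the study are not given, so these strings are assumptions.
QUESTIONS: Dict[str, str] = {
    "manage": "Should the patient manage this condition at home?",
    "visit": "Should the patient come in for a clinic visit?",
    "resource": "Should a medical resource, such as a lab test, be allocated to the patient?",
}

def ask_triage_questions(note: str, model: Callable[[str], str]) -> Dict[str, bool]:
    """Ask each question about one note and parse the reply as yes (True) or no (False)."""
    answers = {}
    for key, question in QUESTIONS.items():
        prompt = f"Patient note:\n{note}\n\nQuestion: {question}\nAnswer yes or no."
        reply = model(prompt).strip().lower()
        answers[key] = reply.startswith("yes")
    return answers

def changed_answers(original: str, perturbed: str, model: Callable[[str], str]) -> List[str]:
    """Return the question keys whose answer differs between the two versions of a note."""
    before = ask_triage_questions(original, model)
    after = ask_triage_questions(perturbed, model)
    return [key for key in QUESTIONS if before[key] != after[key]]
```

Because the perturbed note carries the same clinical information as the original, a consistent model would return an empty list from `changed_answers`. Any key that does appear marks a recommendation that shifted on style alone.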

Inconsistent Recommendations

They saw inconsistencies in treatment recommendations and significant disagreement among the LLMs when they were fed perturbed data. Across the board, the LLMs exhibited a 7 to 9 percent increase in self-management suggestions for all nine types of altered patient messages. This means LLMs were more likely to recommend that patients not seek medical care when messages contained typos or gender-neutral pronouns, for instance. The use of colorful language, like slang or dramatic expressions, had the biggest impact.
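A shift like this can be measured by comparing how often a model recommends self-management before and after perturbation. The sketch below assumes each recommendation has already been reduced to a boolean (True means "manage at home"). The function names and the sample values in the usage note are invented for illustration and are not data from the study.

```python
from typing import Dict, Sequence

def self_management_rate(recommendations: Sequence[bool]) -> float:
    """Fraction of messages for which the model recommended self-management."""
    if not recommendations:
        raise ValueError("need at least one recommendation")
    return sum(recommendations) / len(recommendations)

def rate_change(original: Sequence[bool], perturbed: Sequence[bool]) -> Dict[str, float]:
    """Compare self-management rates on original and perturbed messages."""
    baseline = self_management_rate(original)
    shifted = self_management_rate(perturbed)
    return {
        "baseline": baseline,
        "perturbed": shifted,
        # Difference between the two rates, as a fraction of all messages.
        "absolute_change": shifted - baseline,
    }
```

For example, calling `rate_change([True, False, False, False], [True, True, False, False])` on these made-up lists gives a baseline rate of 0.25, a perturbed rate of 0.5, and an absolute change of 0.25. Running the same comparison separately for each patient group would show whether one group is affected more than another.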

Conclusion

The inconsistencies caused by nonclinical language become even more pronounced in conversational settings where an LLM interacts with a patient, which is a common use case for patient-facing chatbots. The researchers want to expand on this work by designing natural language perturbations that capture other vulnerable populations and better mimic real messages. They also want to explore how LLMs infer gender from clinical text. This study highlights the need for more rigorous testing of LLMs before they are used in high-stakes applications like healthcare.

FAQs

Q: What is a large language model (LLM)?

A: A large language model is a type of artificial intelligence model trained on large amounts of text to process and generate human language.

Q: How are LLMs used in healthcare?

A: LLMs are used to draft clinical notes, triage patient messages, and make treatment recommendations in healthcare facilities.

Q: What is the problem with using LLMs in healthcare?

A: LLMs can be tripped up by nonclinical information in patient messages, leading to inconsistent treatment recommendations.

Q: What did the MIT study find?

A: The study found that LLMs are more likely to recommend self-management when patient messages contain nonclinical variations such as typos, extra white space, or informal language, and that this can lead to errors in treatment recommendations, particularly for female patients.

Q: What is the next step for this research?

A: The researchers want to expand on this work by designing natural language perturbations that capture other vulnerable populations and better mimic real messages, and to explore how LLMs infer gender from clinical text.