Introduction to Explainable AI

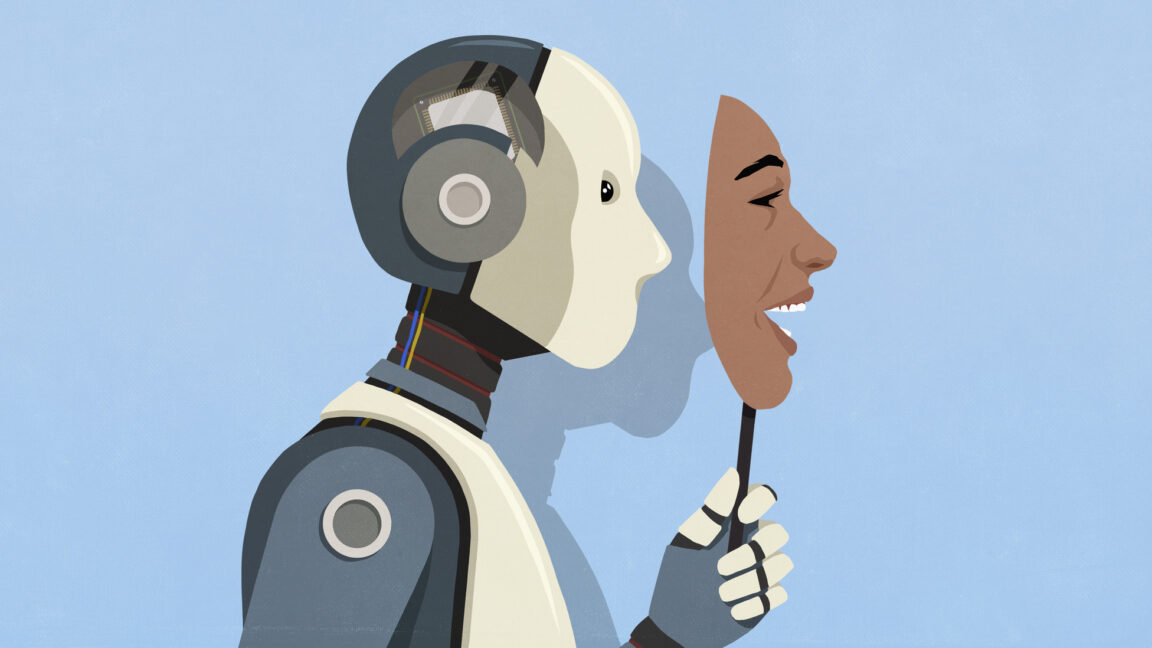

Imagine an AI system that decides who gets a loan, yet no one can explain why it approved one person and rejected another. That’s the challenge many modern AI systems face. As machine learning models grow more powerful, they often become less transparent. These “black box” models make decisions that can impact lives, yet their inner workings are hidden from users.

What is Explainable AI?

Explainable AI (XAI) is a solution to this problem. It focuses on creating tools and techniques that make AI decisions understandable to humans. Instead of just giving an output, explainable models can show why a certain prediction was made, what factors influenced it, and how reliable the decision is.
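
As a minimal sketch of what such an explanation can look like, consider a hypothetical linear loan-scoring model. The feature names, weights, bias, and threshold below are invented for illustration and do not come from any real lender or library. For a linear model, each feature's contribution to the score is simply its weight times its value, so the prediction breaks down exactly into per-feature factors.

```python
# Hypothetical linear loan-scoring model. The weights, bias, and threshold
# are illustrative assumptions, not taken from any real system.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "years_employed": 0.2}
BIAS = -1.0
THRESHOLD = 0.0  # approve when score >= THRESHOLD


def explain_decision(applicant):
    """Return the decision, the score, and each feature's contribution."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    decision = "approved" if score >= THRESHOLD else "rejected"
    # Sort so the most influential factors (by magnitude) come first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return decision, score, ranked


applicant = {"income_k": 50, "debt_ratio": 0.6, "years_employed": 2}
decision, score, ranked = explain_decision(applicant)
print(f"Decision: {decision} (score {score:.2f})")
for name, value in ranked:
    print(f"  {name}: {value:+.2f}")
```

For this made-up applicant the model rejects the loan, and the breakdown shows that the debt ratio is the only factor pulling the score down. Real black-box models do not decompose this cleanly, which is why dedicated explanation methods exist.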

Why is Explainability Important?

Explainability matters for three main reasons:

- Accountability: If something goes wrong, we need to know what happened and why. Explainability supports legal and ethical responsibility.

- Trust: When users understand a model’s decision-making, they are more likely to trust and use it. Clear explanations help reduce fear around AI.

- Debugging: Developers use explanations to find bugs, biases, or weak points in a model. This helps improve the model and make it more accurate.

Implementing Explainable AI in Python

In this article, we explore several methods of Explainable AI (XAI) and demonstrate how to implement them using Python. By using these methods, developers can create more transparent and trustworthy AI models.
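
One widely used model-agnostic method is permutation importance: shuffle one feature column at a time and measure how much the model's accuracy drops. The sketch below uses only the Python standard library. The `model` function, the feature names, and the synthetic data are hypothetical stand-ins chosen for illustration, not a method prescribed by this article.

```python
import random


def model(row):
    """Stand-in black box: approves (1) when income outweighs debt.
    It ignores the third feature entirely."""
    income, debt, _noise = row
    return 1 if income - 2 * debt > 0 else 0


def accuracy(predict, rows, labels):
    """Fraction of rows where the prediction matches the label."""
    correct = sum(predict(r) == y for r, y in zip(rows, labels))
    return correct / len(rows)


def permutation_importance(predict, rows, labels, n_features, rng, n_repeats=10):
    """Mean drop in accuracy when each feature column is shuffled."""
    baseline = accuracy(predict, rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            # Rebuild each row with feature j replaced by a shuffled value.
            shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(predict, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


rng = random.Random(0)  # fixed seed so the run is repeatable
rows = [
    (rng.uniform(0, 10), rng.uniform(0, 5), rng.uniform(0, 1))
    for _ in range(500)
]
# Labels come from the model itself, so baseline accuracy is 1.0.
labels = [model(r) for r in rows]

names = ["income", "debt", "noise"]
scores = permutation_importance(model, rows, labels, len(names), rng)
for name, s in zip(names, scores):
    print(f"{name}: {s:.3f}")
```

Shuffling `income` or `debt` hurts accuracy, while shuffling `noise` changes nothing because the model never reads it. The same idea applies to a trained model by passing its prediction function in place of `model`. Libraries such as scikit-learn provide their own implementations of this technique.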

Conclusion

Explainable AI is a crucial aspect of modern AI development. By making AI decisions more transparent and understandable, we can build more trustworthy and reliable models. As AI continues to grow and impact our lives, explainability will become increasingly important.

Frequently Asked Questions (FAQs)

Q: What is the main goal of Explainable AI?

A: The main goal of Explainable AI is to make AI decisions more transparent and understandable to humans.

Q: Why is explainability important in AI development?

A: Explainability is important in AI development because it supports accountability, trust, and debugging. It helps to identify biases, errors, and weaknesses in AI models, making them more reliable and trustworthy.

Q: Can Explainable AI be implemented in Python?

A: Yes, Explainable AI can be implemented in Python using various libraries and techniques. This article explores some of the methods and demonstrates how to implement them using Python.