Introduction to Vision-Language Models

Vision-language models (VLMs) are artificial intelligence systems that combine visual and language components to understand and describe images. They are increasingly used for tasks such as image recognition, object detection, and image captioning. Despite these capabilities, VLMs struggle to recognize personalized objects, such as a specific pet or a child’s backpack.

The Problem with Vision-Language Models

VLMs excel at recognizing general object categories, but they perform poorly at locating personalized objects, that is, one particular instance of a category. This is because VLMs are typically trained on large datasets of images collected at random from various sources. Such datasets do not give the model the contextual clues it needs to recognize a personalized object. As a result, VLMs tend to rely on their pre-trained knowledge to identify objects rather than learning from context.

A New Approach to Training Vision-Language Models

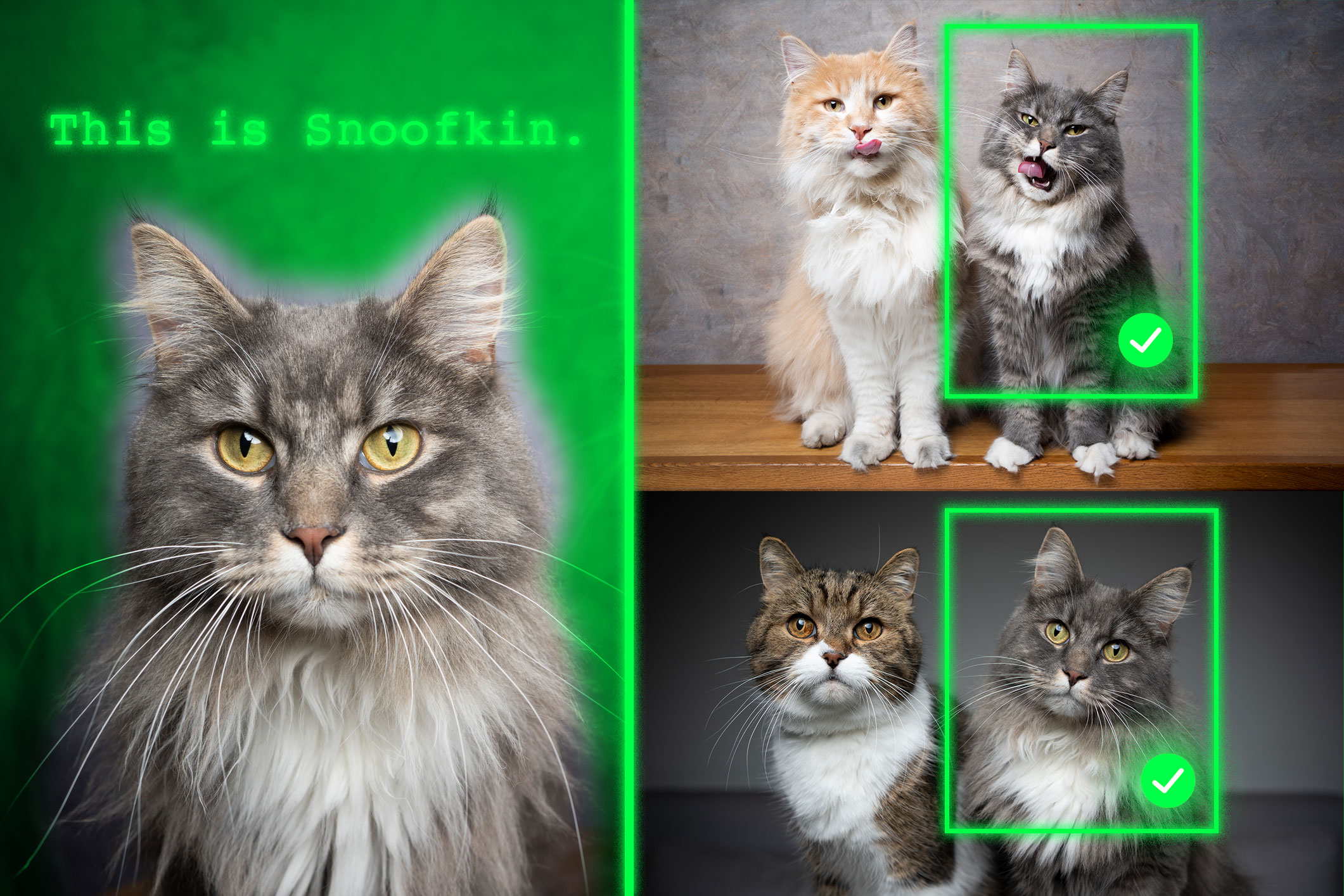

To address this shortcoming, researchers from MIT and the MIT-IBM Watson AI Lab have introduced a new training method that teaches VLMs to localize personalized objects in a scene. The method uses carefully prepared video-tracking data, where the same object is tracked across multiple frames. This dataset is designed to encourage the model to focus on contextual clues to identify the personalized object, rather than relying on pre-trained knowledge.

How the New Approach Works

The new approach gives the model a few example images showing a personalized object, such as a pet, and then asks it to identify the location of that same object in a new image. Because the examples show the same object in different contexts, the model is encouraged to localize it consistently by focusing on the context. To prevent the model from cheating by falling back on its pre-trained knowledge of object categories, the researchers use pseudo-names rather than actual object category names in the dataset.
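The sample construction described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the researchers' actual pipeline: the `Frame` record, the `build_sample` function, the prompt wording, and the `PSEUDO_NAMES` list are all hypothetical, and the article does not specify how frames are chosen or how prompts are phrased.

```python
import random
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Frame:
    """One video frame in which the tracked object is visible (hypothetical record)."""
    image_path: str
    box: Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) of the tracked object


# Hypothetical pseudo-names; any made-up tokens that carry no category meaning would do.
PSEUDO_NAMES = ["Zorb", "Miko", "Tavi", "Quill"]


def build_sample(track: List[Frame], category: str, num_examples: int,
                 rng: random.Random) -> Dict:
    """Turn one object track into a few-shot localization sample.

    `category` is the real class label (e.g. "dog"). It is stored for
    bookkeeping only and never appears in the text shown to the model.
    """
    if len(track) < num_examples + 1:
        raise ValueError("track needs at least num_examples + 1 frames")

    # Pick distinct frames of the same object: a few examples plus one query.
    *examples, query = rng.sample(track, num_examples + 1)
    name = rng.choice(PSEUDO_NAMES)

    context = [
        {"image": f.image_path, "text": f"This is {name}.", "box": f.box}
        for f in examples
    ]
    return {
        "context": context,
        "query": {"image": query.image_path, "text": f"Where is {name}?"},
        "target_box": query.box,      # ground-truth location in the query frame
        "hidden_category": category,  # kept out of every prompt string
    }


# Example usage with a toy five-frame track.
toy_track = [Frame(f"frame_{i}.jpg", (i, i, i + 10, i + 10)) for i in range(5)]
toy_sample = build_sample(toy_track, "dog", num_examples=3, rng=random.Random(0))
print(toy_sample["query"]["text"])
```

The point of the sketch is that the only link between the example images and the query is the pseudo-name, so a model cannot answer by recalling what the category usually looks like and has to use the examples in context.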

Results and Implications

The results are promising: models retrained with this method outperform state-of-the-art systems at the task of personalized object localization. The technique improved accuracy by about 12 percent on average, and by up to 21 percent when pseudo-names were used. The performance gains also grow as model size increases. This new approach could help future AI systems track specific objects across time, such as a child’s backpack, or localize objects of interest, such as a species of animal in ecological monitoring.

Future Directions

The researchers plan to study possible reasons why VLMs don’t inherit in-context learning capabilities from their base large language models (LLMs). They also plan to explore additional mechanisms to improve the performance of a VLM without the need to retrain it with new data. The ultimate goal is to enable VLMs to learn from context, just like humans do, and to perform tasks without requiring extensive retraining.

Conclusion

The new approach to training VLMs has shown promising results in improving the ability of these models to recognize and locate personalized objects. By using video-tracking data and pseudo-names, it encourages the model to focus on contextual clues to identify the object of interest. The technique has the potential to improve the performance of VLMs in applications such as image recognition, object detection, and image captioning. As the field of AI continues to evolve, further advances in VLMs and their ability to learn from context are likely.

FAQs

- What are vision-language models (VLMs)?

VLMs are artificial intelligence systems that combine visual and language components to understand and describe images.

- What is the problem with VLMs?

VLMs excel at recognizing general objects, but they perform poorly at locating personalized objects.

- How does the new approach to training VLMs work?

The new approach uses carefully prepared video-tracking data, where the same object is tracked across multiple frames, to encourage the model to focus on contextual clues to identify the personalized object.

- What are the results of the new approach?

The new approach has improved accuracy by about 12 percent on average, and up to 21 percent when using pseudo-names.

- What are the potential applications of the new approach?

The new approach could help future AI systems track specific objects across time, such as a child’s backpack, or localize objects of interest, such as a species of animal in ecological monitoring.