Introduction to AI Security Risks

The integration of AI into products from Microsoft, Apple, Google, and Meta has raised concerns about security risks. These companies aim to provide users with innovative features, but the protection mechanisms they implement may not be effective at preventing threats.

The Problem with User Warnings

The goals of these protection mechanisms are sound, but they depend on users reading and understanding dialog windows that warn of risks and require careful approval before proceeding. That dependence is the weak point. As Earlence Fernandes, a University of California, San Diego professor specializing in AI security, notes, "Sometimes those users don’t fully understand what is going on, or they might just get habituated and click ‘yes’ all the time. At which point, the security boundary is not really a boundary."

The Risk of ClickFix Attacks

Many users can be tricked into following extremely dangerous instructions, as demonstrated by the rash of "ClickFix" attacks, in which victims are persuaded to copy and run malicious commands themselves. Such mistakes are inevitable: users may be fatigued, emotionally distressed, or simply lack the knowledge to recognize a threat. Even careful users slip up, and those who are not tech-savvy may not understand enough to make an informed decision.

Criticisms of Microsoft’s Approach

Microsoft’s warning has been criticized as a mere "cover your ass" (CYA) maneuver that aims to shield the company from liability rather than provide effective protection. Critic Reed Mideke argues that Microsoft, like the rest of the industry, has no idea how to stop prompt injection or hallucinations, which makes AI fundamentally unfit for serious applications. In Mideke’s telling, the industry’s answer is simply to shift liability to the user, which is not a viable approach.

Broader Implications for AI Integrations

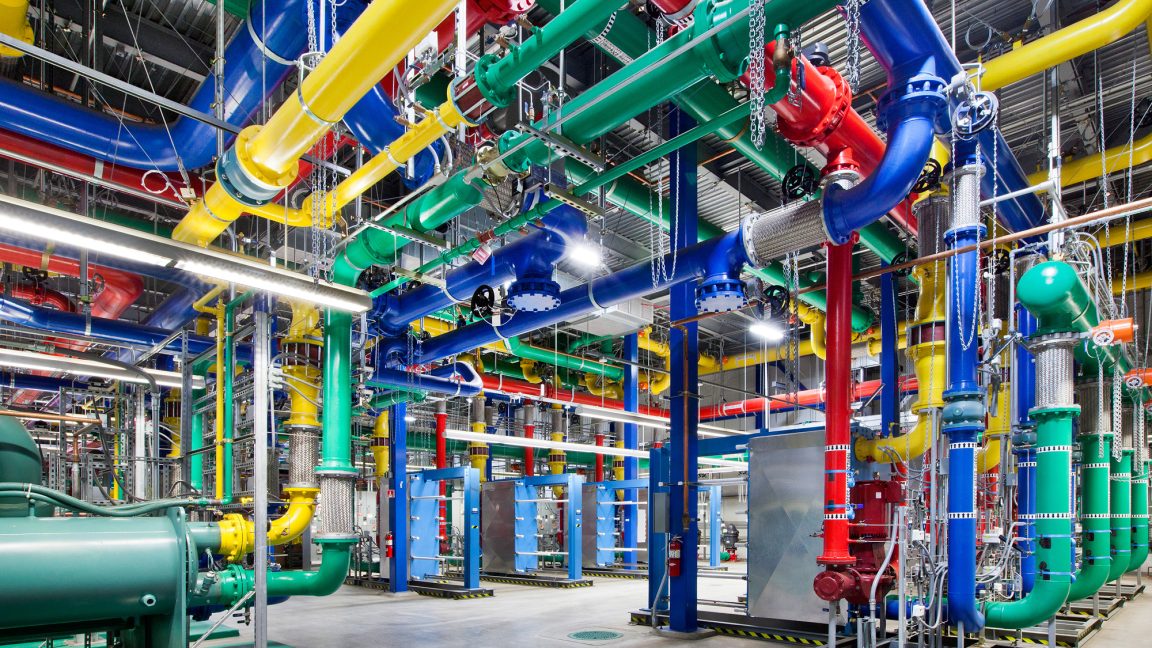

The criticisms of Microsoft’s approach extend to AI offerings from other companies, including Apple, Google, and Meta. These integrations often start as optional features but eventually become default capabilities, whether users want them or not. This raises concerns about the potential risks and consequences of relying on AI for critical tasks.

Conclusion

AI integrations offer innovative features, but they also pose significant security risks. Protection mechanisms like Microsoft’s depend on user warnings, which may not stop threats when users misunderstand them or approve them out of habit. More robust security solutions are needed to ensure the safe use of AI across applications.

FAQs

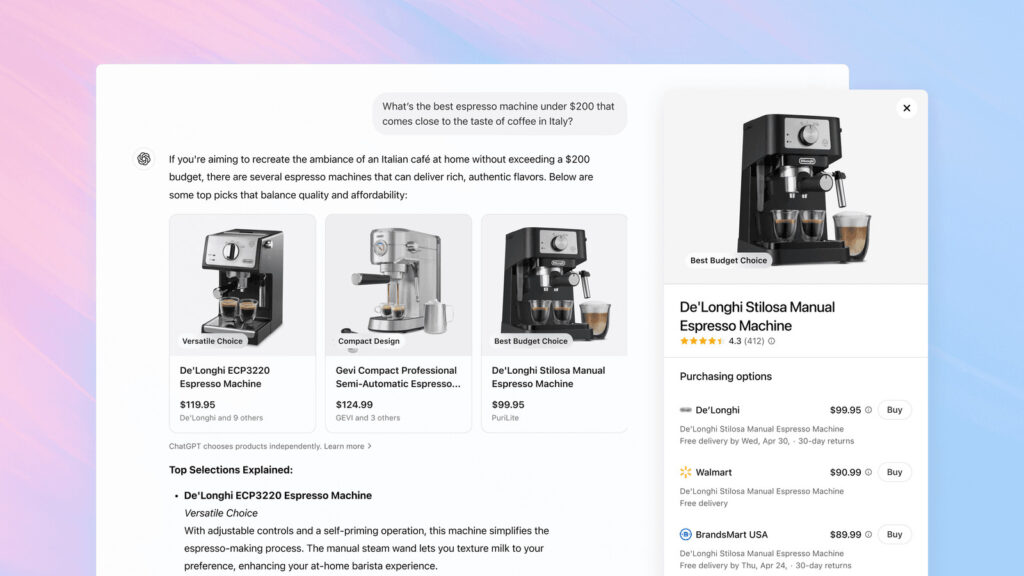

Q: What is the main concern with AI integrations in products?

A: The main concern is security. AI features are vulnerable to prompt injection and prone to hallucinations, and either can have serious consequences.

Q: Why are user warnings not effective in preventing threats?

A: User warnings may not be effective because users may not fully understand the risks, or they may become habituated to clicking "yes" without carefully considering the implications.

Q: What is the criticism of Microsoft’s approach to AI security?

A: The criticism is that Microsoft’s warning is a mere "cover your ass" (CYA) maneuver, aiming to shield the company from liability rather than providing effective protection.

Q: How do AI integrations from other companies compare to Microsoft’s approach?

A: The criticisms of Microsoft’s approach extend to AI offerings from other companies, including Apple, Google, and Meta, which also raise concerns about potential risks and consequences.