Introduction to Deep Learning

Deep learning is a subset of machine learning that uses neural networks with multiple layers to analyze and interpret data. These neural networks are loosely inspired by the human brain, with layers of interconnected nodes (neurons) that process and transmit information.

What is a Perceptron?

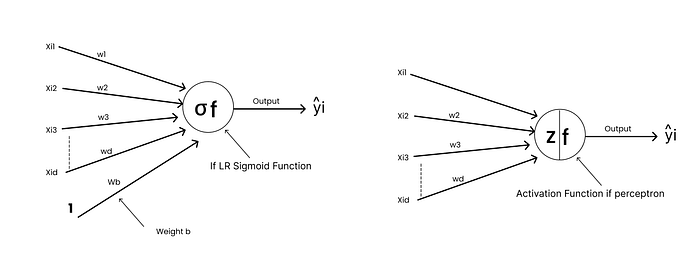

A Perceptron is a simplified model of a single neuron. It’s a basic building block of neural networks, and it’s used to make predictions based on input data. The Perceptron takes in a set of inputs, computes a weighted sum of those inputs plus a bias, and then passes the result through a threshold to produce an output.
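To make the input, computation, and output steps concrete, here is a minimal sketch of a Perceptron in plain Python. The function name `perceptron_predict` and the hand-picked weights and bias are illustrative assumptions, chosen so that the neuron behaves like a logical AND gate; a real Perceptron would learn these values from data.

```python
def perceptron_predict(inputs, weights, bias):
    """Return 1 if the weighted sum of the inputs plus the bias is positive, else 0."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if weighted_sum > 0 else 0


# Hypothetical, hand-picked parameters (not learned) that mimic an AND gate.
and_weights = [1.0, 1.0]
and_bias = -1.5

# Evaluate the perceptron on all four combinations of two binary inputs.
and_outputs = [
    perceptron_predict([a, b], and_weights, and_bias)
    for a in (0, 1)
    for b in (0, 1)
]
print(and_outputs)  # [0, 0, 0, 1]
```

Only the input pair (1, 1) pushes the weighted sum above zero, so it is the only case where the Perceptron outputs 1.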

Logistic Regression and Perceptrons

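Logistic Regression is a statistical model used for predicting the outcome of a categorical dependent variable, most often a binary one. It’s conceptually similar to a Perceptron, since both compute a weighted sum of their inputs; the difference is the activation function, the mathematical function that’s applied to a node’s weighted sum. The classic Perceptron uses a step function that outputs either 0 or 1, while Logistic Regression uses the sigmoid function, which outputs a value between 0 and 1 that can be read as a probability.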
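The comparison can be sketched in a few lines of Python. This is an illustration under assumed values, not a full implementation of either model: the helper names (`weighted_sum`, `step`, `sigmoid`) and the example inputs, weights, and bias are made up for the demonstration. Both models share the same weighted sum and differ only in the function applied to it.

```python
import math


def weighted_sum(inputs, weights, bias):
    """Shared first step of both models: a weighted sum of the inputs plus a bias."""
    return sum(x * w for x, w in zip(inputs, weights)) + bias


def step(z):
    """Perceptron-style activation: a hard 0/1 decision."""
    return 1 if z > 0 else 0


def sigmoid(z):
    """Logistic-regression-style activation: a smooth value between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical example values, chosen only for illustration.
inputs = [2.0, -1.0]
weights = [0.5, 0.5]
bias = 0.0

z = weighted_sum(inputs, weights, bias)  # 2.0 * 0.5 + (-1.0) * 0.5 + 0.0 = 0.5
perceptron_output = step(z)              # hard decision: 1
logistic_output = sigmoid(z)             # roughly 0.62, readable as a probability

print(perceptron_output, round(logistic_output, 4))
```

The step function discards how far the weighted sum is from the threshold, while the sigmoid keeps that information as a graded score.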

Multi-Layered Perceptrons (MLPs)

A Multi-Layered Perceptron (MLP) is an evolution of single neuron models (Perceptrons). It’s a type of neural network that consists of multiple layers of interconnected nodes (neurons). Each layer processes and transmits information to the next layer, allowing the network to learn complex, non-linear patterns in data.

How MLPs Work

MLPs work by performing a series of mathematical operations on the input data. Each node in the network receives a set of inputs, computes a weighted sum of those inputs (usually plus a bias term), and then applies an activation function to the result. The output of each node is then transmitted to the next layer, where the process is repeated until the final layer produces the network’s output.
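The layer-by-layer process described above can be sketched as a forward pass in plain Python. The network shape (2 inputs, 3 hidden nodes, 1 output), the weights and biases, the choice of sigmoid as the activation, and the function names `layer_forward` and `mlp_forward` are all assumptions made for illustration; in practice the weights are learned during training.

```python
import math


def sigmoid(z):
    """Squash a weighted sum into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(inputs, weights, biases, activation):
    """Compute one layer: each row of `weights` holds the weights of one node."""
    return [
        activation(sum(x * w for x, w in zip(inputs, node_weights)) + bias)
        for node_weights, bias in zip(weights, biases)
    ]


def mlp_forward(inputs, layers):
    """Feed the inputs through every layer in order and return the final outputs."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases, sigmoid)
    return activations


# Hypothetical 2-3-1 network with made-up (untrained) parameters.
layers = [
    # Hidden layer: 3 nodes, each with 2 weights and 1 bias.
    ([[0.1, 0.2], [-0.3, 0.4], [0.5, -0.6]], [0.0, 0.1, -0.1]),
    # Output layer: 1 node with 3 weights and 1 bias.
    ([[0.7, -0.8, 0.9]], [0.05]),
]

output = mlp_forward([1.0, 2.0], layers)
print(output)  # a single value between 0 and 1
```

Each layer’s output list becomes the next layer’s input list, which is the "transmit to the next layer" step in code form.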

Importance of Activation Functions

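Activation functions are a crucial component of MLPs. They introduce non-linearity into the network, allowing it to learn complex patterns in data. Without activation functions, every layer would be a linear transformation, and a stack of linear transformations is itself just one linear transformation, so the network would only be able to learn linear relationships no matter how many layers it had.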
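A small numeric check illustrates why this matters. In the sketch below, two layers without an activation function give exactly the same result as a single layer whose weight matrix is the product of the two. The matrices `W1` and `W2`, the input `x`, and the helper names are made-up values for illustration, biases are left out for brevity, and ReLU is used as one example of a non-linear activation.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    b_columns = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in b_columns] for row in a]


def relu(vector):
    """Example non-linear activation: replace negative values with zero."""
    return [max(0.0, v) for v in vector]


# Hypothetical weights for a 2-input, 3-hidden-node, 1-output network (no biases).
W1 = [[1.0, 2.0], [0.0, -1.0], [3.0, 1.0]]  # hidden layer, 3 x 2
W2 = [[2.0, 1.0, -1.0]]                     # output layer, 1 x 3
x = [4.0, 5.0]

# Two layers with no activation function in between.
two_layer_output = matvec(W2, matvec(W1, x))

# A single layer whose weights are the product W2 * W1.
one_layer_output = matvec(matmul(W2, W1), x)

# The same two layers with a ReLU between them.
nonlinear_output = matvec(W2, relu(matvec(W1, x)))

print(two_layer_output, one_layer_output, nonlinear_output)  # [6.0] [6.0] [11.0]
```

The first two results match, so the extra layer added nothing. With the ReLU in place, the hidden value of -5 is clipped to 0 and the output changes, which is the kind of behavior a single linear layer cannot reproduce.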

Applications of MLPs

MLPs have a wide range of applications in machine learning and data science. They can be used for classification, regression, and feature learning tasks. They’re particularly useful for solving intricate problems that involve complex, non-linear relationships.

Conclusion

Multi-Layered Perceptrons (MLPs) extend the single-neuron Perceptron into multiple layers of interconnected nodes (neurons) that process and transmit information. Combined with non-linear activation functions, these layers allow the network to learn complex, non-linear patterns in data, which makes MLPs useful for classification, regression, and feature learning tasks.

FAQs

What is the difference between a Perceptron and an MLP?

A Perceptron is a simplified model of a single neuron, while an MLP is a type of neural network that consists of multiple layers of interconnected nodes (neurons).

What is the role of activation functions in MLPs?

Activation functions introduce non-linearity into the network, allowing it to learn complex patterns in data.

What are some applications of MLPs?

MLPs have a wide range of applications in machine learning and data science, including classification, regression, and feature learning tasks.