Introduction to Nvidia’s New AI-Accelerating GPUs

Nvidia CEO Jensen Huang unveiled several new AI-accelerating GPUs at the company’s GTC 2025 conference in San Jose, California. The chips are slated to roll out through 2027, and Nvidia says they will deliver large performance gains for AI training and inference.

What is Vera Rubin?

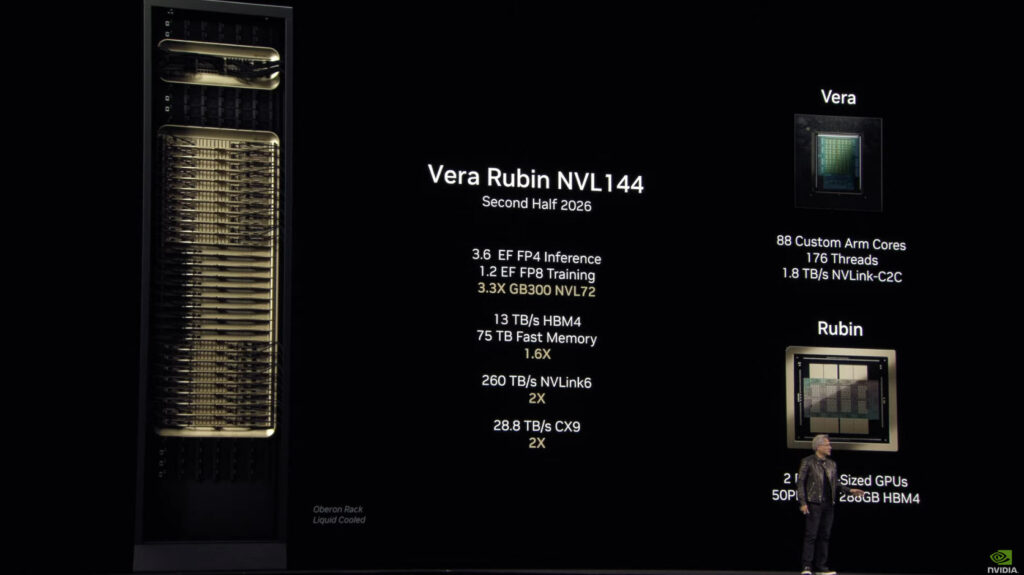

The centerpiece announcement was Vera Rubin, a GPU named after the American astronomer Vera Rubin, whose measurements of galaxy rotation provided key evidence for dark matter. Vera Rubin is scheduled for release in the second half of 2026 and will feature tens of terabytes of memory and a custom Nvidia-designed CPU called Vera. According to Nvidia, it will substantially outperform its predecessor, Grace Blackwell, in both AI training and inference.

Specifications of Vera Rubin

Vera Rubin combines two GPUs in a single package, delivering 50 petaflops of FP4 inference performance per chip. Configured as a full NVL144 rack, the system delivers 3.6 exaflops of FP4 inference compute, about 3.3 times the 1.1 exaflops of Blackwell Ultra in a similar rack configuration. The Vera CPU has 88 custom Arm cores and 176 threads, and it connects to the Rubin GPUs over a 1.8 TB/s NVLink interface.
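The rack-level comparison above is simple arithmetic on the quoted figures. The following minimal Python sketch reproduces it; the constant and function names are illustrative, and the chip count it derives is an inference from the quoted numbers, not a figure stated in the announcement.

```python
# Figures quoted in the article, converted to petaflops
# (1 exaflop = 1,000 petaflops).
RUBIN_NVL144_RACK_PF = 3_600     # 3.6 exaflops of FP4 inference per rack
BLACKWELL_ULTRA_RACK_PF = 1_100  # 1.1 exaflops of FP4 inference per rack
RUBIN_CHIP_PF = 50               # FP4 inference per Vera Rubin chip


def ratio(new_pf: int, old_pf: int) -> float:
    """Return how many times larger new_pf is than old_pf."""
    return new_pf / old_pf


rack_gain = ratio(RUBIN_NVL144_RACK_PF, BLACKWELL_ULTRA_RACK_PF)

# Assumption: rack throughput is the plain sum of per-chip throughput.
implied_chips = RUBIN_NVL144_RACK_PF // RUBIN_CHIP_PF

print(f"Rack-level gain over Blackwell Ultra: {rack_gain:.1f}x")  # 3.3x
print(f"Chips implied per NVL144 rack: {implied_chips}")          # 72
```

Under that assumption, 72 chips of two GPUs each would be consistent with the 144 in the rack’s name.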

What is Rubin Ultra?

Huang also announced Rubin Ultra, which will follow in the second half of 2027. Rubin Ultra will use the NVL576 rack configuration, and each GPU package will contain four reticle-sized dies, delivering 100 petaflops of FP4 performance per chip. At the rack level, Rubin Ultra will provide 15 exaflops of FP4 inference compute and 5 exaflops of FP8 training performance, roughly four times the FP4 inference compute of the Rubin NVL144 configuration. Each Rubin Ultra GPU will include 1TB of HBM4e memory, and the complete rack will contain 365TB of fast memory.
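As a rough check on the "about four times" claim, the sketch below divides the rack-level figures quoted in this article. It compares FP4 inference only, since no FP8 training figure for Rubin NVL144 is given here; the variable names are illustrative.

```python
# Rack-level figures quoted in the article, in exaflops.
ULTRA_NVL576_FP4_EF = 15.0  # Rubin Ultra, FP4 inference
ULTRA_NVL576_FP8_EF = 5.0   # Rubin Ultra, FP8 training
NVL144_FP4_EF = 3.6         # Vera Rubin NVL144, FP4 inference

# Generation-over-generation gain in FP4 inference compute.
fp4_gain = ULTRA_NVL576_FP4_EF / NVL144_FP4_EF

# Ratio between the quoted FP4 inference and FP8 training figures
# for the same Rubin Ultra rack.
fp4_to_fp8 = ULTRA_NVL576_FP4_EF / ULTRA_NVL576_FP8_EF

print(f"Rubin Ultra vs. Rubin NVL144 (FP4): {fp4_gain:.2f}x")  # 4.17x
print(f"Rubin Ultra FP4 vs. FP8 figure: {fp4_to_fp8:.1f}x")    # 3.0x
```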

Conclusion

Vera Rubin and Rubin Ultra extend Nvidia’s AI GPU roadmap through 2027. By Nvidia’s figures, a Rubin NVL144 rack will deliver about 3.3 times the FP4 inference compute of Blackwell Ultra, and a Rubin Ultra NVL576 rack will deliver roughly four times that of Rubin NVL144.

FAQs

- Q: What is Vera Rubin?

A: Vera Rubin is a GPU named after astronomer Vera Rubin, scheduled for release in the second half of 2026.

- Q: What are the specifications of Vera Rubin?

A: Vera Rubin combines two GPUs in a single package, delivering 50 petaflops of FP4 inference performance per chip.

- Q: What is Rubin Ultra?

A: Rubin Ultra is a GPU that will follow in the second half of 2027. It uses the NVL576 rack configuration, and each GPU package contains four reticle-sized dies.

- Q: What are the benefits of Nvidia’s new AI-accelerating GPUs?

A: According to Nvidia, the new GPUs will deliver significant performance improvements for AI training and inference: about 3.3 times the rack-level FP4 inference compute of Blackwell Ultra for Vera Rubin, and roughly four times that again for Rubin Ultra.