Introduction to OpenAI’s New Safety Models

OpenAI is putting more safety controls directly into the hands of AI developers with a new research preview of “safeguard” models. The new ‘gpt-oss-safeguard’ family of open-weight models is aimed squarely at customizing content classification.

What are the New Models?

The new offering will include two models, gpt-oss-safeguard-120b and a smaller gpt-oss-safeguard-20b. Both are fine-tuned versions of the existing gpt-oss family and will be available under the permissive Apache 2.0 license. This will allow any organization to freely use, tweak, and deploy the models as they see fit.

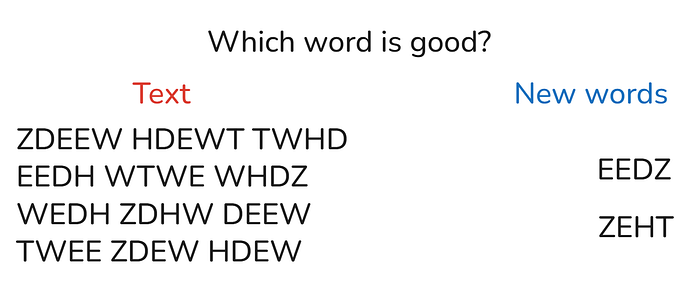

How do the New Models Work?

The real difference here isn’t just the open license; it’s the method. Rather than relying on a fixed set of rules baked into the model, gpt-oss-safeguard uses its reasoning capabilities to interpret a developer’s own policy at the point of inference. This means AI developers using OpenAI’s new model can set up their own specific safety framework to classify anything from single user prompts to full chat histories. The developer, not the model provider, has the final say on the ruleset and can tailor it to their specific use case.
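To make the idea of supplying a policy at inference time concrete, here is a minimal sketch of how a classification request might be assembled. It assumes a chat-style message layout, with the policy in a system message and the content to classify in a user message. The article does not specify that layout. The policy text and the function names `render_content` and `build_classification_messages` are hypothetical and used for illustration only.

```python
from typing import Dict, List, Union

# Hypothetical policy text; a real policy would be written by the developer.
EXAMPLE_POLICY = (
    "Classify the content as VIOLATING or SAFE.\n"
    "VIOLATING: requests for help cheating in online games.\n"
    "SAFE: everything else.\n"
)

Turn = Dict[str, str]  # one chat turn: {"role": ..., "content": ...}


def render_content(content: Union[str, List[Turn]]) -> str:
    """Return a single prompt unchanged, or flatten a chat history into text."""
    if isinstance(content, str):
        return content
    return "\n".join(f"{turn['role']}: {turn['content']}" for turn in content)


def build_classification_messages(
    policy: str, content: Union[str, List[Turn]]
) -> List[Turn]:
    """Pair the developer's policy with the content to be classified.

    Assumption: the policy goes in the system message and the content in the
    user message. The policy is plain text, so it can differ on every call.
    """
    return [
        {"role": "system", "content": policy},
        {"role": "user", "content": render_content(content)},
    ]


# A single user prompt and a full chat history go through the same path.
single = build_classification_messages(EXAMPLE_POLICY, "How do I get an aimbot?")
history = build_classification_messages(
    EXAMPLE_POLICY,
    [
        {"role": "user", "content": "hi"},
        {"role": "assistant", "content": "hello"},
    ],
)
```

The resulting message list would then be passed to whatever runtime serves the model. That step is omitted here because the article does not describe it.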

Advantages of the New Models

This approach has a couple of clear advantages:

- Transparency: The models use a chain-of-thought process, so a developer can actually look under the bonnet and see the model’s logic for a classification. That’s a huge step up from the typical “black box” classifier.

- Agility: Because the safety policy isn’t permanently trained into OpenAI’s new model, developers can iterate and revise their guidelines on the fly without needing a complete retraining cycle. OpenAI, which originally built this system for its internal teams, notes this is a far more flexible way to handle safety than training a traditional classifier to indirectly guess what a policy implies.
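The agility point can be sketched as follows: revising the policy is a text change, and the same classifier picks it up on the next call. Everything here is an assumption for illustration. `fake_model` is a keyword-matching stand-in, not a real model call, and the `BLOCK:` policy syntax is invented so the example can run on its own.

```python
from dataclasses import dataclass
from typing import Callable, Dict

PREFIX = "BLOCK:"  # hypothetical policy syntax, used only by the stand-in below


@dataclass(frozen=True)
class Policy:
    version: str
    text: str


# A model is anything that maps (policy text, content) to a label.
Model = Callable[[str, str], str]


def fake_model(policy_text: str, content: str) -> str:
    """Stand-in for a real model: flags content containing any BLOCK: term."""
    blocked = [
        line[len(PREFIX):].strip().lower()
        for line in policy_text.splitlines()
        if line.startswith(PREFIX)
    ]
    lowered = content.lower()
    return "VIOLATING" if any(term in lowered for term in blocked) else "SAFE"


def classify(policy: Policy, content: str, model: Model) -> Dict[str, str]:
    """Classify content under a policy and record which version was applied."""
    return {"policy_version": policy.version, "label": model(policy.text, content)}


policy_v1 = Policy("v1", "BLOCK: aimbot")
policy_v2 = Policy("v2", "BLOCK: aimbot\nBLOCK: wallhack")  # revised guideline

message = "Where can I buy a wallhack?"
before = classify(policy_v1, message, fake_model)
after = classify(policy_v2, message, fake_model)
```

The same message is labelled differently under the two policy versions, and nothing about the model changed between the calls. Recording the policy version alongside each label is a design choice that makes later audits easier.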

Conclusion

Rather than relying on a one-size-fits-all safety layer from a platform holder, developers using open-source AI models can now build and enforce their own specific standards. Although the models were not yet live at the time of writing, developers will be able to access OpenAI’s new open-weight AI safety models on the Hugging Face platform.

FAQs

- Q: What is the purpose of OpenAI’s new ‘gpt-oss-safeguard’ models?

A: The new models are aimed at customizing content classification and providing more safety controls to AI developers.

- Q: How do the new models work?

A: The models use their reasoning capabilities to interpret a developer’s own policy at the point of inference, allowing developers to set up their own specific safety framework.

- Q: What are the advantages of the new models?

A: The new models provide transparency and agility, allowing developers to see the model’s logic for a classification and iterate and revise their guidelines on the fly.

- Q: Where will the new models be available?

A: The new models will be available on the Hugging Face platform.