Introduction to Running Small Language Models on CPUs

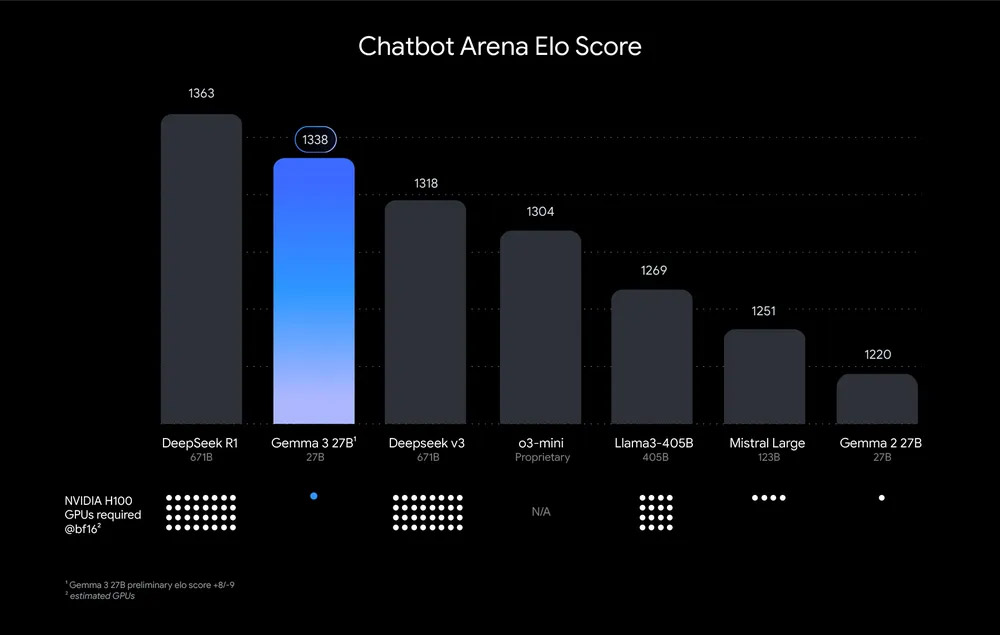

Traditionally, Large Language Model (LLM) inference required expensive GPUs. With recent advances, however, CPUs are back in the game for cost-efficient, small-scale inference. Three big shifts made this possible: smarter models, CPU-friendly runtimes, and quantization. First, Small Language Models (SLMs) are improving quickly and are purpose-built for efficiency. Second, frameworks such as llama.cpp and vLLM, along with Intel's CPU optimizations, bring serving techniques once associated with GPUs to CPUs. Third, quantization compresses model weights to lower precision (for example, 8-bit or 4-bit integers instead of 16-bit floats), drastically reducing memory footprint and latency with minimal accuracy loss.
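To make the quantization idea concrete, here is a minimal sketch of symmetric (absmax) 8-bit quantization in plain Python: each weight is divided by a per-tensor scale, rounded to an integer in [-127, 127], and multiplied back by the scale when it is used. This is a simplified illustration, not the exact block-wise schemes that llama.cpp's GGUF quantization types use, and the weight values are made up.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid division by zero for all-zero tensors
    q = [round(w / scale) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the integer codes and the scale."""
    return [v * scale for v in q]


# Hypothetical weights: as float32 each takes 4 bytes; as int8 codes each takes 1 byte.
weights = [0.12, -0.53, 0.98, -1.27, 0.004]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, round(scale, 4), round(max_error, 4))
```

Storing 1-byte codes instead of 4-byte float32 values cuts weight memory by 4x (2x versus float16); the price is a rounding error of at most half the scale per weight.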

Why SLMs on CPUs are Trending

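A common sweet spot for CPU deployment is an 8B-parameter model quantized to 4-bit or a 4B-parameter model quantized to 8-bit. If you're working with a small language model, the GGUF format makes life much easier. GGUF is the file format used by llama.cpp: instead of juggling separate weight, tokenizer, and config files, you get a single portable file containing the (typically quantized) weights, tokenizer, and model metadata. That file loads in llama.cpp and the tools built on it, such as Ollama, LM Studio, and GPT4All, and a quantized GGUF takes far less disk space than the original checkpoint. The format is designed for fast loading and efficient inference.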
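A quick back-of-the-envelope calculation shows why those two configurations land in the same place: weight memory is roughly parameters × bits per weight / 8 bytes. The sketch below counts weights only; real GGUF files are somewhat larger because they also store quantization scales, some tensors at higher precision, and metadata, and the runtime needs extra memory for the KV cache.

```python
def weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


configs = [
    ("8B params @ 4-bit", 8e9, 4),
    ("4B params @ 8-bit", 4e9, 8),
    ("8B params @ 16-bit (unquantized)", 8e9, 16),
]
for label, n_params, bits in configs:
    print(f"{label}: ~{weight_memory_gb(n_params, bits):.1f} GB")
```

Both quantized configurations come to about 4 GB of weights, a quarter of the roughly 16 GB the same 8B model needs at 16-bit precision.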

When CPUs Make Sense

Strengths

CPUs have several strengths:

- Very low cost (especially on cloud CPUs like AWS Graviton).

- Great for single-user, low-throughput workloads.

- Privacy-friendly (local or edge deployment).

Limitations

CPUs also have some limitations:

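- Batch size is typically 1, so CPUs handle many concurrent requests poorly.

- Practical context windows are smaller, because long prompts are slow to process and enlarge the KV cache.

- Throughput is lower than on a GPU.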
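Two rough estimates explain these limits. During token generation at batch size 1, every new token requires reading essentially all of the model weights from memory, so memory bandwidth divided by model size gives an upper bound on tokens per second. Separately, the KV cache grows linearly with context length. The sketch below assumes a hypothetical 50 GB/s of memory bandwidth and Mistral-7B-like dimensions (32 layers, 8 key/value heads, head size 128); substitute the numbers for your own hardware and model.

```python
def max_decode_tokens_per_s(bandwidth_gb_s: float, model_size_gb: float) -> float:
    """Upper bound for batch-1 decoding: each token streams all weights once from RAM."""
    return bandwidth_gb_s / model_size_gb


def kv_cache_gb(n_layers: int, n_kv_heads: int, head_dim: int,
                n_ctx: int, bytes_per_value: int = 2) -> float:
    """KV cache size: keys and values (x2) for every layer, KV head, and context position."""
    return 2 * n_layers * n_kv_heads * head_dim * n_ctx * bytes_per_value / 1e9


# Assumed hardware: ~50 GB/s of memory bandwidth; ~4 GB model (7-8B params at 4-bit).
print(f"Decode upper bound: ~{max_decode_tokens_per_s(50, 4):.1f} tokens/s")

# Assumed Mistral-7B-like shape with a 16-bit (2-byte) cache.
for n_ctx in (4096, 32768):
    print(f"KV cache @ {n_ctx} tokens: ~{kv_cache_gb(32, 8, 128, n_ctx):.2f} GB")
```

Real throughput stays below this bound, and long prompts also cost time because prompt processing is compute-bound on CPUs. A GPU's much higher memory bandwidth raises the same bound proportionally, which is a large part of the throughput gap.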

Real-World Example

A real-world example of CPUs making sense: grocery stores using SLMs on Graviton to check inventory levels. Contexts are short and throughput needs are low, so the setup is very cost-efficient.

SLMs vs LLMs: A Hybrid Strategy

Enterprises don’t have to choose one or the other; a hybrid approach often works best:

- LLMs → abstraction tasks (summarization, sentiment analysis, knowledge extraction).

- SLMs → operational tasks (ticket classification, compliance checks, internal search).

- Integration → embed both into CRM, ERP, and HRMS systems via APIs.
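A minimal sketch of how the hybrid split might look in an integration layer: a router that sends operational tasks to a CPU-hosted SLM and abstraction tasks to an LLM. The task names and both endpoint URLs are hypothetical placeholders, not part of any product's API.

```python
# Hypothetical endpoints: replace with your own SLM and LLM deployments.
SLM_ENDPOINT = "http://slm.internal:8080/v1/chat/completions"
LLM_ENDPOINT = "https://llm.example.com/v1/chat/completions"

# Hypothetical task labels mirroring the split described above.
SLM_TASKS = {"ticket_classification", "compliance_check", "internal_search"}
LLM_TASKS = {"summarization", "sentiment_analysis", "knowledge_extraction"}


def route(task_type: str) -> str:
    """Return the endpoint that should handle a given task type."""
    if task_type in SLM_TASKS:
        return SLM_ENDPOINT  # cheap, CPU-served operational work
    if task_type in LLM_TASKS:
        return LLM_ENDPOINT  # heavier abstraction work
    raise ValueError(f"Unknown task type: {task_type}")


print(route("ticket_classification"))
```

Raising on unknown task types keeps routing explicit; a real system might instead fall back to the LLM when a task is unclassified.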

The CPU Inference Tech Stack

The CPU inference tech stack includes:

Inference Runtimes

In simple terms, these are the engines doing the math:

- llama.cpp (C++ CPU-first runtime, with GGUF format).

- GGML / GGUF (tensor library + model format).

- vLLM (GPU-first but CPU-capable).

- MLC LLM (portable compiler/runtime).
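To see what "model format" means in practice, the sketch below reads the fixed-size header at the start of a GGUF file, following the GGUF specification in the ggml repository: the 4-byte magic `GGUF`, a little-endian uint32 format version, then uint64 counts of tensors and metadata key/value pairs (GGUF version 2 and later; version 1 used 32-bit counts). It stops at the header and does not parse the metadata or tensor data; the path in the usage comment is hypothetical.

```python
import struct


def read_gguf_header(path: str) -> dict:
    """Read the magic, version, tensor count, and metadata count from a GGUF file."""
    with open(path, "rb") as f:
        header = f.read(24)  # 4 (magic) + 4 (version) + 8 (tensor count) + 8 (KV count)
    if len(header) < 24 or header[:4] != b"GGUF":
        raise ValueError(f"{path} is not a GGUF file")
    # "<" = little-endian, no padding; I = uint32, Q = uint64
    version, n_tensors, n_kv = struct.unpack("<IQQ", header[4:])
    if version < 2:
        raise ValueError("GGUF v1 uses 32-bit counts; not handled in this sketch")
    return {"version": version, "tensor_count": n_tensors, "metadata_kv_count": n_kv}


# Usage (hypothetical path):
# print(read_gguf_header("mistral-7b-instruct-v0.2.Q4_K_M.gguf"))
```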

Local Wrappers / Launchers

In simple terms, these are the user-friendly layers on top of runtime engines:

- Ollama (CLI/API, llama.cpp under the hood).

- GPT4All (desktop app).

- LM Studio (GUI app for Hugging Face models).
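Because both llama.cpp's `llama-server` and Ollama expose an OpenAI-compatible `/v1/chat/completions` endpoint, the same client code can target either one by changing the base URL. The sketch below uses only the Python standard library and assumes each tool runs locally on its default port (8080 for `llama-server`, 11434 for Ollama); the model name `mistral` is a placeholder for whatever model you have loaded or pulled.

```python
import json
import urllib.request

# Assumed default local ports for each backend.
BASE_URLS = {
    "llama.cpp": "http://localhost:8080/v1",
    "ollama": "http://localhost:11434/v1",
}


def build_chat_request(backend: str, model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request for an OpenAI-style chat completion on the chosen backend."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        f"{BASE_URLS[backend]}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Usage (requires a running server):
# with urllib.request.urlopen(build_chat_request("ollama", "mistral", "Hello!")) as resp:
#     print(json.load(resp)["choices"][0]["message"]["content"])
```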

Hands-On Exercise: Serving a Translation SLM on CPU with llama.cpp + EC2

A high-level 4-step process:

Step 1. Local Setup

A. Install prerequisites:

```bash
# System deps (libcurl lets llama-server download models with -hf)
sudo apt update && sudo apt install -y git build-essential cmake libcurl4-openssl-dev python3-pip
# Python deps
pip install streamlit requests
```

B. Build llama.cpp (if not already built):

```bash
git clone https://github.com/ggerganov/llama.cpp.git
cd llama.cpp
mkdir -p build && cd build
cmake .. -DLLAMA_BUILD_SERVER=ON
cmake --build . --config Release
cd ..
```

C. Run the server with a GGUF model suited to your use case:

```bash
./build/bin/llama-server -hf TheBloke/Mistral-7B-Instruct-v0.2-GGUF --port 8080
```

Now you have a local, OpenAI-compatible HTTP API at http://localhost:8080.
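Before wiring up a frontend, it can help to confirm the server is ready: the first run downloads the model, and loading takes a while. `llama-server` provides a `/health` endpoint that returns HTTP 200 once the model has loaded (and 503 while it is still loading). The standard-library sketch below polls it; the default base URL assumes the port used above, and the timeout values are arbitrary choices.

```python
import time
import urllib.request


def wait_for_llama_server(base_url: str = "http://localhost:8080",
                          timeout_s: float = 300, poll_s: float = 2) -> bool:
    """Poll GET /health until it returns 200 (model loaded) or the timeout expires."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(f"{base_url}/health", timeout=5) as resp:
                if resp.status == 200:
                    return True
        except OSError:
            # URLError (connection refused) and HTTPError (e.g. 503 while loading)
            # are both OSError subclasses: the server is not ready yet.
            pass
        time.sleep(poll_s)
    return False


# Usage:
# print("ready" if wait_for_llama_server() else "llama-server did not become ready")
```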

Step 2. Create a Streamlit App for the Frontend

Save as app.py:

```python
import requests
import streamlit as st

st.set_page_config(page_title="SLM Translator", page_icon="🌍", layout="centered")
st.title("🌍 CPU-based SLM Translator")
st.write("Test translation with a local llama.cpp model served on CPU.")

# Inputs
source_text = st.text_area("Enter English text to translate:", "Hello, how are you today?")
target_lang = st.selectbox("Target language:", ["French", "German", "Spanish", "Tamil"])

if st.button("Translate"):
    prompt = f"Translate the following text into {target_lang}: {source_text}"
    payload = {
        # llama-server answers with the model it was started with; this name is mostly informational
        "model": "mistral-7b",
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 200,
    }
    try:
        # CPU generation can be slow, so allow a generous timeout
        response = requests.post(
            "http://localhost:8080/v1/chat/completions", json=payload, timeout=120
        )
        if response.status_code == 200:
            data = response.json()
            translation = data["choices"][0]["message"]["content"]
            st.success(translation)
        else:
            st.error(f"Error: {response.text}")
    except requests.exceptions.RequestException as e:
        st.error(f"Could not connect to llama.cpp server. Is it running?\n\n{e}")
```

Step 3. Run Locally and Test Your App

- Start llama-server in one terminal:

```bash
./build/bin/llama-server -hf TheBloke/Mistral-7B-Instruct-v0.2-GGUF --port 8080
```

- Start Streamlit in another terminal:

```bash
streamlit run app.py
```

- Open your browser at http://localhost:8501, enter some text, and get translations.

Step 4. Deploy to AWS EC2

You have two options: a simple manual install (Option A) or Docker (Option B).

Option A. Simple (manual install)

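- Launch an EC2 instance (Graviton or x86) with at least 16 GB of RAM.

- SSH in and repeat the setup from Steps 1 and 2 (install the prerequisites, build llama.cpp, and copy app.py).

- Run:

```bash
# Model server in the background (llama-server binds to localhost by default)
nohup ./build/bin/llama-server -hf TheBloke/Mistral-7B-Instruct-v0.2-GGUF --port 8080 > llama-server.log 2>&1 &
# UI on all interfaces; ports below 1024 (such as 80) need root, so use Streamlit's default 8501
nohup streamlit run app.py --server.port 8501 --server.address 0.0.0.0 > streamlit.log 2>&1 &
```

- Allow inbound TCP traffic on port 8501 in the instance's security group, then open `http://<EC2-public-IP>:8501` in your browser.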

Option B. Docker (portable, easier)

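Build and run the image from a project directory containing a Dockerfile that builds llama.cpp and starts both llama-server and the Streamlit app:

```bash
docker build -t slm-translator .
docker run -p 8501:8501 -p 8080:8080 slm-translator
```

Then test at http://localhost:8501 (local) or `http://<EC2-public-IP>:8501` (on EC2).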

Conclusion

With this, you get a full loop: local testing → deploy on EC2 → translation UI. CPUs are a great option for running small language models, especially when cost and efficiency are a priority.

FAQs

Q: What is the difference between SLMs and LLMs?

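A: SLMs have fewer parameters (for example, the 4B to 8B models discussed above), so they need less memory and compute; LLMs are larger and generally more capable on complex, open-ended tasks.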

Q: What is GGUF?

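A: GGUF is the file format used by llama.cpp and GGML-based tools. It stores a model's (usually quantized) weights together with its tokenizer and metadata in a single portable file, which makes models efficient to load and easy to move between runtimes.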

Q: Can I run SLMs on GPUs?

A: Yes, but CPUs are often a more cost-efficient option for small-scale inference.

Q: How do I deploy my SLM to AWS EC2?

A: You can deploy your SLM to EC2 using either a manual install or Docker.

Q: What is the benefit of using a hybrid strategy with SLMs and LLMs?

A: A hybrid strategy allows you to use the strengths of both SLMs and LLMs, depending on the specific task or application.