Introduction to AI Hallucinations

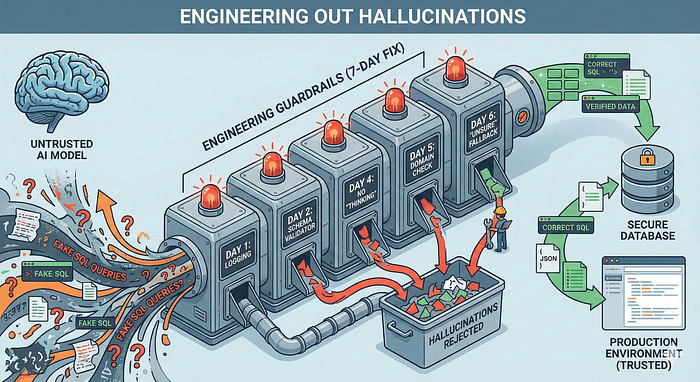

The article discusses the author’s experience dealing with AI hallucinations in production systems without changing the model itself. The author implemented a series of engineering controls, such as logging everything, validating outputs, and allowing the model to express uncertainty, which collectively resulted in a significant reduction in hallucinations and errors.

What are AI Hallucinations?

AI hallucinations are outputs that a machine learning model presents as fact but that are not supported by its input or by any real data. They stem from the model’s own biases or errors, and because they often read as plausible, they can produce inaccurate or misleading results with serious consequences in real-world applications.

The Author’s Approach

The author took a different approach to addressing AI hallucinations. Instead of switching models, fine-tuning, or adding new data, they simply stopped trusting the AI. In practice, this meant implementing a series of engineering controls that check model outputs against reality before anything downstream relies on them.

Engineering Controls

The author implemented several engineering controls to reduce hallucinations and errors. These included:

- Logging everything: This involved keeping a record of all inputs, outputs, and errors to identify patterns and areas for improvement.

- Validating outputs: This involved checking the model’s outputs against real-world data to ensure accuracy and consistency.

- Allowing the model to express uncertainty: This involved giving the model the ability to indicate when it was unsure or lacked confidence in its outputs.
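The three controls above can be combined in a single wrapper around the model call. The following is a minimal sketch, not the author's implementation: the `model` callable, the `UNSURE` sentinel, and the `known_values` set are all hypothetical stand-ins for whatever model client and trusted data a real system would use.

```python
import json
import logging
from typing import Callable, Optional, Set

logger = logging.getLogger("llm_guard")

# Hypothetical sentinel the prompt asks the model to return when it is unsure.
UNSURE = "UNSURE"


def guarded_answer(
    model: Callable[[str], str],  # hypothetical stand-in for a real model client
    question: str,
    known_values: Set[str],       # hypothetical trusted data to validate against
) -> Optional[str]:
    """Return a validated answer, or None if the model abstains or is rejected."""
    prompt = f"{question}\nIf you are not certain, reply with exactly {UNSURE}."
    raw = model(prompt).strip()

    # Logging everything: record each prompt and raw output for later review.
    logger.info(json.dumps({"prompt": prompt, "raw_output": raw}))

    # Expressing uncertainty: accept an explicit abstention instead of a guess.
    if raw == UNSURE:
        logger.info(json.dumps({"event": "abstained"}))
        return None

    # Validating outputs: reject anything not found in the trusted data.
    if raw not in known_values:
        logger.warning(json.dumps({"event": "rejected", "raw_output": raw}))
        return None

    return raw
```

A caller would treat `None` as "no trustworthy answer" and fall back to a default or a human review step, rather than passing the raw model output along.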

Results

The author’s approach resulted in a significant reduction in hallucinations and errors. By enforcing reality and maintaining a critical stance toward model outputs, the author was able to improve the accuracy and reliability of the model without changing the model itself.

Conclusion

The author’s experience highlights the importance of critical thinking and skepticism when working with AI models. By implementing simple engineering controls and maintaining a critical stance toward model outputs, it is possible to reduce hallucinations and errors without changing the model itself. This approach can be applied to a wide range of AI applications, from image recognition to natural language processing.

FAQs

- Q: What are AI hallucinations?

A: AI hallucinations are outputs that a machine learning model presents as fact but that are not supported by its input or by any real data.

- Q: How can AI hallucinations be reduced?

A: AI hallucinations can be reduced by implementing engineering controls such as logging everything, validating outputs, and allowing the model to express uncertainty.

- Q: Do I need to change my AI model to reduce hallucinations?

A: No, it is possible to reduce hallucinations without changing the model itself by implementing simple engineering controls and maintaining a critical stance toward model outputs.

- Q: What are the benefits of reducing AI hallucinations?

A: Reducing AI hallucinations can improve the accuracy and reliability of AI models, leading to better decision-making and outcomes in a wide range of applications.