Introduction to AI Security Risks

According to Wiz, the race among AI companies is causing many to overlook basic security hygiene practices. The consequences are measurable: 65 percent of the 50 leading AI firms Wiz analyzed had leaked verified secrets on GitHub. The exposures include API keys, tokens, and sensitive credentials, often buried in code repositories that standard security tools do not check.

The Problem of Leaked Secrets

Glyn Morgan, Country Manager for UK&I at Salt Security, described this trend as a preventable and basic error. “When AI firms accidentally expose their API keys they lay bare a glaring avoidable security failure,” he said. An exposed key is particularly concerning because it gives attackers a “golden ticket” to systems, data, and models, effectively sidestepping the usual defensive layers.

Supply Chain Security Risks

The problem extends beyond internal development teams: as enterprises increasingly partner with AI startups, they may inherit those startups' security posture. The researchers warn that some of the leaks they found “could have exposed organisational structures, training data, or even private models.” The financial stakes are considerable, as the analyzed companies with verified leaks have a combined valuation of over $400 billion.

Examples of Leaks

The report provides examples of the risks, including:

- LangChain, which was found to have exposed multiple LangSmith API keys, some with permissions to manage the organisation and list its members.

- An enterprise-tier API key for ElevenLabs discovered sitting in a plaintext file.

- An unnamed AI 50 company had a HuggingFace token exposed in a deleted code fork, allowing access to about 1K private models.

The Limitations of Traditional Security Scanning

The Wiz report suggests this problem is so prevalent because traditional security scanning methods are no longer sufficient. Relying on basic scans of a company’s main GitHub repositories is a “commoditised approach” that misses the most severe risks. The researchers describe the situation as an “iceberg” where the most obvious risks are visible, but the greater danger lies “below the surface.”

Advanced Scanning Methodology

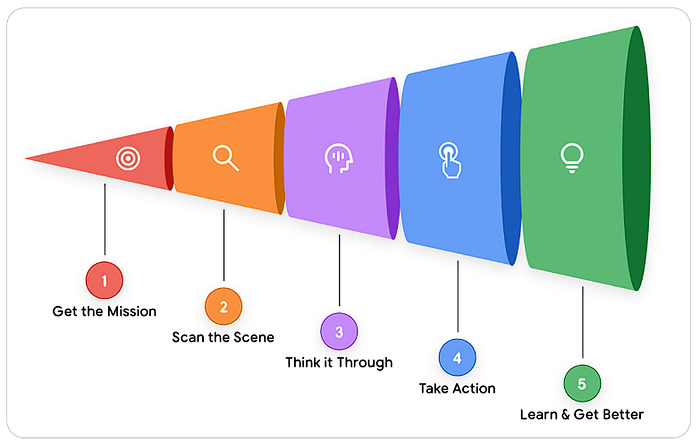

To find these hidden risks, the researchers adopted a three-dimensional scanning methodology they call “Depth, Perimeter, and Coverage”:

- Depth: Analyzing the full commit history, commit history on forks, deleted forks, workflow logs, and gists—areas most scanners “never touch.”

- Perimeter: Expanding the scan beyond the core company organisation to include organisation members and contributors.

- Coverage: Looking for new AI-related secret types that traditional scanners often miss, such as keys for platforms like WeightsAndBiases, Groq, and Perplexity.
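As a rough illustration of the Depth and Coverage dimensions, the sketch below searches a repository's full commit history for a few AI-related token shapes. It is a minimal example under stated assumptions, not Wiz's tooling: the token prefixes and minimum lengths in `PATTERNS` are illustrative guesses rather than formats published by the vendors, and the function names `find_secrets` and `scan_history` are hypothetical. It also covers only history reachable from local refs; deleted forks, workflow logs, gists, and the Perimeter dimension would need separate collection steps.

```python
import re
import subprocess

# ASSUMPTION: these prefixes and minimum lengths are illustrative guesses at
# token shapes, not official formats published by the vendors.
PATTERNS = {
    "huggingface": re.compile(r"\bhf_[A-Za-z0-9]{30,}\b"),
    "groq": re.compile(r"\bgsk_[A-Za-z0-9]{40,}\b"),
    "perplexity": re.compile(r"\bpplx-[A-Za-z0-9]{40,}\b"),
}


def find_secrets(text):
    """Coverage: return (kind, masked_token) pairs for every pattern match."""
    hits = []
    for kind, pattern in PATTERNS.items():
        for match in pattern.finditer(text):
            token = match.group(0)
            # Keep only a short prefix so the report itself does not leak the secret.
            hits.append((kind, token[:6] + "..."))
    return hits


def scan_history(repo_path):
    """Depth: scan every patch reachable from any local ref, not just the current tree."""
    log = subprocess.run(
        ["git", "-C", repo_path, "log", "--all", "-p", "--no-color"],
        capture_output=True,
        encoding="utf-8",
        errors="replace",  # diffs may contain bytes that are not valid UTF-8
        check=True,
    ).stdout
    return find_secrets(log)
```

Calling `scan_history("path/to/repo")` on a local clone returns the masked matches found anywhere in that clone's history, including secrets that were committed and later removed from the working tree.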

Immediate Action Items

Wiz’s findings serve as a warning for enterprise technology executives, highlighting three immediate action items for managing both internal and third-party security risk:

- Security leaders must treat their employees as part of their company’s attack surface, creating a Version Control System (VCS) member policy.

- Internal secret scanning must evolve beyond basic repository checks, adopting the “Depth, Perimeter, and Coverage” mindset.

- This level of scrutiny must be extended to the entire AI supply chain, with CISOs probing their vendors' secrets management and vulnerability disclosure practices.

Conclusion

The central message for enterprises is that the tools and platforms defining the next generation of technology are being built at a pace that often outstrips security governance. As Wiz concludes, “For AI innovators, the message is clear: speed cannot compromise security”. For the enterprises that depend on that innovation, the same warning applies.

FAQs

- Q: What is the main issue with AI companies in terms of security?

A: Many AI companies overlook basic security hygiene practices, leading to leaked secrets such as API keys and sensitive credentials on platforms like GitHub.

- Q: Why are traditional security scanning methods insufficient?

A: They do not scan deeply enough, missing areas like full commit histories and deleted forks, and they do not cover the broader perimeter of contributors and new AI-related secret types.

- Q: What should companies do to address these security risks?

A: Implement a comprehensive scanning methodology that includes depth, perimeter, and coverage, and extend scrutiny to the entire AI supply chain, including employee practices and vendor security postures.