Introduction to Sora 2

OpenAI has announced the launch of Sora 2, its second-generation video-synthesis AI model. This new model can generate videos in various styles with synchronized dialogue and sound effects, a first for the company. Alongside Sora 2, OpenAI has also launched a new iOS social app that allows users to insert themselves into AI-generated videos through "cameos."

Features of Sora 2

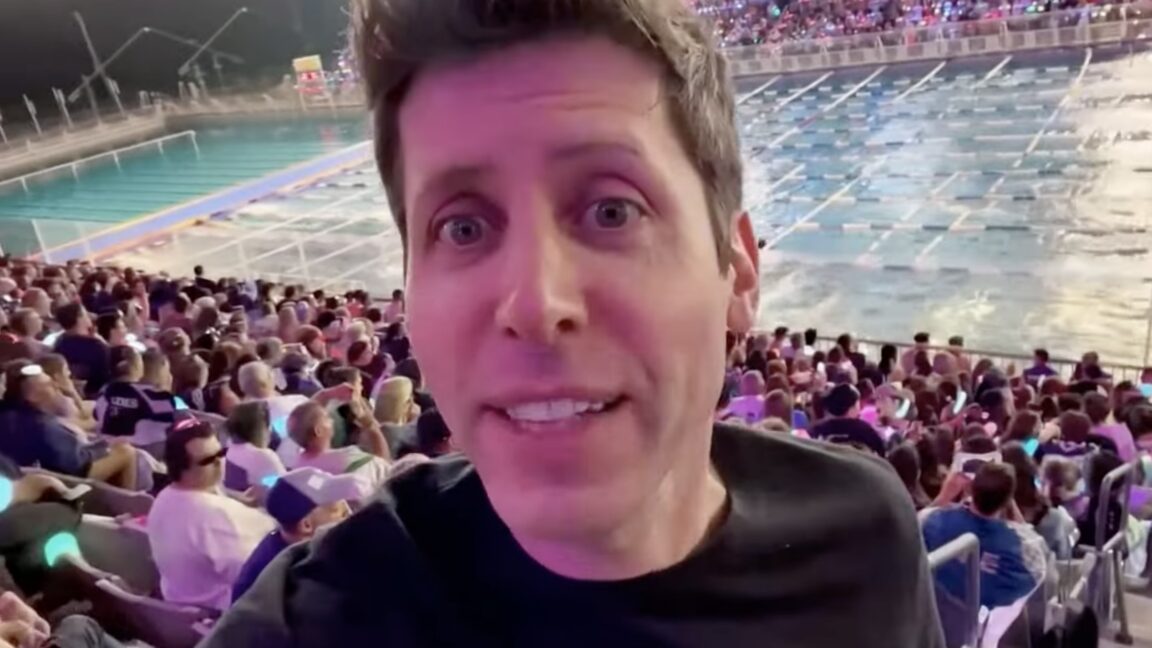

The new model can create sophisticated background soundscapes, speech, and sound effects with a high degree of realism. OpenAI showcased the model’s capabilities in an AI-generated video featuring a photorealistic version of OpenAI CEO Sam Altman. The video includes fantastical backdrops, such as a competitive ride-on duck race and a glowing mushroom garden, and demonstrates the model’s ability to generate synchronized audio and video.

Comparison to Other Models

Sora 2 is not the first video-synthesis model to generate synchronized audio and video. In May 2025, Google’s Veo 3 became the first model from a major AI lab to do so. More recently, Alibaba released Wan 2.5, an open-weights video model that can also generate audio. With Sora 2, OpenAI joins them, and the model also shows notable improvements in visual consistency over the company’s previous video model.

Visual Consistency and Physical Accuracy

The model can follow more complex instructions across multiple shots while maintaining coherence between them. Sora 2 also demonstrates improved physical accuracy: it can simulate complex movements such as Olympic gymnastics routines and triple axels while maintaining realistic physics. This is a significant improvement over the original Sora model, which struggled with similar video-generation tasks.

How Sora 2 Differs from Previous Models

Prior video models were often overoptimistic: they would morph objects and deform reality to satisfy a text prompt. For example, if a basketball player missed a shot, the ball might spontaneously teleport to the hoop. In Sora 2, if a basketball player misses a shot, the ball rebounds off the backboard, which reflects a more realistic treatment of physics.

Conclusion

Sora 2 brings synchronized audio and video generation to OpenAI’s lineup, along with improved visual consistency and physical accuracy over the original Sora. Although Google and Alibaba shipped audio-capable video models first, the launch, paired with a dedicated iOS social app, is a major step forward for OpenAI in video synthesis.

FAQs

Q: What is Sora 2?

A: Sora 2 is OpenAI’s second-generation video-synthesis AI model, which can generate videos in various styles with synchronized dialogue and sound effects.

Q: What are the key features of Sora 2?

A: The key features of Sora 2 include its ability to generate sophisticated background soundscapes, speech, and sound effects, as well as its improved visual consistency and physical accuracy.

Q: How does Sora 2 differ from previous video-synthesis models?

A: Compared with the original Sora, Sora 2 adds synchronized audio, follows complex instructions across multiple shots, and models physics more realistically. Synchronized audio is not unique to Sora 2, however: Google’s Veo 3 and Alibaba’s Wan 2.5 also generate it.

Q: What are the potential applications of Sora 2?

A: Potential applications include creating realistic video content, such as special effects, animations, and virtual reality experiences.

Q: Is Sora 2 available to the public?

A: Sora 2 is available through OpenAI’s new iOS social app, which allows users to insert themselves into AI-generated videos through "cameos."