Introduction to Robot Navigation

A robot searching for workers trapped in a partially collapsed mine shaft must rapidly generate a map of the scene and identify its location within that scene as it navigates the treacherous terrain. Researchers have recently started building powerful machine-learning models to perform this complex task using only images from the robot’s onboard cameras. However, even the best models can process only a few images at a time, which makes them impractical for applications where a robot must move quickly through a varied environment and process thousands of images.

The Challenge of Simultaneous Localization and Mapping

For years, researchers have grappled with an essential element of robotic navigation called simultaneous localization and mapping (SLAM). In SLAM, a robot builds a map of its environment while simultaneously estimating its own position and orientation within that map. Traditional optimization methods for this task tend to fail in challenging scenes or require the robot’s onboard cameras to be calibrated beforehand. To avoid these pitfalls, researchers have turned to training machine-learning models that learn the task from data.

A New Approach to Mapping

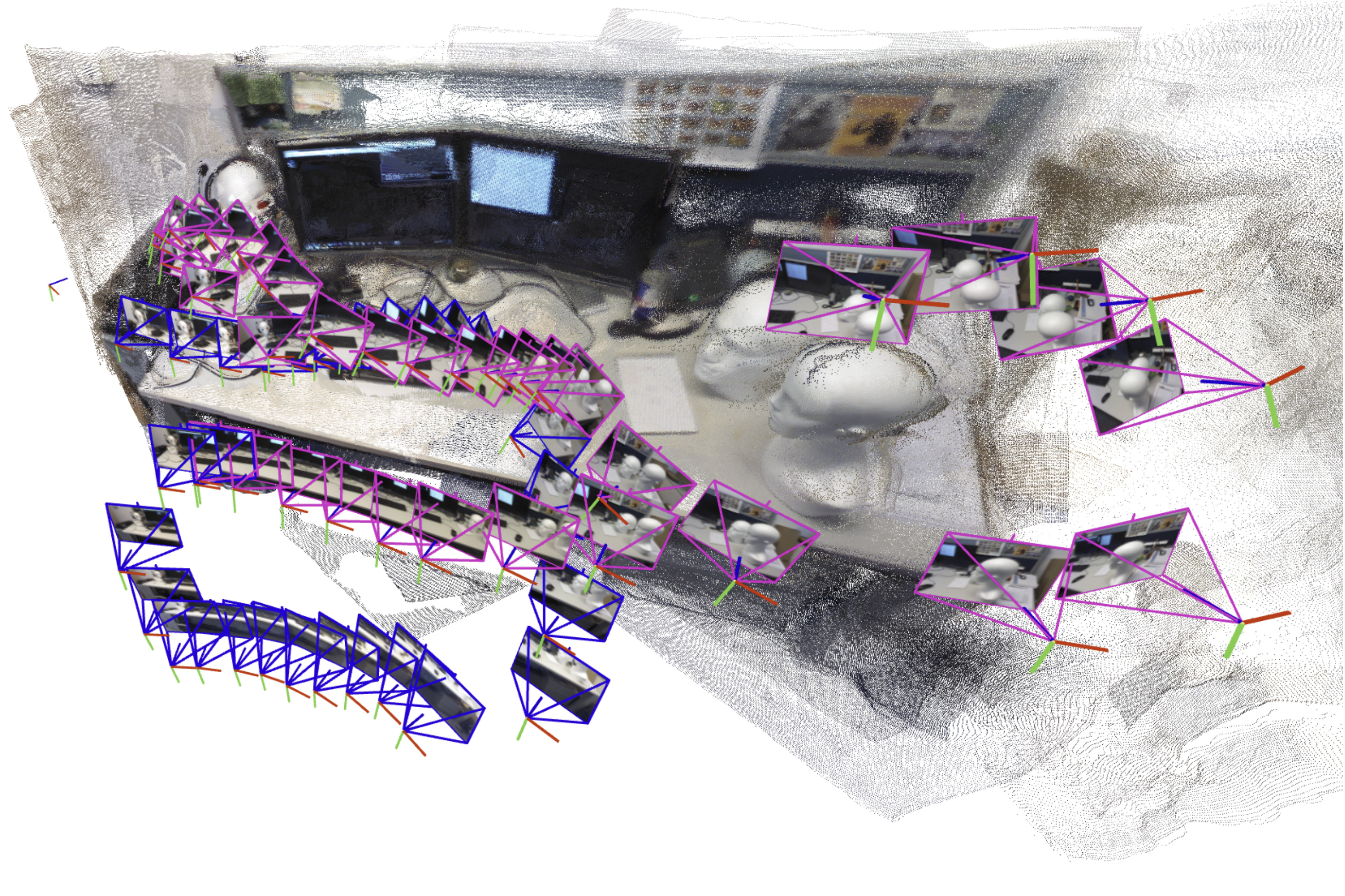

To overcome the problem of processing a large number of images, MIT researchers drew on ideas from both recent artificial intelligence vision models and classical computer vision to develop a new system. The system accurately generates 3D maps of complicated scenes, such as a crowded office corridor, in a matter of seconds. It incrementally creates and aligns smaller submaps of the scene, which it stitches together to reconstruct a full 3D map while estimating the robot’s position in real time.
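
The stitching step can be pictured with a minimal sketch, assuming each new submap comes with a 4x4 transform that maps its coordinates into the previous submap's frame (the `relative_transforms` input here is hypothetical). Composing these transforms expresses every submap in the first submap's frame, so all points can be merged into one map. The function names are illustrative only and are not the researchers' code.

```python
import numpy as np


def chain_to_global(relative_transforms):
    """Compose per-submap transforms into transforms relative to submap 0.

    relative_transforms[k - 1] is a hypothetical 4x4 matrix mapping points in
    submap k into the frame of submap k - 1.
    """
    global_transforms = [np.eye(4)]  # submap 0 defines the global frame
    for t_rel in relative_transforms:
        # T_{0,k} = T_{0,k-1} @ T_{k-1,k}
        global_transforms.append(global_transforms[-1] @ t_rel)
    return global_transforms


def stitch_submaps(submaps, relative_transforms):
    """Merge (N_k, 3) point arrays into one point cloud in submap 0's frame."""
    if len(relative_transforms) != len(submaps) - 1:
        raise ValueError("need one relative transform per submap after the first")
    merged = []
    for points, t_global in zip(submaps, chain_to_global(relative_transforms)):
        # Lift to homogeneous coordinates, transform, then project back to 3D.
        homog = np.hstack([points, np.ones((len(points), 1))]) @ t_global.T
        merged.append(homog[:, :3] / homog[:, 3:4])
    return np.vstack(merged)
```

Because each new submap only needs one transform to its predecessor, the global map can grow incrementally as images arrive, which matches the incremental behavior described above.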

How the System Works

Rather than building the entire map at once, the system generates smaller submaps of the scene and then "glues" them together into one overall 3D reconstruction. The model still processes only a few images at a time, but stitching the submaps together lets the system recreate much larger scenes quickly. However, the researchers found that errors in the way the machine-learning models process images introduce deformations into the submaps, which makes aligning them a more complex problem.
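
As a rough illustration of how a long image stream might be broken into groups small enough for the model, the sketch below splits a frame sequence into fixed-size submaps. The `submap_size` and `overlap` parameters are hypothetical; sharing frames between consecutive submaps is an assumption made here so that neighboring submaps have common content to align on. The source does not specify how frames are grouped.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def make_submaps(frames: Sequence[T], submap_size: int, overlap: int) -> List[List[T]]:
    """Split frames into consecutive groups that share `overlap` frames."""
    if overlap < 0 or submap_size <= overlap:
        raise ValueError("require 0 <= overlap < submap_size")
    step = submap_size - overlap  # how far each new submap advances
    submaps: List[List[T]] = []
    start = 0
    while start < len(frames):
        submaps.append(list(frames[start:start + submap_size]))
        if start + submap_size >= len(frames):
            break  # this group already reaches the last frame
        start += step
    return submaps
```

For example, `make_submaps(list(range(10)), 4, 1)` yields `[[0, 1, 2, 3], [3, 4, 5, 6], [6, 7, 8, 9]]`: each group is small, and consecutive groups share one frame.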

Overcoming the Alignment Problem

To solve this problem, the researchers developed a more flexible mathematical technique that can represent all of the deformations in these submaps. By applying such transformations to each submap, the method can align the submaps in a way that accounts for these deformations. From the input images, the system outputs a 3D reconstruction of the scene along with estimates of the camera locations, which the robot can use to localize itself in the space.
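
One concrete transformation more flexible than a rotation plus translation is a general 4x4 projective transformation (15 degrees of freedom), which can also express shearing, stretching, and perspective-like distortion. The sketch below estimates such a transform from corresponding 3D points in two overlapping submaps using the direct linear transform (DLT) with NumPy. That the researchers use exactly this transform class and this estimator is an assumption for illustration; the source says only that their technique can represent all the deformations in the submaps. The function names are hypothetical.

```python
import numpy as np


def to_homogeneous(points):
    """Append a column of ones: (N, 3) -> (N, 4)."""
    return np.hstack([points, np.ones((len(points), 1))])


def apply_projective_3d(h, points):
    """Map (N, 3) points through a 4x4 projective transform h."""
    mapped = to_homogeneous(points) @ h.T
    return mapped[:, :3] / mapped[:, 3:4]  # divide out the homogeneous scale


def estimate_projective_3d(src, dst):
    """Estimate h with dst ~ h @ src (up to scale) via the direct linear transform.

    src, dst: (N, 3) arrays of corresponding points, e.g. points that two
    overlapping submaps share. Needs N >= 5 points in general position,
    since h has 15 degrees of freedom and each pair gives 3 equations.
    """
    if len(src) != len(dst) or len(src) < 5:
        raise ValueError("need at least 5 matching point pairs")
    zero = np.zeros(4)
    rows = []
    for x_h, (x, y, z) in zip(to_homogeneous(src), dst):
        # With h_i the i-th row of h: h_i . X - coord_i * (h_4 . X) = 0.
        rows.append(np.concatenate([x_h, zero, zero, -x * x_h]))
        rows.append(np.concatenate([zero, x_h, zero, -y * x_h]))
        rows.append(np.concatenate([zero, zero, x_h, -z * x_h]))
    # The least-squares solution is the right singular vector belonging
    # to the smallest singular value.
    _, _, vt = np.linalg.svd(np.array(rows))
    h = vt[-1].reshape(4, 4)
    return h / h[3, 3]  # fix the arbitrary scale (assumes h[3, 3] != 0)
```

A rigid or similarity transform could not undo a stretched or bent submap, whereas the projective model can. In practice one would normalize the points first and wrap the estimate in a robust scheme such as RANSAC to cope with noisy correspondences; both are omitted to keep the sketch short.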

Applications and Future Work

Beyond helping search-and-rescue robots navigate, this method could be used to build extended reality applications for wearable devices such as VR headsets, or to help industrial robots quickly find and move goods inside a warehouse. The researchers want to make their method more reliable for especially complicated scenes and to work toward deploying it on real robots in challenging settings.

Conclusion

The new system developed by MIT researchers could make mapping and localization much faster for robots navigating large scenes. By combining machine-learning models with classical computer vision techniques, the system can generate accurate 3D maps of complex scenes in a matter of seconds. This could benefit applications ranging from search-and-rescue missions to industrial robotics and extended reality.

FAQs

Q: What is simultaneous localization and mapping (SLAM)?

A: SLAM is a technique robots use to build a map of their environment while simultaneously estimating their own position within it.

Q: What is the limitation of current machine-learning models for SLAM?

A: Current machine-learning models can only process a few images at a time, making them infeasible for applications where a robot needs to move quickly through a varied environment while processing thousands of images.

Q: How does the new system developed by MIT researchers overcome this limitation?

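A: Instead of building the whole map at once, the system generates smaller submaps from a few images each and then "glues" them together into one overall 3D reconstruction. This lets it handle large numbers of images quickly, even though the underlying model still processes only a few images at a time.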

Q: What are the potential applications of this technology?

A: The technology could be used in search-and-rescue missions, industrial robotics, extended reality applications, and other fields where rapid mapping and localization are crucial.