Introduction to AI’s New Frontier

Imagine asking your phone, “What’s in my fridge and what can I cook?” You snap a photo, and within seconds, the AI not only identifies eggs, broccoli, and cheese but also suggests a delicious frittata recipe, complete with cooking instructions. Or picture yourself in a foreign country, pointing your camera at a street sign and instantly hearing a translation in your native language. Both scenarios depend on AI that can link what it sees with language, which is what multimodal and vision-language models are built to do.

What are Multimodal and Vision-Language Models?

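Multimodal models are AI systems that can process and interpret more than one type of data, such as text, images, and audio, within a single model. Vision-Language Models (VLMs) are a specific type of multimodal model that specializes in understanding visual and textual information together. Because they connect what they see with language, they can handle tasks that text-only or image-only systems cannot, such as answering questions about a photo, writing a caption for an image, or reading and translating the text in a picture.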

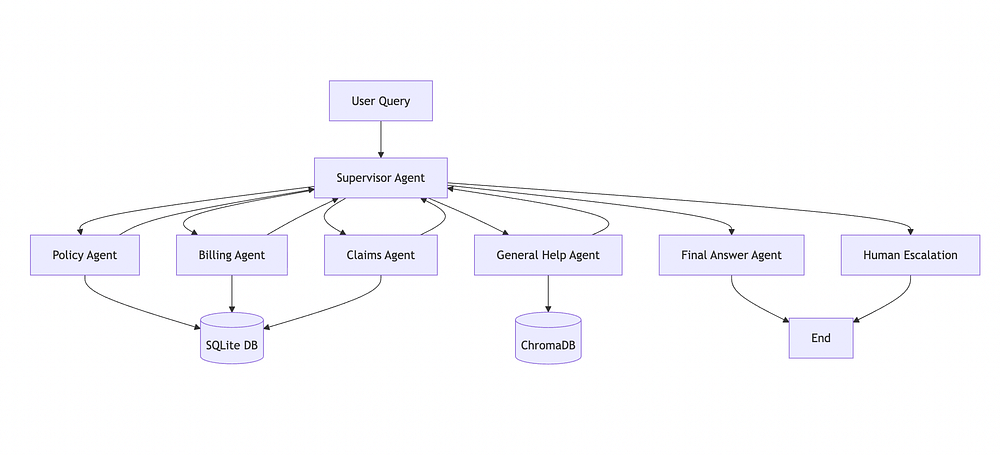

How Do These Models Work?

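The architecture and training processes behind these models are complex and evolving quickly, but many VLMs follow a common pattern. A vision encoder, often a Vision Transformer (ViT), splits an image into small patches and turns each patch into a numeric vector, or embedding. A projection layer maps these image embeddings into the same space as the word embeddings of a language model, which then processes image and text tokens together as one sequence. By training on large collections of paired images and text, the model learns the relationships between the two kinds of data, which lets it classify objects, answer questions, and generate text based on visual input.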
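A common VLM design converts image patches into embeddings, projects them into a language model's embedding space, and feeds them to the model alongside the text tokens. The minimal Python sketch below illustrates that flow under loudly stated assumptions: the image is a tiny grayscale grid, the "vision encoder" is only a linear patch embedding (a real Vision Transformer adds many attention layers), the weights are random rather than trained, and the names `split_into_patches`, `build_multimodal_sequence`, the constants, and the toy vocabulary are all hypothetical.

```python
import random

PATCH_SIZE = 2  # assumed toy patch size; real ViTs commonly use 14x14 or 16x16 pixel patches
EMBED_DIM = 8   # hypothetical embedding size; real models use hundreds or thousands


def split_into_patches(image, patch_size):
    """Split a 2D grayscale image (a list of pixel rows) into flattened square patches.

    Assumes the height and width are multiples of patch_size.
    """
    height, width = len(image), len(image[0])
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patches.append([image[r][c]
                            for r in range(top, top + patch_size)
                            for c in range(left, left + patch_size)])
    return patches


def linear(vector, weights):
    """Multiply a vector by a weight matrix stored as one row per output dimension."""
    return [sum(w * x for w, x in zip(row, vector)) for row in weights]


def random_matrix(rows, cols, rng):
    """Small random weights standing in for trained parameters (hypothetical)."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
patch_encoder = random_matrix(EMBED_DIM, PATCH_SIZE * PATCH_SIZE, rng)  # toy vision encoder
projection = random_matrix(EMBED_DIM, EMBED_DIM, rng)  # maps image features into the text embedding space
vocab = {"what": 0, "is": 1, "in": 2, "this": 3, "image": 4, "?": 5}  # toy vocabulary
text_embeddings = random_matrix(len(vocab), EMBED_DIM, rng)  # one embedding row per word


def build_multimodal_sequence(image, prompt):
    """Return image tokens followed by text tokens, all of length EMBED_DIM."""
    # 1. Vision encoder: each flattened patch becomes a feature vector.
    image_features = [linear(p, patch_encoder) for p in split_into_patches(image, PATCH_SIZE)]
    # 2. Projection: map image features into the language model's embedding space.
    image_tokens = [linear(f, projection) for f in image_features]
    # 3. Text: a whitespace "tokenizer" plus a lookup table (real models use subword tokenizers).
    text_tokens = [text_embeddings[vocab[word]] for word in prompt.lower().split()]
    # 4. A language model would then process this combined sequence.
    return image_tokens + text_tokens


image = [
    [0.0, 0.1, 0.2, 0.3],
    [0.4, 0.5, 0.6, 0.7],
    [0.8, 0.9, 1.0, 0.0],
    [0.1, 0.2, 0.3, 0.4],
]
sequence = build_multimodal_sequence(image, "What is in this image ?")
print(len(sequence), len(sequence[0]))  # 10 8
```

With a 4-by-4 image and a patch size of 2, the image yields four image tokens, which are placed before the six prompt tokens to form a ten-token sequence. Putting the image tokens first is one common choice; the ordering in this sketch is an assumption, not a rule.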

Applications of Multimodal and Vision-Language Models

These models have a wide range of applications in various industries, including:

- Healthcare: Analyzing medical images and patient data to help clinicians diagnose diseases

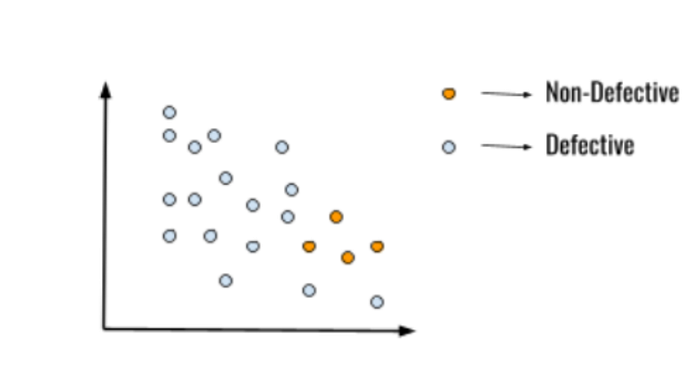

- Manufacturing: Inspecting products and detecting defects using computer vision

- E-commerce: Enabling customers to search for products using images and natural language
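Image-and-text search like the e-commerce example is often built on a shared embedding space, as in contrastive models such as CLIP: an image encoder and a text encoder map product photos and customer queries to vectors, and results are ranked by cosine similarity. The Python sketch below assumes those embeddings have already been computed; the three-dimensional vectors, product names, and the `rank_products` helper are made up for illustration, whereas real models produce vectors with hundreds of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means they point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_products(query_embedding, catalog):
    """Return product names ordered from most to least similar to the query (hypothetical helper)."""
    scored = [(cosine_similarity(query_embedding, emb), name) for name, emb in catalog.items()]
    scored.sort(reverse=True)
    return [name for _, name in scored]


# Hypothetical image embeddings for product photos (made-up values).
catalog = {
    "red running shoe": [0.9, 0.1, 0.0],
    "blue denim jacket": [0.1, 0.9, 0.2],
    "leather hiking boot": [0.7, 0.2, 0.4],
}
# Hypothetical text embedding for a query such as "sneakers for jogging".
query = [0.85, 0.15, 0.05]

print(rank_products(query, catalog))
# ['red running shoe', 'leather hiking boot', 'blue denim jacket']
```

Because text queries and product images live in the same space in this kind of model, the same ranking function works whether the customer types a description or uploads a photo.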

Challenges and Ethical Considerations

While multimodal and vision-language models have the potential to revolutionize many industries, there are also critical challenges and ethical considerations that need to be addressed. These include:

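- Ensuring the models are accurate and reliable, for example that they do not describe objects that are not actually in an image

- Protecting user data and privacy, especially when people share personal photos or medical images

- Addressing biases in training data that can lead to unfair or discriminatory outputs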
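One simple way to check for the fairness concerns above is to measure a model's accuracy separately for each group of people in an evaluation set and compare the results. The Python sketch below assumes you already have the model's predictions and the correct labels; the group names, labels, and results are hypothetical, and a large gap between groups is a signal to investigate rather than a complete fairness audit.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, predicted_label, true_label) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def max_accuracy_gap(records):
    """Difference between the best- and worst-served groups."""
    scores = accuracy_by_group(records)
    return max(scores.values()) - min(scores.values())


# Hypothetical results from an image classifier that labels occupations in photos.
results = [
    ("group_a", "doctor", "doctor"),
    ("group_a", "nurse", "nurse"),
    ("group_a", "doctor", "doctor"),
    ("group_a", "nurse", "doctor"),
    ("group_b", "nurse", "doctor"),
    ("group_b", "nurse", "nurse"),
    ("group_b", "nurse", "doctor"),
    ("group_b", "doctor", "doctor"),
]

print(accuracy_by_group(results))  # {'group_a': 0.75, 'group_b': 0.5}
print(max_accuracy_gap(results))   # 0.25
```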

Future Trends and Developments

Research on multimodal and vision-language models is moving quickly, and new models and techniques appear regularly. As these models become more capable, we can expect more applications like the ones described at the start of this article, from cooking assistants to real-time translation.

Conclusion

Multimodal and vision-language models are transforming AI by enabling machines to connect what they see and hear with language. As these models continue to improve, we can expect them to play a growing role in fields such as healthcare, manufacturing, and e-commerce, as well as in everyday tools. However, it is crucial to address the challenges and ethical considerations associated with these technologies so that they are developed and deployed responsibly and benefit society.

FAQs

- Q: What are multimodal models?

A: Multimodal models are AI systems that can process and interpret multiple types of data, such as text, audio, and visual information.

- Q: What are vision-language models?

A: Vision-language models are a specific type of multimodal model that specializes in understanding both visual and textual information.

- Q: What are some applications of multimodal and vision-language models?

A: These models have applications in healthcare, manufacturing, e-commerce, and many other industries, enabling tasks such as image analysis, natural language processing, and more.

- Q: What are some challenges associated with multimodal and vision-language models?

A: Challenges include ensuring accuracy and fairness, protecting user data and privacy, and addressing potential biases and discrimination.