Introduction to Vector Search

As AI tools continue to evolve, many applications we use daily, such as recommendation engines, image search, and chat-based assistants, rely on vector search. The technique represents items as high-dimensional numeric vectors (embeddings) and quickly finds the ones most similar to a query, whether those are related documents, visually similar images, or contextually relevant responses. But here’s the catch: most of this happens in the cloud, because storing and querying high-dimensional vector data is computationally expensive and storage-heavy.
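
At its core, vector search is nearest-neighbor lookup: each item is stored as a vector, and a query returns the items whose vectors are most similar to the query's. A minimal exact (brute-force) sketch follows. It assumes cosine similarity as the similarity measure and uses made-up 3-dimensional vectors in place of real embeddings; `cosine` and `top_k` are illustrative helpers, not part of any library.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query, vectors, k=2):
    """Exact search: score every stored vector against the query, keep the k best."""
    scored = sorted(
        ((cosine(query, v), key) for key, v in vectors.items()),
        reverse=True,
    )
    return [key for _, key in scored[:k]]


# Toy 3-dimensional "embeddings"; real ones have hundreds of dimensions.
docs = {
    "cats": [0.9, 0.1, 0.0],
    "kittens": [0.85, 0.15, 0.05],
    "stock market": [0.0, 0.2, 0.95],
}
print(top_k([1.0, 0.1, 0.0], docs, k=2))  # ['cats', 'kittens']
```

Brute force compares the query against every stored vector, so its cost grows linearly with the size of the collection; approximate indexes such as HNSW exist to avoid that full scan.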

The Challenges of Cloud-Based Vector Search

This poses two major problems. First, privacy: cloud-based AI search often requires sending personal data to remote servers. Second, accessibility: people with limited connectivity, or those working on edge devices, can’t take full advantage of these powerful tools. Wouldn’t it be useful if your phone or laptop could do this locally, without sending data elsewhere?

The Solution: LEANN

These are the challenges tackled in a new paper, “LEANN: A Low-Storage Vector Index” by Wang et al. (2025). The authors present a method that makes fast, accurate, and memory-efficient vector search possible on small, resource-constrained devices, without relying on cloud infrastructure.

How LEANN Works

LEANN tackles the storage problem with two key innovations:

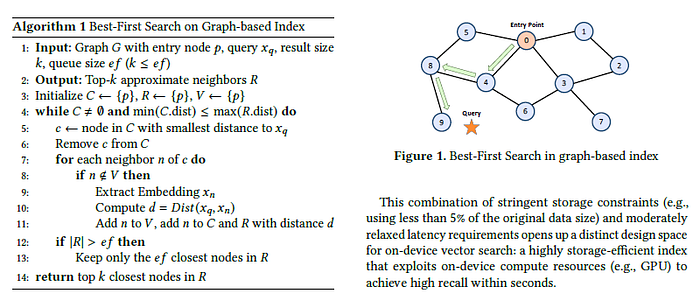

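- Graph Pruning: Instead of storing the full HNSW (Hierarchical Navigable Small World) graph, a widely used index for approximate nearest neighbor search, LEANN trims it down to a much sparser version that keeps enough edges for the search to navigate effectively.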

- On-the-Fly Vector Reconstruction: LEANN doesn’t store all the original data vectors. Instead, it stores a small seed set and reconstructs the needed vectors at query time using a lightweight model.
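
The two ideas can be illustrated with a toy sketch. It is not LEANN's algorithm: it uses a flat k-nearest-neighbor graph instead of a multi-layer HNSW graph, prunes with a simple cap on each node's out-degree, and relies on a hypothetical `embed` function that regenerates a vector deterministically from an item ID, standing in for the model that reconstructs vectors at query time. After build time only the pruned adjacency lists are kept, and the search recomputes just the vectors it touches.

```python
import math
import random

DIM = 8            # toy dimensionality; real embeddings have hundreds of dimensions
N = 200            # number of indexed items
DENSE_DEGREE = 16  # neighbors per node before pruning
MAX_DEGREE = 6     # neighbors per node kept after pruning


def embed(item_id):
    """Hypothetical stand-in for an embedding model: derives a deterministic
    vector from the item ID, so a vector can be recomputed instead of stored."""
    rng = random.Random(item_id)
    return [rng.uniform(-1.0, 1.0) for _ in range(DIM)]


def prune(graph, vectors, max_degree):
    """Keep only each node's max_degree closest neighbors.
    This simple degree cap is an illustration, not LEANN's pruning rule."""
    return {
        node: sorted(nbrs, key=lambda n: math.dist(vectors[node], vectors[n]))[:max_degree]
        for node, nbrs in graph.items()
    }


def greedy_search(graph, query, entry):
    """Greedy walk over the graph, recomputing vectors on demand.
    Returns (closest node found, its distance, number of vectors recomputed)."""
    cache = {}  # lives only for this query; nothing is persisted

    def vec(n):
        if n not in cache:
            cache[n] = embed(n)
        return cache[n]

    current, best = entry, math.dist(query, vec(entry))
    while True:
        # Step to the neighbor closest to the query; stop when none is closer.
        nxt = min(graph[current], key=lambda n: math.dist(query, vec(n)))
        d = math.dist(query, vec(nxt))
        if d >= best:
            return current, best, len(cache)
        current, best = nxt, d


# Build time: vectors exist only long enough to construct and prune the graph.
vectors = {i: embed(i) for i in range(N)}
dense_graph = {
    i: sorted((j for j in range(N) if j != i),
              key=lambda j: math.dist(vectors[i], vectors[j]))[:DENSE_DEGREE]
    for i in range(N)
}
pruned = prune(dense_graph, vectors, MAX_DEGREE)
del vectors, dense_graph  # only the pruned adjacency lists are kept

query = embed(N + 1)  # an item that is not in the index
node, distance, recomputed = greedy_search(pruned, query, entry=0)
print(f"closest node found: {node}; recomputed {recomputed} of {N} vectors")
```

Because the walk only evaluates neighbors of the nodes it visits, it usually recomputes a small fraction of the N vectors, trading extra computation per query for not storing embeddings. A single greedy walk can also stop at a local minimum, which is one reason real HNSW searches keep a list of candidates (sized by the `ef` parameter) rather than a single current node.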

The Benefits of LEANN

Together, these strategies reduce storage by up to 45x compared to a standard HNSW implementation, without significant loss in accuracy or speed, which makes them a game-changer for local AI. The authors evaluate LEANN on several real-world datasets and show that it performs comparably to full HNSW in both latency and recall while using only a fraction of the storage.

Why LEANN Matters

To me, this paper is interesting because it offers a practical way to bring powerful AI capabilities to small devices and offline environments. Here are a few concrete examples:

- On-device document search: Imagine being able to ask your phone to “find that PDF I read last week about neural networks” and get a meaningful result, even if you’re on a plane or in a remote location.

- Private photo retrieval: Instead of uploading your photos to the cloud to search by visual similarity, your device could handle that locally.

- Assistive tools for healthcare or education: In regions with limited internet access, lightweight vector search could power diagnostic tools or personalized learning without needing external servers.

A Simple Demo of Low-Storage Vector Search

I did not find an open-source implementation of LEANN to demonstrate for this post, so I put together a simpler demo instead: it uses hnswlib to build a vector index, simulates reduced storage with a smaller seed set, and estimates the memory savings.
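
A minimal sketch of the storage side of such a demo follows. To keep it runnable with only the Python standard library, it replaces the hnswlib index with a plain list of vectors and assumes 10,000 float32 embeddings of dimension 128. `embed`, `nbytes`, and `get_vector` are hypothetical helpers, and `embed` simply regenerates a vector from its ID rather than running a real model.

```python
import random
from array import array

N = 10_000            # number of vectors, as in the demo description
DIM = 128             # assumed embedding dimension
SEED_FRACTION = 0.05  # keep 5% of vectors


def embed(item_id):
    """Hypothetical stand-in for the model that reconstructs a vector on demand:
    it regenerates the same float32 vector from the item ID every time."""
    rng = random.Random(item_id)
    return array("f", (rng.gauss(0.0, 1.0) for _ in range(DIM)))


def nbytes(vectors):
    """Raw storage of float32 arrays, ignoring Python object overhead."""
    return sum(len(v) * v.itemsize for v in vectors)


# Full storage: every embedding is kept.
full = [embed(i) for i in range(N)]
full_bytes = nbytes(full)

# Reduced storage: keep every 20th vector as the seed set (5% of N).
step = round(1 / SEED_FRACTION)
seeds = {i: full[i] for i in range(0, N, step)}
seed_bytes = nbytes(seeds.values())


def get_vector(i):
    """Return a stored seed vector, or reconstruct one that was not kept."""
    return seeds[i] if i in seeds else embed(i)


print(f"full: {full_bytes / 1e6:.2f} MB, seed set: {seed_bytes / 1e6:.2f} MB, "
      f"{full_bytes / seed_bytes:.0f}x smaller")
```

The byte counts cover only raw float32 data; an hnswlib index would also store graph links and labels alongside the vectors.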

What the Demo Shows

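- A full embedding matrix for 10,000 vectors takes roughly 5–10 MB, depending on dimension and dtype: at float32 (4 bytes per value), 128 dimensions come to 5.12 MB and 256 dimensions to 10.24 MB.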

- Storing only 5% of the vectors and reconstructing the rest on demand cuts embedding storage by about 20x, at the cost of extra computation whenever a missing vector is needed.

- The HNSW index itself is also compact, but pruning its graph (not shown here) could yield further savings.
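
The savings from pruning can be estimated the same way, by counting stored neighbor IDs instead of vector components. The sketch below assumes 4-byte IDs and hypothetical average degrees of 32 before and 8 after pruning, and it ignores HNSW's upper layers and per-node headers; `adjacency_bytes` is an illustrative helper.

```python
def adjacency_bytes(num_nodes, degree, id_bytes=4):
    """Bytes for a flat adjacency list: one neighbor ID per stored edge.
    Assumes 4-byte IDs; ignores HNSW's upper layers and per-node headers."""
    return num_nodes * degree * id_bytes


before = adjacency_bytes(10_000, 32)  # hypothetical degree before pruning
after = adjacency_bytes(10_000, 8)    # hypothetical degree after pruning
print(f"{before / 1e6:.2f} MB -> {after / 1e6:.2f} MB")  # 1.28 MB -> 0.32 MB
```

Link storage scales linearly with the average degree, so the real question for any pruning scheme is how few edges it can keep while search quality holds up.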

Conclusion

LEANN shows that you don’t need to choose between efficiency and performance when doing vector search. With smart algorithmic design, it’s possible to build AI systems that are both capable and accessible, running directly on the devices we use every day. This raises an open question: how will lightweight, local vector search change the design of future AI applications? Will more systems shift to offline-first models, or will cloud infrastructure remain dominant?

FAQs

- What is vector search?: Vector search represents items such as documents or images as numeric vectors (embeddings) and finds the stored vectors most similar to a query, which lets machines quickly retrieve related documents, similar images, or contextually relevant responses.

- What is HNSW?: HNSW, or Hierarchical Navigable Small World, is a popular graph-based approximate nearest neighbor (ANN) algorithm used in vector search.

- How does LEANN reduce storage?: By combining graph pruning with on-the-fly vector reconstruction, LEANN reduces storage by up to 45x compared to a standard HNSW implementation.

- What are the benefits of LEANN?: The benefits of LEANN include fast, accurate, and memory-efficient vector search on small devices, improved privacy, and increased accessibility.