Introduction to AI Architectures

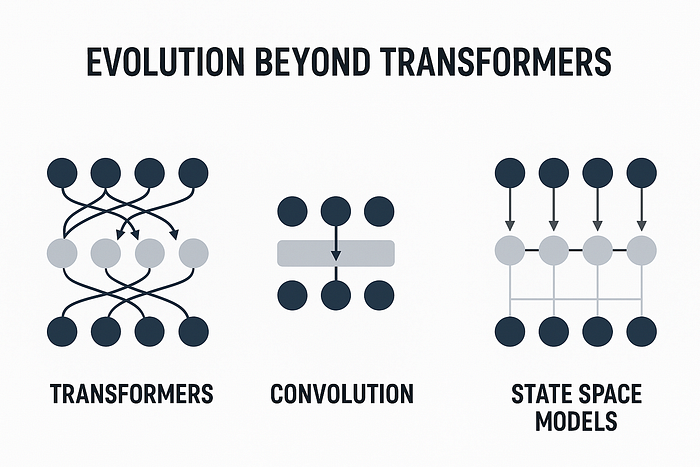

Artificial Intelligence (AI) has been reshaped by Transformers, the architecture that powers most modern AI models, including ChatGPT. Since their introduction in 2017, Transformers have dominated the AI landscape, enabling machines to process human language far more effectively than earlier approaches. However, like any technology, Transformers have limitations, particularly when it comes to processing long documents.

The Limitations of Transformers

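Transformers rely on self-attention, which compares every token in the input with every other token. In standard attention, the cost of that comparison grows quadratically with sequence length, so doubling the length of a document roughly quadruples the compute and memory spent on attention. This makes long documents expensive to process and has led researchers to explore new AI architectures that aim to deliver similar intelligence with improved efficiency.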
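To make the cost of self-attention concrete, here is a minimal pure-Python sketch of single-head scaled dot-product attention. It is a simplified illustration rather than how production models are built: it omits learned projections, multiple heads, and batching. The function name `self_attention` and the `score_count` value it returns are assumptions made for this example.

```python
import math


def self_attention(queries, keys, values):
    """Single-head scaled dot-product attention over lists of float vectors.

    Returns the output vectors and the number of query-key scores computed.
    """
    dim = len(queries[0])
    outputs = []
    score_count = 0
    for q in queries:
        # One score per (query, key) pair: n scores per token, n * n overall.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim) for k in keys
        ]
        score_count += len(scores)

        # Numerically stable softmax turns scores into weights that sum to 1.
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]

        # Each output is a weighted average of all value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs, score_count


# Toy "document" of 3 tokens, then the same document doubled to 6 tokens.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
_, short_count = self_attention(tokens, tokens, tokens)
_, long_count = self_attention(tokens * 2, tokens * 2, tokens * 2)
print(short_count, long_count)  # 9 36
```

Doubling the number of tokens from 3 to 6 raises the number of scores from 9 to 36, which is the quadratic growth that makes long inputs costly.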

Next-Generation AI Architectures

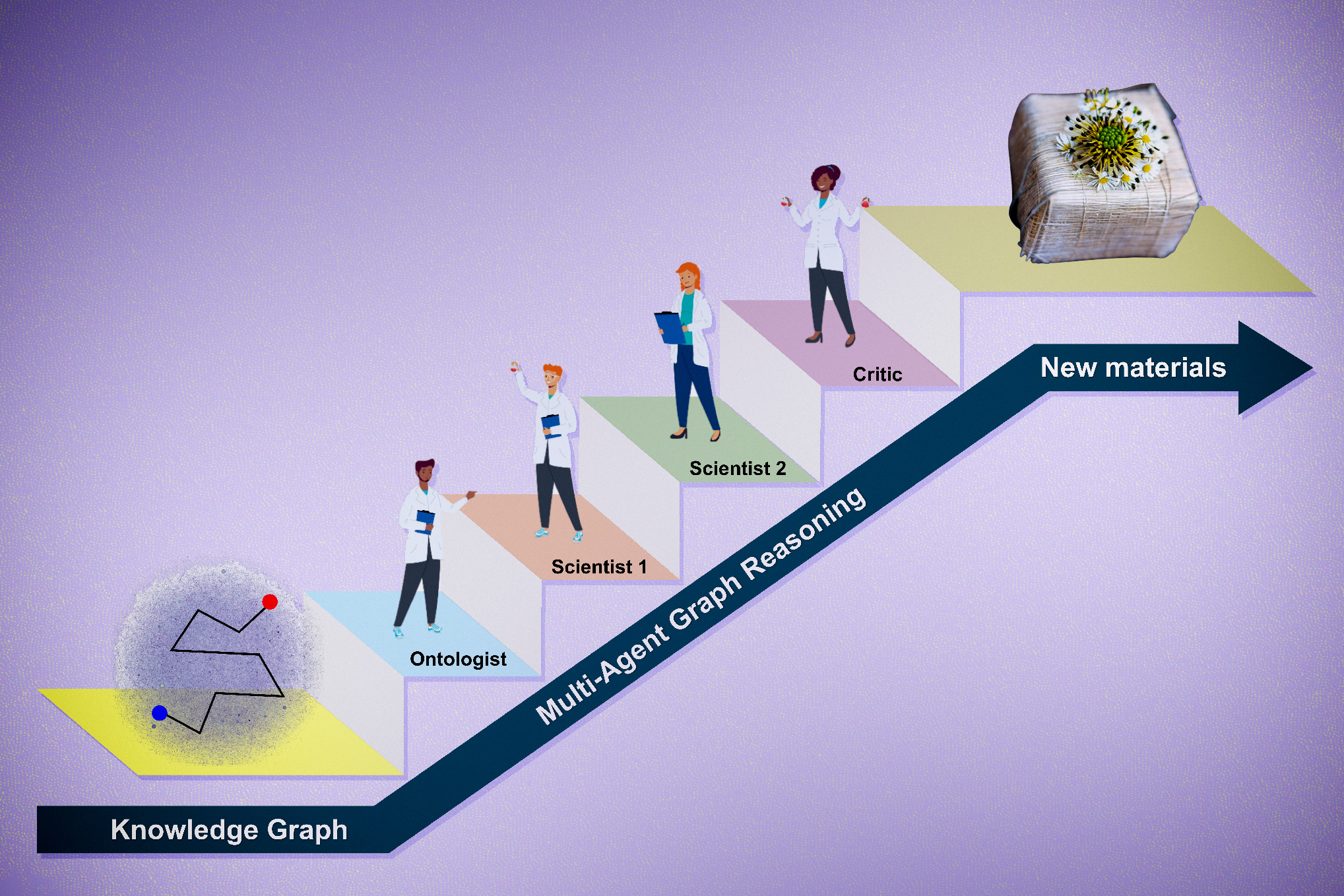

Several next-generation AI architectures have been proposed, including Mamba, Hyena, and RWKV. These architectures promise to overcome the limitations of Transformers and provide more efficient processing of long documents. Rather than introducing new attention mechanisms, they replace or reformulate self-attention with operations whose cost grows linearly or near-linearly with sequence length, such as state space models, long convolutions, and recurrences.

Underlying Principles and Applications

These new architectures build on established ideas in sequence modeling, including recurrent neural networks, convolutions, and state space models. They have the potential to be applied in a wide range of areas, including natural language processing, computer vision, and speech recognition. For instance, Mamba replaces attention with a selective state space model, which carries a fixed-size state through the sequence and lets it process long documents more efficiently. Hyena replaces attention with long convolutions combined with gating, and RWKV is designed to train in parallel like a Transformer while running inference step by step like a recurrent neural network.
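As a rough illustration of why recurrent and state space style models scale well to long inputs, the sketch below runs a simple linear recurrence over a sequence. It is a toy under stated assumptions, not the Mamba, Hyena, or RWKV algorithm: the state is a single number, the parameters `decay`, `in_gain`, and `out_gain` are fixed hypothetical values, and real models use learned, higher-dimensional (and in Mamba's case input-dependent) parameters.

```python
def linear_recurrence(inputs, decay=0.9, in_gain=1.0, out_gain=1.0):
    """Process a sequence one step at a time with a fixed-size state.

    Each step updates the state from the previous state and the current
    input, so total work grows linearly with the sequence length and the
    memory needed for the state does not grow at all.
    """
    state = 0.0
    outputs = []
    for x in inputs:
        # The state is a decayed summary of everything seen so far.
        state = decay * state + in_gain * x
        outputs.append(out_gain * state)
    return outputs


# A single impulse followed by zeros shows the state fading over time.
print(linear_recurrence([1.0, 0.0, 0.0], decay=0.5))  # [1.0, 0.5, 0.25]
```

The trade-off is that everything the model remembers about earlier tokens must fit in the fixed-size state, whereas attention can look back at every earlier token directly.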

Broader Implications for AI Development

The development of these new architectures has significant implications for the field of AI. As researchers continue to push the boundaries of what is possible, we can expect to see more hybrid models that combine the strengths of different approaches, for example pairing attention with more efficient sequence layers. This could enable AI systems that handle much longer inputs and more complex tasks at lower cost.

Conclusion

In conclusion, the AI landscape is evolving rapidly, with new architectures emerging that promise to deliver more efficient and accurate processing of complex tasks. As researchers continue to explore the possibilities of AI, we can expect to see significant advances in the field, leading to more sophisticated and capable AI systems.

FAQs

What are Transformers and how do they work?

Transformers are a type of AI architecture that relies on self-attention mechanisms to process input sequences. They are widely used in natural language processing and have achieved state-of-the-art results in many tasks.

What are the limitations of Transformers?

The main limitation of Transformers is the cost of self-attention, which grows quadratically with input length and makes long documents expensive to process.

What are the next-generation AI architectures?

Next-generation AI architectures include Mamba, Hyena, and RWKV, which promise to overcome the limitations of Transformers and provide more efficient processing of long documents.

What are the potential applications of these new architectures?

The potential applications of these new architectures include natural language processing, computer vision, and speech recognition, among others.

How will the development of these new architectures impact the field of AI?

The development of these new architectures will enable the creation of more sophisticated AI systems that can tackle complex tasks and provide more accurate results, leading to significant advances in the field of AI.