Introduction to AI Content on YouTube

AI content has become increasingly prevalent on the Internet over the past few years. At first, it was easy to identify as fake because of telltale flaws such as mutated hands. However, as the technology has advanced, synthetic images and videos have become realistic enough that they are difficult to distinguish from real content. Google, having played a significant role in the development of this technology, now bears some responsibility for regulating AI video content on YouTube.

The Problem of AI Content

Google’s AI models have contributed to the rise of AI content, some of which is used to spread misinformation and harass individuals. Creators and influencers are concerned that their brands could be damaged by AI videos that depict them saying or doing things they never actually did. Even lawmakers are worried about the potential consequences of this technology. Despite these concerns, Google is unlikely to ban AI content from YouTube, given its significant investment in the technology.

Likeness Detection System

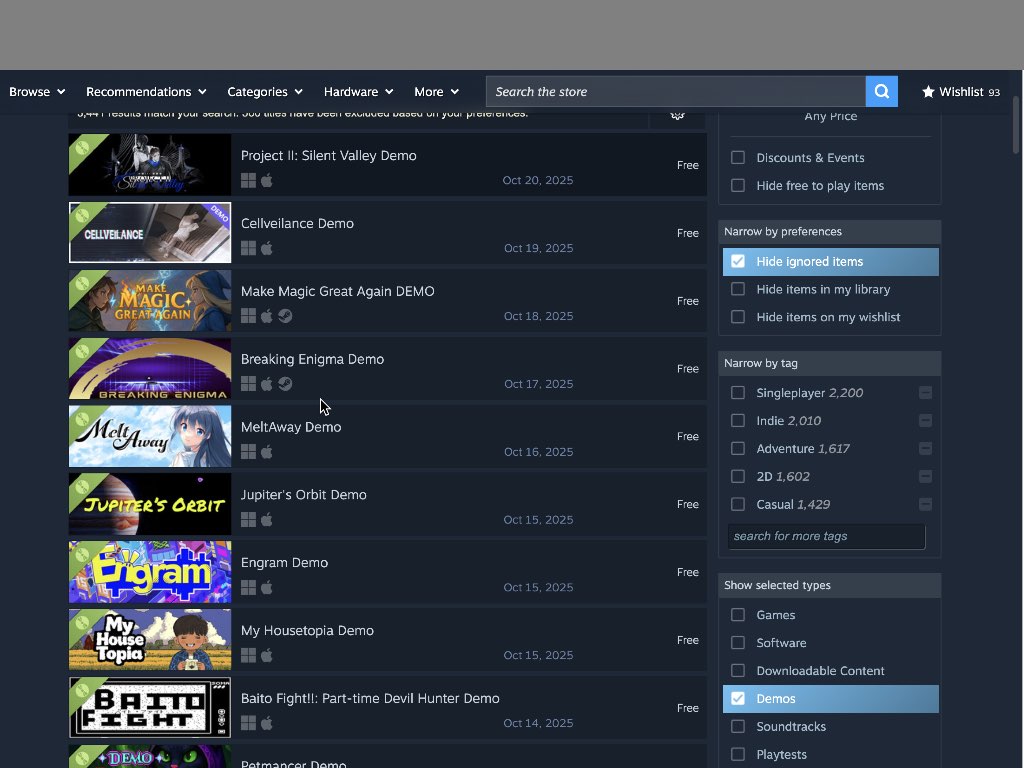

Earlier this year, YouTube announced its plans to introduce tools that would flag face-stealing AI content on the platform. The likeness detection system, similar to the site’s copyright detection system, has now been expanded beyond its initial testing phase. YouTube has notified the first batch of eligible creators that they can use the likeness detection feature. However, to access this feature, creators will need to provide Google with more personal information.

How the Likeness Detection System Works

The likeness detection system is currently in beta testing and is available to a limited number of creators. It can be found in the "Content detection" menu in YouTube Studio. To set up the feature, creators must verify their identity by providing a photo of a government ID and a video of their face. This may seem redundant, since many creators have already posted videos featuring their faces, but identity verification is a prerequisite for the system to work effectively.

Conclusion

The introduction of the likeness detection system is a step in the right direction for regulating AI content on YouTube. While it may not completely eliminate the problem of face-stealing AI videos, it provides creators with a tool to protect their brands and identities. As the technology continues to evolve, it is essential for Google and YouTube to stay ahead of the curve and develop more effective solutions to address the challenges posed by AI content.

FAQs

- What is the likeness detection system on YouTube?

The likeness detection system is a tool that flags face-stealing AI content on the platform.

- Why do creators need to provide personal information to use the likeness detection system?

Creators need to provide a photo of a government ID and a video of their face to verify their identity and ensure the effectiveness of the system.

- Is the likeness detection system available to all creators?

No, the system is currently in beta testing and is only available to a limited number of creators.

- How can creators access the likeness detection system?

The system can be found in the "Content detection" menu in YouTube Studio.

- What is the purpose of the likeness detection system?

The purpose of the system is to protect creators’ brands and identities from face-stealing AI videos.